43 Fully automated directed network provisioning

43.1 Introduction

Directed network provisioning is a feature that allows you to automate the provisioning of downstream clusters. It is useful when you have many downstream clusters to provision.

A management cluster (Chapter 41, Setting up the management cluster) automates deployment of the following components:

SUSE Linux Micro RT as the OS. Depending on the use case, configurations like networking, storage, users and kernel arguments can be customized.

RKE2 as the Kubernetes cluster. The default CNI plug-in is Cilium. Depending on the use case, certain CNI plug-ins can be used, such as Cilium+Multus.

SUSE Storage

SUSE Security

MetalLB can be used as the load balancer for highly available multi-node clusters.

For more information about SUSE Linux Micro, see Chapter 9, SUSE Linux Micro.

For more information about RKE2, see Chapter 15, RKE2.

For more information about SUSE Storage, see Chapter 16, SUSE Storage.

For more information about SUSE Security, see Chapter 17, SUSE Security.

The following sections describe the different directed network provisioning workflows and some additional features that can be added to the provisioning process:

Section 43.2, “Prepare downstream cluster image for connected scenarios”

Section 43.3, “Prepare downstream cluster image for air-gap scenarios”

Section 43.4, “Downstream cluster provisioning with Directed network provisioning (single-node)”

Section 43.5, “Downstream cluster provisioning with Directed network provisioning (multi-node)”

Section 43.7, “Telco features (DPDK, SR-IOV, CPU isolation, huge pages, NUMA, etc.)”

Section 43.9, “Downstream cluster provisioning in air-gapped scenarios”

The following sections show how to prepare the different scenarios for the directed network provisioning workflow using SUSE Telco Cloud. For examples of the different configuration options for deployment (including air-gapped environments, DHCP and DHCP-less networks, private container registries, etc.), see the SUSE Telco Cloud repository.

43.2 Prepare downstream cluster image for connected scenarios

Edge Image Builder (Chapter 11, Edge Image Builder) is used to prepare a modified SLEMicro base image which is provisioned on downstream cluster hosts.

Edge Image Builder supports much more configuration, but this guide covers only the minimal configuration necessary to set up the downstream cluster.

43.2.1 Prerequisites for connected scenarios

A container runtime such as Podman or Rancher Desktop is required to run Edge Image Builder.

The base image is built by following Chapter 28, Building Updated SUSE Linux Micro Images with Kiwi, with the profile Base-SelfInstall (or Base-RT-SelfInstall for the Real-Time kernel). The process is the same for both architectures (x86-64 and aarch64).

The build host must have the same architecture as the images being built. In other words, to build an aarch64 image, you must use an aarch64 build host, and vice versa for x86-64 (cross-builds are not supported at this time).

43.2.2 Image configuration for connected scenarios

When running Edge Image Builder, a directory is mounted from the host, so it is necessary to create a directory structure to store the configuration files used to define the target image.

downstream-cluster-config.yaml is the image definition file; see Chapter 3, Standalone clusters with Edge Image Builder for more details.

The base image, that is, the output raw image generated by following Chapter 28, Building Updated SUSE Linux Micro Images with Kiwi with the profile Base-SelfInstall (or Base-RT-SelfInstall for the Real-Time kernel), must be copied/moved under the base-images folder.

The network folder is optional; see Section 43.2.2.6, “Additional script for Advanced Network Configuration” for more details.

The custom/scripts directory contains scripts to be run on first boot:

01-fix-growfs.sh is required to resize the OS root partition on deployment.

02-performance.sh is optional and can be used to configure the system for performance tuning.

03-sriov.sh is optional and can be used to configure the system for SR-IOV.

The custom/files directory contains the performance-settings.sh and sriov-auto-filler.sh files to be copied to the image during the image creation process.

```
├── downstream-cluster-config.yaml
├── base-images/
│   └ SL-Micro.x86_64-6.2-Base-GM.raw
├── network/
|   └ configure-network.sh
└── custom/
    └ scripts/
    |   └ 01-fix-growfs.sh
    |   └ 02-performance.sh
    |   └ 03-sriov.sh
    └ files/
        └ performance-settings.sh
        └ sriov-auto-filler.sh
```

43.2.2.1 Downstream cluster image definition file

The downstream-cluster-config.yaml file is the main configuration file for the downstream cluster image. The following is a minimal example for deployment via Metal3:

```yaml
apiVersion: 1.3
image:
  imageType: raw
  arch: x86_64
  baseImage: SL-Micro.x86_64-6.2-Base-GM.raw
  outputImageName: eibimage-output-telco.raw
operatingSystem:
  kernelArgs:
    - ignition.platform.id=openstack
  systemd:
    disable:
      - rebootmgr
      - transactional-update.timer
      - transactional-update-cleanup.timer
      - fstrim
      - time-sync.target
  users:
    - username: root
      encryptedPassword: $ROOT_PASSWORD
      sshKeys:
        - $USERKEY1
  packages:
    packageList:
      - jq
    sccRegistrationCode: $SCC_REGISTRATION_CODE
```

Where $SCC_REGISTRATION_CODE is the registration code copied from SUSE Customer Center, and the package list contains jq, which is required.

$ROOT_PASSWORD is the encrypted password for the root user, which can be useful for testing and debugging. It can be generated with the openssl passwd -6 PASSWORD command.

For production environments, SSH keys are recommended: add them to the users block, replacing $USERKEY1 with the real SSH keys.
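A minimal sketch for filling in the password placeholder before running Edge Image Builder. It assumes that `$ROOT_PASSWORD` is kept as a literal placeholder in `downstream-cluster-config.yaml` and filled in by hand, that GNU `sed` and OpenSSL 1.1.1 or later (for `passwd -6`) are available, and `set_root_password` is a hypothetical helper name, not part of EIB.

```bash
#!/bin/bash
set -euo pipefail

# Hypothetical helper (not part of EIB): replace the literal $ROOT_PASSWORD
# placeholder in an EIB definition file with a SHA-512 crypt hash.
set_root_password() {
  local definition_file="$1" plain_password="$2" hash
  # "openssl passwd -6" prints a "$6$<salt>$<hash>" string
  hash="$(openssl passwd -6 "${plain_password}")"
  # '|' is the sed delimiter because the hash can contain '/';
  # '\$' makes sed match the literal '$' of the placeholder
  sed -i 's|\$ROOT_PASSWORD|'"${hash}"'|' "${definition_file}"
}
```

For example, `set_root_password downstream-cluster-config.yaml 'MyTestPassword'`. The plain-text password ends up in the shell history, so this is only suitable for test setups.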

arch: x86_64 is the architecture of the image. For arm64 architecture, use arch: aarch64.
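Because cross-builds are not supported, a pre-flight check can compare the `arch` value of the definition file with the build host before starting a long build. A minimal sketch, assuming `uname -m` reports `x86_64` or `aarch64` (as it does on Linux hosts) and that the first `arch:` key in the file is the one in the `image` block; `check_build_arch` is a hypothetical name.

```bash
#!/bin/bash
set -euo pipefail

# Hypothetical pre-flight check (not part of EIB): cross-builds are not
# supported, so the "arch:" value of the definition file must match the host.
check_build_arch() {
  local definition_file="$1" wanted host
  # First line whose first field is "arch:", e.g. "  arch: x86_64" -> x86_64
  wanted="$(awk '$1 == "arch:" { print $2; exit }' "${definition_file}")"
  host="$(uname -m)"
  if [[ "${wanted}" != "${host}" ]]; then
    echo "definition requests '${wanted}', build host is '${host}'" >&2
    return 1
  fi
  echo "architecture OK: ${host}"
}
```

For example, run `check_build_arch downstream-cluster-config.yaml` before the `podman run` build command.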

Note: ignition.platform.id=openstack is mandatory; without this argument, SLEMicro configuration via Ignition fails in the Metal3 automated flow.

43.2.2.2 Growfs script

Currently, a custom script (custom/scripts/01-fix-growfs.sh) is required to grow the file system to match the disk size on first-boot after provisioning. The 01-fix-growfs.sh script contains the following information:

```bash
#!/bin/bash
growfs() {
  mnt="$1"
  dev="$(findmnt --fstab --target ${mnt} --evaluate --real --output SOURCE --noheadings)"
  # /dev/sda3 -> /dev/sda, /dev/nvme0n1p3 -> /dev/nvme0n1
  parent_dev="/dev/$(lsblk --nodeps -rno PKNAME "${dev}")"
  # Last number in the device name: /dev/nvme0n1p42 -> 42
  partnum="$(echo "${dev}" | sed 's/^.*[^0-9]\([0-9]\+\)$/\1/')"
  ret=0
  growpart "$parent_dev" "$partnum" || ret=$?
  # growpart exits with 1 when the partition cannot be grown further (NOCHANGE);
  # treat that as success and fail only on other errors
  [ $ret -eq 0 ] || [ $ret -eq 1 ] || exit 1
  /usr/lib/systemd/systemd-growfs "$mnt"
}
growfs /
```

43.2.2.3 Performance script

The following optional script (custom/scripts/02-performance.sh) can be used to configure the system for performance tuning:

```bash
#!/bin/bash
# create the folder to extract the artifacts there
mkdir -p /opt/performance-settings
# copy the artifacts
cp performance-settings.sh /opt/performance-settings/
```

The content of custom/files/performance-settings.sh is a script that can be used to configure the system for performance tuning and can be downloaded from the following link.

43.2.2.4 SR-IOV script

The following optional script (custom/scripts/03-sriov.sh) can be used to configure the system for SR-IOV:

```bash
#!/bin/bash
# create the folder to extract the artifacts there
mkdir -p /opt/sriov
# copy the artifacts
cp sriov-auto-filler.sh /opt/sriov/sriov-auto-filler.sh
```

The content of custom/files/sriov-auto-filler.sh is a script that can be used to configure the system for SR-IOV and can be downloaded from the following link.

Add your own custom scripts to be executed during the provisioning process using the same approach. For more information, see Chapter 3, Standalone clusters with Edge Image Builder.

43.2.2.5 Additional configuration for Telco workloads

To enable Telco features like DPDK, SR-IOV or FEC, additional packages may be required, as shown in the following example.

```yaml
apiVersion: 1.3
image:
  imageType: raw
  arch: x86_64
  baseImage: SL-Micro.x86_64-6.2-Base-GM.raw
  outputImageName: eibimage-output-telco.raw
operatingSystem:
  kernelArgs:
    - ignition.platform.id=openstack
  systemd:
    disable:
      - rebootmgr
      - transactional-update.timer
      - transactional-update-cleanup.timer
      - fstrim
      - time-sync.target
  users:
    - username: root
      encryptedPassword: $ROOT_PASSWORD
      sshKeys:
        - $USERKEY1
  packages:
    packageList:
      - jq
      - dpdk
      - dpdk-tools
      - libdpdk-25
      - pf-bb-config
    sccRegistrationCode: $SCC_REGISTRATION_CODE
```

Where $SCC_REGISTRATION_CODE is the registration code copied from SUSE Customer Center, and the package list contains the minimum packages to be used for the Telco profiles.

arch: x86_64 is the architecture of the image. For arm64 architecture, use arch: aarch64.

43.2.2.6 Additional script for Advanced Network Configuration

If you need to configure static IPs or more advanced networking scenarios as described in Section 43.6, “Advanced Network Configuration”, the following additional configuration is required.

In the network folder, create the following configure-network.sh file. It consumes configuration drive data on first boot and configures the host networking using the NM Configurator tool.

```bash
#!/bin/bash

set -eux

# Attempt to statically configure a NIC in the case where we find a network_data.json
# In a configuration drive

CONFIG_DRIVE=$(blkid --label config-2 || true)
if [ -z "${CONFIG_DRIVE}" ]; then
  echo "No config-2 device found, skipping network configuration"
  exit 0
fi

mount -o ro $CONFIG_DRIVE /mnt

NETWORK_DATA_FILE="/mnt/openstack/latest/network_data.json"

if [ ! -f "${NETWORK_DATA_FILE}" ]; then
  umount /mnt
  echo "No network_data.json found, skipping network configuration"
  exit 0
fi

DESIRED_HOSTNAME=$(cat /mnt/openstack/latest/meta_data.json | tr ',{}' '\n' | grep '\"metal3-name\"' | sed 's/.*\"metal3-name\": \"\(.*\)\"/\1/')
echo "${DESIRED_HOSTNAME}" > /etc/hostname

mkdir -p /tmp/nmc/{desired,generated}
cp ${NETWORK_DATA_FILE} /tmp/nmc/desired/_all.yaml
umount /mnt

./nmc generate --config-dir /tmp/nmc/desired --output-dir /tmp/nmc/generated
./nmc apply --config-dir /tmp/nmc/generated
```

43.2.3 Image creation

Once the directory structure is prepared following the previous sections, run the following command to build the image:

```bash
podman run --rm --privileged -it -v $PWD:/eib \
  registry.suse.com/edge/3.5/edge-image-builder:1.3.2 \
  build --definition-file downstream-cluster-config.yaml
```

This creates the output raw image file named eibimage-output-telco.raw, based on the definition described above.

The output image must then be made available via a web server, either the media-server container enabled via the Management Cluster Documentation (Note) or some other locally accessible server. In the examples below, this server is referred to as imagecache.local:8080.
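The Metal3MachineTemplate used later in this chapter validates the image against a checksum file served next to it (`eibimage-output-telco.raw.sha256`). A minimal sketch for producing that file, assuming GNU coreutils `sha256sum` on the build host; `write_image_checksum` is a hypothetical helper.

```bash
#!/bin/bash
set -euo pipefail

# Hypothetical helper: write <image>.sha256 next to the image in the
# "<hash>  <filename>" format printed by sha256sum, then verify it.
write_image_checksum() {
  local image="$1" dir base
  dir="$(dirname "${image}")"
  base="$(basename "${image}")"
  # Run in the image directory so the checksum line holds only the file name
  ( cd "${dir}" \
    && sha256sum "${base}" > "${base}.sha256" \
    && sha256sum --check --quiet "${base}.sha256" )
}
```

For example, `write_image_checksum eibimage-output-telco.raw`, then copy both files to the directory served as imagecache.local:8080.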

43.3 Prepare downstream cluster image for air-gap scenarios

Edge Image Builder (Chapter 11, Edge Image Builder) is used to prepare a modified SLEMicro base image which is provisioned on downstream cluster hosts.

Edge Image Builder supports much more configuration, but this guide covers only the minimal configuration necessary to set up the downstream cluster for air-gap scenarios.

43.3.1 Prerequisites for air-gap scenarios

A container runtime such as Podman or Rancher Desktop is required to run Edge Image Builder.

The base image is built by following Chapter 28, Building Updated SUSE Linux Micro Images with Kiwi, with the profile Base-SelfInstall (or Base-RT-SelfInstall for the Real-Time kernel). The process is the same for both architectures (x86-64 and aarch64).

If you want to use SR-IOV or any other workload that requires a container image, a local private registry must already be deployed and configured (with or without TLS and/or authentication). This registry is used to store the images and the helm chart OCI images.

The build host must have the same architecture as the images being built. In other words, to build an aarch64 image, you must use an aarch64 build host, and vice versa for x86-64 (cross-builds are not supported at this time).

43.3.2 Image configuration for air-gap scenarios

When running Edge Image Builder, a directory is mounted from the host, so it is necessary to create a directory structure to store the configuration files used to define the target image.

downstream-cluster-airgap-config.yaml is the image definition file; see Chapter 3, Standalone clusters with Edge Image Builder for more details.

The base image, that is, the output raw image generated by following Chapter 28, Building Updated SUSE Linux Micro Images with Kiwi with the profile Base-SelfInstall (or Base-RT-SelfInstall for the Real-Time kernel), must be copied/moved under the base-images folder.

The network folder is optional; see Section 43.2.2.6, “Additional script for Advanced Network Configuration” for more details.

The custom/scripts directory contains scripts to be run on first boot:

01-fix-growfs.sh is required to resize the OS root partition on deployment.

02-airgap.sh is required in air-gapped environments to copy the embedded artifacts to the right place.

03-performance.sh is optional and can be used to configure the system for performance tuning.

04-sriov.sh is optional and can be used to configure the system for SR-IOV.

The custom/files directory contains the rke2 and the CNI images to be copied to the image during the image creation process. The optional performance-settings.sh and sriov-auto-filler.sh files can also be included.

```
├── downstream-cluster-airgap-config.yaml
├── base-images/
│   └ SL-Micro.x86_64-6.2-Base-GM.raw
├── network/
|   └ configure-network.sh
└── custom/
    └ files/
    |   └ install.sh
    |   └ rke2-images-cilium.linux-amd64.tar.zst
    |   └ rke2-images-core.linux-amd64.tar.zst
    |   └ rke2-images-multus.linux-amd64.tar.zst
    |   └ rke2-images.linux-amd64.tar.zst
    |   └ rke2.linux-amd64.tar.gz
    |   └ sha256sum-amd64.txt
    |   └ performance-settings.sh
    |   └ sriov-auto-filler.sh
    └ scripts/
        └ 01-fix-growfs.sh
        └ 02-airgap.sh
        └ 03-performance.sh
        └ 04-sriov.sh
```

43.3.2.1 Downstream cluster image definition file

The downstream-cluster-airgap-config.yaml file is the main configuration file for the downstream cluster image and the content has been described in the previous section (Section 43.2.2.5, “Additional configuration for Telco workloads”).

43.3.2.2 Growfs script

Currently, a custom script (custom/scripts/01-fix-growfs.sh) is required to grow the file system to match the disk size on first-boot after provisioning. The 01-fix-growfs.sh script contains the following information:

```bash
#!/bin/bash
growfs() {
  mnt="$1"
  dev="$(findmnt --fstab --target ${mnt} --evaluate --real --output SOURCE --noheadings)"
  # /dev/sda3 -> /dev/sda, /dev/nvme0n1p3 -> /dev/nvme0n1
  parent_dev="/dev/$(lsblk --nodeps -rno PKNAME "${dev}")"
  # Last number in the device name: /dev/nvme0n1p42 -> 42
  partnum="$(echo "${dev}" | sed 's/^.*[^0-9]\([0-9]\+\)$/\1/')"
  ret=0
  growpart "$parent_dev" "$partnum" || ret=$?
  # growpart exits with 1 when the partition cannot be grown further (NOCHANGE);
  # treat that as success and fail only on other errors
  [ $ret -eq 0 ] || [ $ret -eq 1 ] || exit 1
  /usr/lib/systemd/systemd-growfs "$mnt"
}
growfs /
```

43.3.2.3 Air-gap script

The following script (custom/scripts/02-airgap.sh) is required to copy the artifacts embedded in the image to the right place on first boot:

```bash
#!/bin/bash
# create the folder to extract the artifacts there
mkdir -p /opt/rke2-artifacts
mkdir -p /var/lib/rancher/rke2/agent/images
# copy the artifacts
cp install.sh /opt/
cp rke2-images*.tar.zst rke2.linux-amd64.tar.gz sha256sum-amd64.txt /opt/rke2-artifacts/
```
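Because 02-airgap.sh copies fixed file names, a missing or misnamed artifact would only surface when the script runs on the deployed host. A minimal pre-flight sketch that checks custom/files for exactly the names this script copies before building the image; it assumes amd64 artifacts, and `check_airgap_files` is a hypothetical helper.

```bash
#!/bin/bash
set -euo pipefail

# Hypothetical pre-flight check: every artifact copied by 02-airgap.sh
# must be present in custom/files before running EIB (amd64 names assumed).
check_airgap_files() {
  local files_dir="$1" missing=0 f
  for f in install.sh rke2.linux-amd64.tar.gz sha256sum-amd64.txt; do
    if [[ ! -f "${files_dir}/${f}" ]]; then
      echo "missing: ${files_dir}/${f}" >&2
      missing=1
    fi
  done
  # The script copies rke2-images*.tar.zst, so at least one must match
  if ! compgen -G "${files_dir}/rke2-images*.tar.zst" > /dev/null; then
    echo "missing: ${files_dir}/rke2-images*.tar.zst" >&2
    missing=1
  fi
  return "${missing}"
}
```

For example, run `check_airgap_files custom/files` from the EIB directory before the build.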

43.3.2.4 Performance script

The following optional script (custom/scripts/03-performance.sh) can be used to configure the system for performance tuning:

```bash
#!/bin/bash
# create the folder to extract the artifacts there
mkdir -p /opt/performance-settings
# copy the artifacts
cp performance-settings.sh /opt/performance-settings/
```

The content of custom/files/performance-settings.sh is a script that can be used to configure the system for performance tuning and can be downloaded from the following link.

43.3.2.5 SR-IOV script

The following optional script (custom/scripts/04-sriov.sh) can be used to configure the system for SR-IOV:

```bash
#!/bin/bash
# create the folder to extract the artifacts there
mkdir -p /opt/sriov
# copy the artifacts
cp sriov-auto-filler.sh /opt/sriov/sriov-auto-filler.sh
```

The content of custom/files/sriov-auto-filler.sh is a script that can be used to configure the system for SR-IOV and can be downloaded from the following link.

43.3.2.6 Custom files for air-gap scenarios

The custom/files directory contains the rke2 and the cni images to be copied to the image during the image creation process.

To generate the images easily, prepare them locally using the following script and the list of images here; the script generates the artifacts to be included in custom/files.

Also, you can download the latest rke2-install script from here.

$ ./edge-save-rke2-images.sh -o custom/files -l ~/edge-release-rke2-images.txt
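Once the script has finished, the RKE2 artifacts can be checked against sha256sum-amd64.txt before building the image. A minimal sketch, assuming the file uses the standard `sha256sum` format (as the checksum files published with RKE2 releases do) and may also list files that were not downloaded, which `--ignore-missing` skips; `verify_rke2_artifacts` is a hypothetical helper.

```bash
#!/bin/bash
set -euo pipefail

# Hypothetical helper: verify downloaded RKE2 artifacts against the
# sha256sum-<arch>.txt file stored in the same directory.
verify_rke2_artifacts() {
  local dir="$1" arch="${2:-amd64}"
  # --ignore-missing skips entries for files that are not present locally
  ( cd "${dir}" && sha256sum --check --ignore-missing "sha256sum-${arch}.txt" )
}
```

For example, `verify_rke2_artifacts custom/files` prints one `OK` line per verified file and returns non-zero if any checksum does not match.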

After downloading the images, the directory structure should look like this:

```
└── custom/
    └ files/
        └ install.sh
        └ rke2-images-cilium.linux-amd64.tar.zst
        └ rke2-images-core.linux-amd64.tar.zst
        └ rke2-images-multus.linux-amd64.tar.zst
        └ rke2-images.linux-amd64.tar.zst
        └ rke2.linux-amd64.tar.gz
        └ sha256sum-amd64.txt
```

43.3.2.7 Preload your private registry with images required for air-gap scenarios and SR-IOV (optional)

If you want to use SR-IOV or any other workload images in your air-gap scenario, you must preload your local private registry with the images by following these steps:

Download, extract, and push the helm-chart OCI images to the private registry

Download, extract, and push the rest of images required to the private registry

The following scripts can be used to download, extract, and push the images to the private registry. The example below preloads the SR-IOV images, but the same approach can be used to preload any other custom images:

Preload with helm-chart OCI images for SR-IOV:

You must create a list with the helm-chart OCI images required:

```
$ cat > edge-release-helm-oci-artifacts.txt <<EOF
edge/charts/sriov-network-operator:305.0.4+up1.6.0
edge/charts/sriov-crd:305.0.4+up1.6.0
EOF
```

Generate a local tarball file using the following script and the list created above:

```
$ ./edge-save-oci-artefacts.sh -al ./edge-release-helm-oci-artifacts.txt -s registry.suse.com
Pulled: registry.suse.com/edge/charts/sriov-network-operator:305.0.4+up1.6.0
Pulled: registry.suse.com/edge/charts/sriov-crd:305.0.4+up1.6.0
a edge-release-oci-tgz-20240705
a edge-release-oci-tgz-20240705/sriov-network-operator-305.0.4+up1.6.0.tgz
a edge-release-oci-tgz-20240705/sriov-crd-305.0.4+up1.6.0.tgz
```

Upload the tarball to your private registry (for example, myregistry:5000) using the following script, which preloads your registry with the helm chart OCI images downloaded in the previous step:

```
$ tar zxvf edge-release-oci-tgz-20240705.tgz
$ ./edge-load-oci-artefacts.sh -ad edge-release-oci-tgz-20240705 -r myregistry:5000
```

Preload with the rest of the images required for SR-IOV:

In this case, include the SR-IOV container images for Telco workloads (as a reference, you can get them from the helm chart values):

```
$ cat > edge-release-images.txt <<EOF
rancher/hardened-sriov-network-operator:v1.3.0-build20240816
rancher/hardened-sriov-network-config-daemon:v1.3.0-build20240816
rancher/hardened-sriov-cni:v2.8.1-build20240820
rancher/hardened-ib-sriov-cni:v1.1.1-build20240816
rancher/hardened-sriov-network-device-plugin:v3.7.0-build20240816
rancher/hardened-sriov-network-resources-injector:v1.6.0-build20240816
rancher/hardened-sriov-network-webhook:v1.3.0-build20240816
EOF
```

Generate the tarball with the required images locally, using the following script and the list created above:

```
$ ./edge-save-images.sh -l ./edge-release-images.txt -s registry.suse.com
Image pull success: registry.suse.com/rancher/hardened-sriov-network-operator:v1.3.0-build20240816
Image pull success: registry.suse.com/rancher/hardened-sriov-network-config-daemon:v1.3.0-build20240816
Image pull success: registry.suse.com/rancher/hardened-sriov-cni:v2.8.1-build20240820
Image pull success: registry.suse.com/rancher/hardened-ib-sriov-cni:v1.1.1-build20240816
Image pull success: registry.suse.com/rancher/hardened-sriov-network-device-plugin:v3.7.0-build20240816
Image pull success: registry.suse.com/rancher/hardened-sriov-network-resources-injector:v1.6.0-build20240816
Image pull success: registry.suse.com/rancher/hardened-sriov-network-webhook:v1.3.0-build20240816
Creating edge-images.tar.gz with 7 images
```

Upload the tarball to your private registry (for example, myregistry:5000) using the following script, which preloads your private registry with the images downloaded in the previous step:

```
$ tar zxvf edge-release-images-tgz-20240705.tgz
$ ./edge-load-images.sh -ad edge-release-images-tgz-20240705 -r myregistry:5000
```

43.3.3 Image creation for air-gap scenarios

Once the directory structure is prepared following the previous sections, run the following command to build the image:

```bash
podman run --rm --privileged -it -v $PWD:/eib \
  registry.suse.com/edge/3.5/edge-image-builder:1.3.2 \
  build --definition-file downstream-cluster-airgap-config.yaml
```

This creates the output raw image file named eibimage-output-telco.raw, based on the definition described above.

The output image must then be made available via a web server, either the media-server container enabled via the Management Cluster Documentation (Note) or some other locally accessible server. In the examples below, this server is referred to as imagecache.local:8080.

43.4 Downstream cluster provisioning with Directed network provisioning (single-node)

This section describes the workflow used to automate the provisioning of a single-node downstream cluster using directed network provisioning. This is the simplest way to automate the provisioning of a downstream cluster.

Requirements

The image generated using EIB, as described in the previous section (Section 43.2, “Prepare downstream cluster image for connected scenarios”), with the minimal configuration to set up the downstream cluster, must be located in the management cluster exactly at the path you configured in this section (Note).

The management server must be created and available to be used in the following sections. For more information, refer to the Management Cluster section, Chapter 41, Setting up the management cluster.

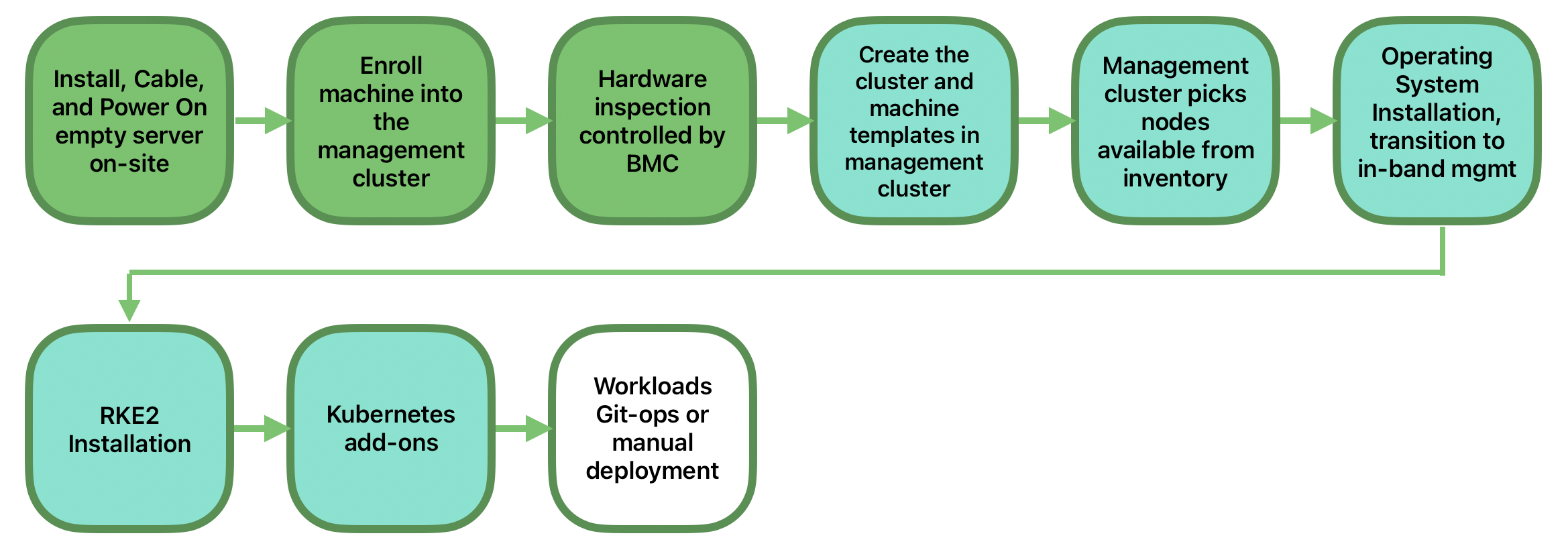

Workflow

The following diagram shows the workflow used to automate the provisioning of a single-node downstream cluster using directed network provisioning:

There are two different steps to automate the provisioning of a single-node downstream cluster using directed network provisioning:

Enroll the bare-metal host to make it available for the provisioning process.

Provision the bare-metal host to install and configure the operating system and the Kubernetes cluster.

Enroll the bare-metal host

The first step is to enroll the new bare-metal host in the management cluster to make it available to be provisioned.

To do that, create the following file (bmh-example.yaml) in the management cluster to specify the BMC credentials to be used and the BareMetalHost object to be enrolled:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: example-demo-credentials
type: Opaque
data:
  username: ${BMC_USERNAME}
  password: ${BMC_PASSWORD}
---
apiVersion: metal3.io/v1alpha1
kind: BareMetalHost
metadata:
  name: example-demo
  labels:
    cluster-role: control-plane
spec:
  architecture: x86_64
  online: true
  bootMACAddress: ${BMC_MAC}
  rootDeviceHints:
    deviceName: /dev/nvme0n1
  bmc:
    address: ${BMC_ADDRESS}
    disableCertificateVerification: true
    credentialsName: example-demo-credentials
```

where:

${BMC_USERNAME}: The user name for the BMC of the new bare-metal host.

${BMC_PASSWORD}: The password for the BMC of the new bare-metal host.

${BMC_MAC}: The MAC address of the new bare-metal host to be used.

${BMC_ADDRESS}: The URL for the bare-metal host BMC (for example, redfish-virtualmedia://192.168.200.75/redfish/v1/Systems/1/). To learn more about the different options available depending on your hardware provider, check the following link.

Because the credentials are stored in the data field of the Secret, the values replacing ${BMC_USERNAME} and ${BMC_PASSWORD} must be base64-encoded.

Architecture must be either x86_64 or aarch64, depending on the architecture of the bare-metal host to be enrolled.

If no network configuration for the host has been specified, either at image build time or through the BareMetalHost definition, an autoconfiguration mechanism (DHCP, DHCPv6, SLAAC) is used. For more details or complex configurations, see Section 43.6, “Advanced Network Configuration”.
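The following minimal sketch renders bmh-example.yaml from a copy of the manifest above kept as a template, base64-encoding the two credentials for the Secret's data field and using the other values as-is. It assumes GNU `base64` and `sed`; `render_bmh` and the template file name `bmh-example.yaml.tpl` are hypothetical, and values containing `|`, `&` or `\` would need extra escaping.

```bash
#!/bin/bash
set -euo pipefail

# Hypothetical helper: fill the ${BMC_*} placeholders of a BareMetalHost
# manifest template and print the result on stdout.
render_bmh() {
  local template="$1" bmc_user="$2" bmc_pass="$3" bmc_mac="$4" bmc_address="$5"
  local user_b64 pass_b64
  # printf '%s' avoids encoding a trailing newline; -w0 disables line wrapping
  user_b64="$(printf '%s' "${bmc_user}" | base64 -w0)"
  pass_b64="$(printf '%s' "${bmc_pass}" | base64 -w0)"
  # '\$' matches the literal '$' of each ${...} placeholder
  sed -e 's|\${BMC_USERNAME}|'"${user_b64}"'|' \
      -e 's|\${BMC_PASSWORD}|'"${pass_b64}"'|' \
      -e 's|\${BMC_MAC}|'"${bmc_mac}"'|' \
      -e 's|\${BMC_ADDRESS}|'"${bmc_address}"'|' \
      "${template}"
}
```

For example: `render_bmh bmh-example.yaml.tpl admin 'secret' 00:11:22:33:44:55 'redfish-virtualmedia://192.168.200.75/redfish/v1/Systems/1/' > bmh-example.yaml`.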

Once the file is created, run the following command in the management cluster to start enrolling the new bare-metal host:

$ kubectl apply -f bmh-example.yaml

The new bare-metal host object will be enrolled, changing its state from registering to inspecting and available. The changes can be checked using the following command:

$ kubectl get bmh

The BareMetalHost object is in the registering state until the BMC credentials are validated. Once the credentials are validated, the BareMetalHost object changes its state to inspecting; this step can take some time depending on the hardware (up to 20 minutes). During the inspecting phase, the hardware information is retrieved and the Kubernetes object is updated. Check the information using the following command: kubectl get bmh -o yaml.
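Instead of re-running kubectl get bmh by hand, the enrollment can be followed with a small polling loop. A minimal sketch, assuming the state is read from `.status.provisioning.state` (the BareMetalHost status field behind the STATE column); `wait_for_bmh_state` is a hypothetical helper, and the `KUBECTL` override exists only so the loop can be tested without a cluster. The 30-minute default leaves room for the up to 20 minutes of inspection mentioned above.

```bash
#!/bin/bash
set -euo pipefail

# Hypothetical helper: poll a BareMetalHost until it reaches the wanted
# provisioning state. KUBECTL can be overridden; it defaults to kubectl.
wait_for_bmh_state() {
  local name="$1" wanted="${2:-available}" timeout="${3:-1800}" interval="${4:-15}"
  local kubectl="${KUBECTL:-kubectl}" state="" waited=0
  while (( waited < timeout )); do
    state="$("${kubectl}" get bmh "${name}" -o jsonpath='{.status.provisioning.state}' || true)"
    echo "bmh/${name}: ${state:-<none>}"
    [[ "${state}" == "${wanted}" ]] && return 0
    sleep "${interval}"
    waited=$(( waited + interval ))
  done
  echo "timed out waiting for bmh/${name} to become ${wanted}" >&2
  return 1
}
```

For example, `wait_for_bmh_state example-demo available` prints the state every 15 seconds and returns once the host is available.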

Provision step

Once the bare-metal host is enrolled and available, the next step is to provision the bare-metal host to install and configure the operating system and the Kubernetes cluster.

To do that, the following file (capi-provisioning-example.yaml) must be created in the management cluster. It is generated by joining the following blocks into one file, separated by --- lines.

Only values in the form ${…} must be replaced with the real values.

The following block is the cluster definition, where the networking can be configured using the pods and the services blocks. Also, it contains the references to the control plane and the infrastructure (using the Metal3 provider) objects to be used.

```yaml
apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: single-node-cluster
  namespace: default
  labels:
    cluster-api.cattle.io/rancher-auto-import: "true"
spec:
  clusterNetwork:
    pods:
      cidrBlocks:
        - 192.168.0.0/18
        - fd00:bad:cafe::/48
    services:
      cidrBlocks:
        - 10.96.0.0/12
        - fd00:bad:bad:cafe::/112
  controlPlaneRef:
    apiVersion: controlplane.cluster.x-k8s.io/v1beta1
    kind: RKE2ControlPlane
    name: single-node-cluster
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: Metal3Cluster
    name: single-node-cluster
```

Both single-stack and dual-stack deployments are possible; remove the IPv6 CIDRs from the above definition for an IPv4-only cluster.

Single-stack IPv6 deployments are in tech preview status and not yet officially supported.

Adding the label cluster-api.cattle.io/rancher-auto-import: "true" to the cluster.x-k8s.io objects imports the cluster into Rancher (by creating a corresponding clusters.management.cattle.io object). See the Cluster API documentation for more information.

The Metal3Cluster object specifies the control-plane endpoint to be configured (replace ${DOWNSTREAM_CONTROL_PLANE_IPV4} with the real address) and sets noCloudProvider because a bare-metal node is used.

```yaml
apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
kind: Metal3Cluster
metadata:
  name: single-node-cluster
  namespace: default
spec:
  controlPlaneEndpoint:
    host: ${DOWNSTREAM_CONTROL_PLANE_IPV4}
    port: 6443
  noCloudProvider: true
```

The RKE2ControlPlane object specifies the control-plane configuration to be used and the Metal3MachineTemplate object specifies the control-plane image to be used.

Also, it contains the information about the number of replicas to be used (in this case, one) and the CNI plug-in to be used (in this case, Cilium).

The agentConfig block contains the Ignition format to be used and the additionalUserData used to configure the RKE2 node. This includes a systemd unit named rke2-preinstall.service that, during the provisioning process, automatically replaces BAREMETALHOST_UUID and sets node-name using the Ironic information.

To enable Multus with Cilium, a file named rke2-cilium-config.yaml is created in the RKE2 server manifests directory with the configuration to be used.

The block also sets the Kubernetes version: replace ${RKE2_VERSION} with the version of RKE2 to be used (for example, v1.34.2+rke2r1).

```yaml
apiVersion: controlplane.cluster.x-k8s.io/v1beta1
kind: RKE2ControlPlane
metadata:
  name: single-node-cluster
  namespace: default
  annotations: {
    rke2.controlplane.cluster.x-k8s.io/load-balancer-exclusion: "true"
  }
spec:
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: Metal3MachineTemplate
    name: single-node-cluster-controlplane
  replicas: 1
  version: ${RKE2_VERSION}
  rolloutStrategy:
    type: "RollingUpdate"
    rollingUpdate:
      maxSurge: 0
  serverConfig:
    cni: cilium
  agentConfig:
    format: ignition
    additionalUserData:
      config: |
        variant: fcos
        version: 1.4.0
        systemd:
          units:
            - name: rke2-preinstall.service
              enabled: true
              contents: |
                [Unit]
                Description=rke2-preinstall
                Wants=network-online.target
                Before=rke2-install.service
                ConditionPathExists=!/run/cluster-api/bootstrap-success.complete
                [Service]
                Type=oneshot
                User=root
                ExecStartPre=/bin/sh -c "mount -L config-2 /mnt"
                ExecStart=/bin/sh -c "sed -i \"s/BAREMETALHOST_UUID/$(jq -r .uuid /mnt/openstack/latest/meta_data.json)/\" /etc/rancher/rke2/config.yaml"
                ExecStart=/bin/sh -c "echo \"node-name: $(jq -r .name /mnt/openstack/latest/meta_data.json)\" >> /etc/rancher/rke2/config.yaml"
                ExecStartPost=/bin/sh -c "umount /mnt"
                [Install]
                WantedBy=multi-user.target
        storage:
          files:
            # https://docs.rke2.io/networking/multus_sriov#using-multus-with-cilium
            - path: /var/lib/rancher/rke2/server/manifests/rke2-cilium-config.yaml
              overwrite: true
              contents:
                inline: |
                  apiVersion: helm.cattle.io/v1
                  kind: HelmChartConfig
                  metadata:
                    name: rke2-cilium
                    namespace: kube-system
                  spec:
                    valuesContent: |-
                      cni:
                        exclusive: false
              mode: 0644
              user:
                name: root
              group:
                name: root
    kubelet:
      extraArgs:
        - provider-id=metal3://BAREMETALHOST_UUID
    nodeName: "localhost.localdomain"
```

The Metal3MachineTemplate object specifies the following information:

The dataTemplate to be used as a reference to the template.

The hostSelector to be used, matching the label created during the enrollment process.

The image to be used, as a reference to the image generated using EIB in the previous section (Section 43.2, “Prepare downstream cluster image for connected scenarios”), and the checksum and checksumType used to validate the image.

```yaml
apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
kind: Metal3MachineTemplate
metadata:
  name: single-node-cluster-controlplane
  namespace: default
spec:
  template:
    spec:
      dataTemplate:
        name: single-node-cluster-controlplane-template
      hostSelector:
        matchLabels:
          cluster-role: control-plane
      image:
        checksum: http://imagecache.local:8080/eibimage-output-telco.raw.sha256
        checksumType: sha256
        format: raw
        url: http://imagecache.local:8080/eibimage-output-telco.raw
```

The Metal3DataTemplate object specifies the metaData for the downstream cluster.

```yaml
apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
kind: Metal3DataTemplate
metadata:
  name: single-node-cluster-controlplane-template
  namespace: default
spec:
  clusterName: single-node-cluster
  metaData:
    objectNames:
      - key: name
        object: machine
      - key: local-hostname
        object: machine
      - key: local_hostname
        object: machine
```

Once the file is created by joining the previous blocks, run the following command in the management cluster to start provisioning the new bare-metal host:

$ kubectl apply -f capi-provisioning-example.yaml

43.5 Downstream cluster provisioning with Directed network provisioning (multi-node) #

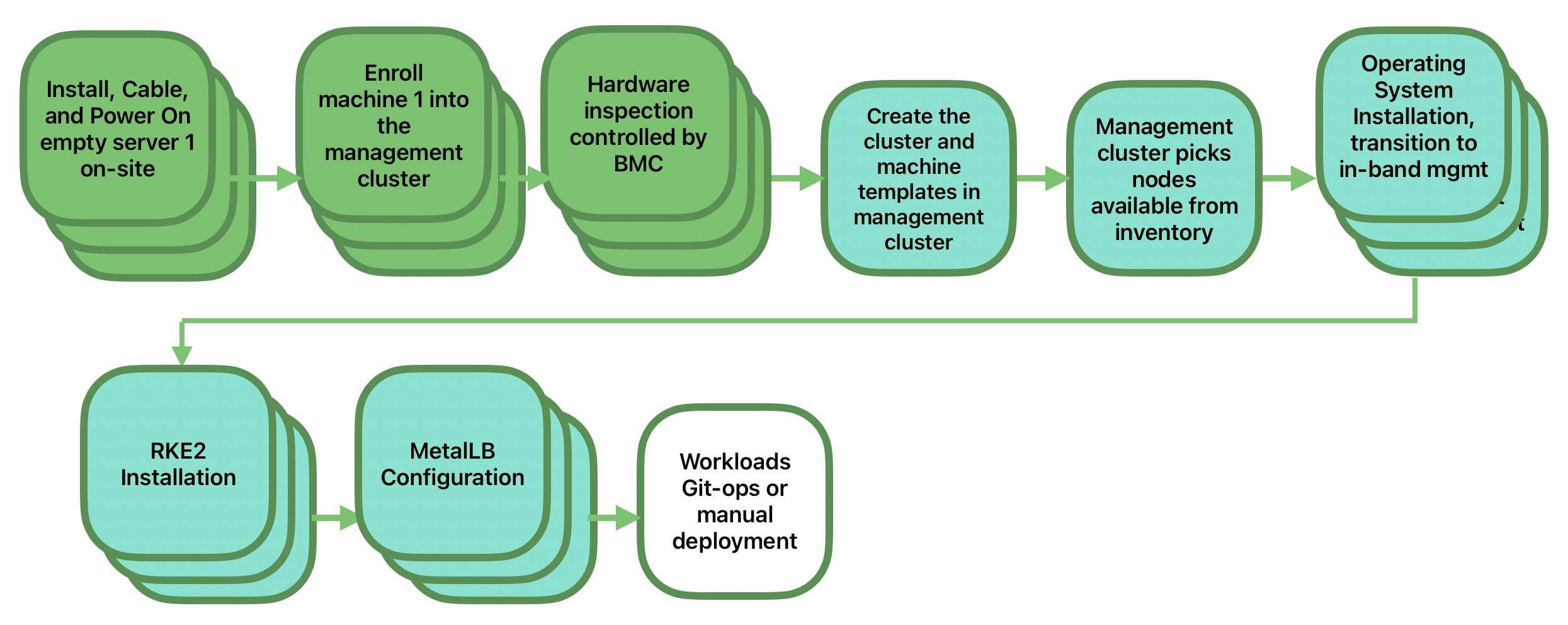

This section describes the workflow used to automate the provisioning of a multi-node downstream cluster using directed network provisioning, with MetalLB as the load-balancer strategy.

This is the simplest way to automate the provisioning of a multi-node downstream cluster. The diagram above shows the workflow using directed network provisioning and MetalLB.

Requirements

The image generated using EIB, as described in the previous section (Section 43.2, “Prepare downstream cluster image for connected scenarios”), with the minimal configuration to set up the downstream cluster, must be located in the management cluster at exactly the path you configured in that section (Note).

The management server must be created and available for use in the following sections. For more information, refer to the Management Cluster section: Chapter 41, Setting up the management cluster.

Workflow

The workflow used to automate the provisioning of a multi-node downstream cluster using directed network provisioning consists of the following steps:

Enroll the three bare-metal hosts to make them available for the provisioning process.

Provision the three bare-metal hosts to install and configure the operating system and the Kubernetes cluster, using MetalLB as the load balancer.

Enroll the bare-metal hosts

The first step is to enroll the three bare-metal hosts in the management cluster to make them available to be provisioned.

To do that, create the following files (bmh-example-node1.yaml, bmh-example-node2.yaml and bmh-example-node3.yaml) in the management cluster. They specify the BMC credentials to be used and the BareMetalHost object to be enrolled in the management cluster.

Only the values between ${…} have to be replaced with the real values. We will walk you through the process for only one host; the same steps apply to the other two nodes.

apiVersion: v1
kind: Secret
metadata:
  name: node1-example-credentials
type: Opaque
data:
  username: ${BMC_NODE1_USERNAME}
  password: ${BMC_NODE1_PASSWORD}
---
apiVersion: metal3.io/v1alpha1
kind: BareMetalHost
metadata:
  name: node1-example
  labels:
    cluster-role: control-plane
spec:
  architecture: x86_64
  online: true
  bootMACAddress: ${BMC_NODE1_MAC}
  bmc:
    address: ${BMC_NODE1_ADDRESS}
    disableCertificateVerification: true
    credentialsName: node1-example-credentials

Where:

${BMC_NODE1_USERNAME} — The username for the BMC of the first bare-metal host, base64-encoded because the Secret stores it under data.

${BMC_NODE1_PASSWORD} — The password for the BMC of the first bare-metal host, also base64-encoded.

${BMC_NODE1_MAC} — The MAC address of the first bare-metal host to be used.

${BMC_NODE1_ADDRESS} — The URL for the BMC of the first bare-metal host (for example, redfish-virtualmedia://192.168.200.75/redfish/v1/Systems/1/). Where the existing infrastructure allows, the host part of the URL can be an IP address (v4 or v6) or a domain name. To learn more about the different options available depending on your hardware provider, check the following link.
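The Secret above places the BMC credentials under `data`, so Kubernetes expects each value to be base64-encoded. Plain-text values are only accepted under `stringData`. The following POSIX shell sketch shows one way to produce the encoded values. It assumes the `base64` utility (coreutils) is available; the helper name `b64` and the sample credentials are hypothetical.

```shell
# Hypothetical helper: base64-encode a value for a Secret "data" field.
# printf '%s' avoids encoding a trailing newline into the credential, and
# tr removes the line wrapping that some base64 implementations add.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Example (illustrative credentials, not real defaults):
#   BMC_NODE1_USERNAME=$(b64 'admin')      # YWRtaW4=
#   BMC_NODE1_PASSWORD=$(b64 'password')   # cGFzc3dvcmQ=
```

Encoding a stray newline is a common cause of BMC authentication failures, which is why the sketch uses `printf '%s'` rather than `echo`.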

If no network configuration has been specified for the host, either at image build time or through the BareMetalHost definition, an autoconfiguration mechanism (DHCP, DHCPv6, SLAAC) is used. For more details or complex configurations, check Section 43.6, “Advanced Network Configuration”.

Single-stack IPv6 clusters are in tech preview status and not yet officially supported.

Architecture must be either x86_64 or aarch64, depending on the architecture of the bare-metal host to be enrolled.

All modern servers come with a dual-stack capable BMC; however, IPv6 support (and possibly the option of using hostnames for the VirtualMedia capability) should be verified before use in production in a dual-stack environment.
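The walkthrough above covers node1 only, and the files for the other two nodes differ mainly in their object names and placeholders. The following sketch derives them from the node1 file. It assumes you follow the naming used here (`node1-…` objects and `${BMC_NODE1_…}` placeholders); the helper name is hypothetical. Labels such as `cluster-role` are copied unchanged, so adjust them by hand if a node needs a different role.

```shell
# Hypothetical helper: derive bmh-example-node<N>.yaml from the node1 file by
# renaming the node1-* object names and the ${BMC_NODE1_*} placeholders.
# Labels such as cluster-role are copied unchanged. Prints the new file path.
make_node_file() {
  src=$1                      # e.g. bmh-example-node1.yaml
  n=$2                        # target node number, e.g. 2
  dst="$(dirname "$src")/bmh-example-node${n}.yaml"
  sed -e "s/node1-/node${n}-/g" -e "s/NODE1_/NODE${n}_/g" "$src" > "$dst"
  printf '%s\n' "$dst"
}

# Usage sketch:
#   for n in 2 3; do make_node_file bmh-example-node1.yaml "$n"; done
```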

Once the files are created, run the following commands in the management cluster to start enrolling the bare-metal hosts:

$ kubectl apply -f bmh-example-node1.yaml
$ kubectl apply -f bmh-example-node2.yaml
$ kubectl apply -f bmh-example-node3.yaml

The new bare-metal host objects are enrolled, and their state changes from registering to inspecting and finally to available. You can check the changes using the following command:

$ kubectl get bmh -o wide

The BareMetalHost object stays in the registering state until the BMC credentials are validated. Once the credentials are validated, the BareMetalHost object changes its state to inspecting. This step can take some time depending on the hardware (up to 20 minutes). During the inspecting phase, the hardware information is retrieved and the Kubernetes object is updated. Check the information using the following command: kubectl get bmh -o yaml.
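Instead of re-running `kubectl get bmh` by hand, you can script the wait. A minimal sketch, with these assumptions:

* `kubectl` points at the management cluster.
* The hosts are in the current namespace.
* The state is read from the BareMetalHost field `.status.provisioning.state`, which is the value shown in the STATE column.

The function name is hypothetical. The 1200-second default timeout mirrors the "up to 20 minutes" mentioned above.

```shell
# Hypothetical helper: poll a BareMetalHost until it reaches the wanted
# provisioning state, or give up after a timeout (in seconds).
wait_for_bmh_state() {
  host=$1
  want=$2
  timeout=${3:-1200}   # inspection can take up to ~20 minutes
  interval=${4:-30}
  elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    state=$(kubectl get bmh "$host" -o jsonpath='{.status.provisioning.state}' 2>/dev/null)
    echo "$host: ${state:-unknown}"
    [ "$state" = "$want" ] && return 0
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "timed out waiting for $host to become $want" >&2
  return 1
}

# Usage sketch:
#   for n in 1 2 3; do wait_for_bmh_state "node${n}-example" available; done
```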

Provision step

Once the three bare-metal hosts are enrolled and available, the next step is to provision the bare-metal hosts to install and configure the operating system and the Kubernetes cluster, creating a load balancer to manage them.

To do that, create the following file (capi-provisioning-example.yaml) in the management cluster with the following information. You can generate capi-provisioning-example.yaml by joining the following blocks.
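Each block in this section is a separate YAML document. When you join them into capi-provisioning-example.yaml, a `---` line must separate each one. If you keep each block in its own file while editing, a small POSIX shell sketch such as the following can assemble them. The helper and the per-block file names are hypothetical.

```shell
# Hypothetical helper: concatenate YAML blocks into one multi-document file,
# inserting the "---" document separator between consecutive blocks.
join_manifests() {
  out=$1
  shift
  : > "$out"                        # start from an empty output file
  first=1
  for block in "$@"; do
    [ "$first" -eq 1 ] || printf '%s\n' '---' >> "$out"
    cat "$block" >> "$out"
    # Make sure the block ends with a newline before the next separator.
    [ -z "$(tail -c 1 "$block")" ] || printf '\n' >> "$out"
    first=0
  done
}

# Usage sketch (hypothetical file names, one per block):
#   join_manifests capi-provisioning-example.yaml cluster.yaml metal3cluster.yaml \
#     rke2controlplane.yaml metal3machinetemplate.yaml metal3datatemplate.yaml
```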

Only values between ${…} must be replaced with the real values.

The VIP address is a reserved IP address that is not assigned to any node and is used to configure the load balancer. In a dual-stack cluster, both an IPv4 and an IPv6 address can be specified, but the following examples give priority to the IPv4 address.
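Filling in the `${…}` placeholders by hand across several blocks is error-prone. The following POSIX shell sketch replaces only the placeholders you pass as `NAME=value` arguments and then lists any that remain. It relies only on `sed` and `grep`; a tool such as `envsubst` could also be used. The helper names and the sample values in the usage note are hypothetical. Literal tokens such as `BAREMETALHOST_UUID` are not `${…}` placeholders, so they are left for rke2-preinstall.service to fill in.

```shell
# Hypothetical helpers for filling in the ${NAME} placeholders of a block.
# Only ${NAME} patterns passed as NAME=value are replaced.
render_template() {
  template=$1
  shift
  script=""
  for pair in "$@"; do
    name=${pair%%=*}
    value=${pair#*=}
    # Escape characters that are special in a sed replacement: \ & and the | delimiter.
    esc=$(printf '%s' "$value" | sed -e 's/[\\&|]/\\&/g')
    script="${script}s|\\\${${name}}|${esc}|g;"
  done
  sed -e "$script" "$template"
}

# Print (with line numbers) any ${NAME} placeholder that is still present.
list_unreplaced() {
  grep -n '\${[A-Za-z_][A-Za-z0-9_-]*}' "$1"
}
```

For example, `render_template capi-provisioning-example.yaml EDGE_VIP_ADDRESS_IPV4=192.168.122.100 RKE2_VERSION=v1.34.2+rke2r1 > rendered.yaml` followed by `list_unreplaced rendered.yaml` shows what is still missing before you run `kubectl apply`. The sketch does not handle values that contain newlines.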

Below is the cluster definition, where the cluster network can be configured using the pods and the services blocks. Also, it contains the references to the control plane and the infrastructure (using the Metal3 provider) objects to be used.

apiVersion: cluster.x-k8s.io/v1beta1
kind: Cluster
metadata:
  name: multinode-cluster
  namespace: default
  labels:
    cluster-api.cattle.io/rancher-auto-import: "true"
spec:
  clusterNetwork:
    pods:
      cidrBlocks:
        - 192.168.0.0/18
        - fd00:1234:4321::/48
    services:
      cidrBlocks:
        - 10.96.0.0/12
        - fd00:5678:8765:4321::/112
  controlPlaneRef:
    apiVersion: controlplane.cluster.x-k8s.io/v1beta1
    kind: RKE2ControlPlane
    name: multinode-cluster
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: Metal3Cluster
    name: multinode-cluster

Both single-stack and dual-stack deployments are possible. For an IPv4-only cluster, remove the IPv6 CIDRs and the IPv6 VIP addresses (in the subsequent sections).

Adding the label cluster-api.cattle.io/rancher-auto-import: "true" to the cluster.x-k8s.io objects imports the cluster into Rancher by creating a corresponding clusters.management.cattle.io object. See the Cluster API documentation for more information.

The Metal3Cluster object specifies the control-plane endpoint, which uses the reserved VIP address (replace ${EDGE_VIP_ADDRESS_IPV4}). It also sets noCloudProvider, because the cluster runs on the three bare-metal nodes.

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
kind: Metal3Cluster
metadata:
  name: multinode-cluster
  namespace: default
spec:
  controlPlaneEndpoint:
    host: ${EDGE_VIP_ADDRESS_IPV4}
    port: 6443
  noCloudProvider: true

The RKE2ControlPlane object specifies the control-plane configuration to be used, and the Metal3MachineTemplate object specifies the control-plane image to be used.

A load balancer exclusion annotation, which informs external load balancers such as MetalLB that a node is going to be drained during lifecycle operations like upgrades of downstream clusters. For details, see Section 44.1, “Load Balancer Exclusion”.

The number of replicas to be used (in this case, three).

The advertisement mode to be used by the load balancer (address uses the L2 implementation), as well as the address to be used (replace ${EDGE_VIP_ADDRESS} with the VIP address).

The serverConfig with the CNI plug-in to be used (in this case, Cilium), and the additional VIP address(es) and name(s) to be listed under tlsSan.

The agentConfig block, which contains the Ignition format to be used and the additionalUserData used to configure the RKE2 node with information like:

The systemd service named rke2-preinstall.service, which automatically replaces BAREMETALHOST_UUID and node-name during the provisioning process using the Ironic information.

The storage block, which contains the Helm charts used to install MetalLB and the endpoint-copier-operator.

The metalLB custom resource file with the IPAddressPool and the L2Advertisement to be used (replace ${EDGE_VIP_ADDRESS_IPV4} with the VIP address).

The endpoint-svc.yaml file, which configures the kubernetes-vip service that MetalLB uses to manage the VIP address.

The last block of information contains the Kubernetes version to be used. Replace ${RKE2_VERSION} with the RKE2 version to deploy (for example, v1.34.2+rke2r1).
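The following sketch makes the rke2-preinstall.service step concrete by showing the edit it performs on /etc/rancher/rke2/config.yaml. The real unit mounts the config drive labeled config-2 and reads `.uuid` and `.name` from /mnt/openstack/latest/meta_data.json with `jq`. This sketch instead takes those two values as arguments, so it runs without `jq` or a config drive. It assumes GNU `sed` for `sed -i`. The helper name and the sample values are hypothetical.

```shell
# Sketch of the edit rke2-preinstall.service makes to the RKE2 config.
# The real unit obtains uuid and name with jq from the config-2 drive;
# here they are passed in directly.
apply_ironic_metadata() {
  config=$1   # normally /etc/rancher/rke2/config.yaml
  uuid=$2     # .uuid from meta_data.json
  name=$3     # .name from meta_data.json
  # Fill in the provider-id placeholder (first occurrence per line, as in the unit).
  sed -i "s/BAREMETALHOST_UUID/${uuid}/" "$config"
  # Append the node name, as the second ExecStart line does.
  echo "node-name: ${name}" >> "$config"
}
```

This is also why BAREMETALHOST_UUID must stay as a literal token in the manifests: it is replaced on the node at first boot, not when you fill in the `${…}` placeholders.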

apiVersion: controlplane.cluster.x-k8s.io/v1beta1
kind: RKE2ControlPlane
metadata:
  name: multinode-cluster
  namespace: default
  annotations: {
    rke2.controlplane.cluster.x-k8s.io/load-balancer-exclusion: "true"
  }
spec:
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: Metal3MachineTemplate
    name: multinode-cluster-controlplane
  replicas: 3
  version: ${RKE2_VERSION}
  rolloutStrategy:
    type: "RollingUpdate"
    rollingUpdate:
      maxSurge: 0
  registrationMethod: "control-plane-endpoint"
  registrationAddress: ${EDGE_VIP_ADDRESS}
  serverConfig:
    cni: cilium
    tlsSan:
      - ${EDGE_VIP_ADDRESS_IPV4}
      - ${EDGE_VIP_ADDRESS_IPV6}
      - https://${EDGE_VIP_ADDRESS_IPV4}.sslip.io
      - https://${EDGE_VIP_ADDRESS_IPV6}.sslip.io
  agentConfig:
    format: ignition
    additionalUserData:
      config: |
        variant: fcos
        version: 1.4.0
        systemd:
          units:
            - name: rke2-preinstall.service
              enabled: true
              contents: |
                [Unit]
                Description=rke2-preinstall
                Wants=network-online.target
                Before=rke2-install.service
                ConditionPathExists=!/run/cluster-api/bootstrap-success.complete
                [Service]
                Type=oneshot
                User=root
                ExecStartPre=/bin/sh -c "mount -L config-2 /mnt"
                ExecStart=/bin/sh -c "sed -i \"s/BAREMETALHOST_UUID/$(jq -r .uuid /mnt/openstack/latest/meta_data.json)/\" /etc/rancher/rke2/config.yaml"
                ExecStart=/bin/sh -c "echo \"node-name: $(jq -r .name /mnt/openstack/latest/meta_data.json)\" >> /etc/rancher/rke2/config.yaml"
                ExecStartPost=/bin/sh -c "umount /mnt"
                [Install]
                WantedBy=multi-user.target
        storage:
          files:
            # https://docs.rke2.io/networking/multus_sriov#using-multus-with-cilium
            - path: /var/lib/rancher/rke2/server/manifests/rke2-cilium-config.yaml
              overwrite: true
              contents:
                inline: |
                  apiVersion: helm.cattle.io/v1
                  kind: HelmChartConfig
                  metadata:
                    name: rke2-cilium
                    namespace: kube-system
                  spec:
                    valuesContent: |-
                      cni:
                        exclusive: false
              mode: 0644
              user:
                name: root
              group:
                name: root
            - path: /var/lib/rancher/rke2/server/manifests/endpoint-copier-operator.yaml
              overwrite: true
              contents:
                inline: |
                  apiVersion: helm.cattle.io/v1
                  kind: HelmChart
                  metadata:
                    name: endpoint-copier-operator
                    namespace: kube-system
                  spec:
                    chart: oci://registry.suse.com/edge/charts/endpoint-copier-operator
                    targetNamespace: endpoint-copier-operator
                    version: 305.0.1+up0.3.0
                    createNamespace: true
            - path: /var/lib/rancher/rke2/server/manifests/metallb.yaml
              overwrite: true
              contents:
                inline: |
                  apiVersion: helm.cattle.io/v1
                  kind: HelmChart
                  metadata:
                    name: metallb
                    namespace: kube-system
                  spec:
                    chart: oci://registry.suse.com/edge/charts/metallb
                    targetNamespace: metallb-system
                    version: 305.0.1+up0.15.2
                    createNamespace: true
            - path: /var/lib/rancher/rke2/server/manifests/metallb-cr.yaml
              overwrite: true
              contents:
                inline: |
                  apiVersion: metallb.io/v1beta1
                  kind: IPAddressPool
                  metadata:
                    name: kubernetes-vip-ip-pool
                    namespace: metallb-system
                  spec:
                    addresses:
                      - ${EDGE_VIP_ADDRESS_IPV4}/32
                      - ${EDGE_VIP_ADDRESS_IPV6}/128
                    serviceAllocation:
                      priority: 100
                      namespaces:
                        - default
                      serviceSelectors:
                        - matchExpressions:
                            - {key: "serviceType", operator: In, values: [kubernetes-vip]}
                  ---
                  apiVersion: metallb.io/v1beta1
                  kind: L2Advertisement
                  metadata:
                    name: ip-pool-l2-adv
                    namespace: metallb-system
                  spec:
                    ipAddressPools:
                      - kubernetes-vip-ip-pool
            - path: /var/lib/rancher/rke2/server/manifests/endpoint-svc.yaml
              overwrite: true
              contents:
                inline: |
                  apiVersion: v1
                  kind: Service
                  metadata:
                    name: kubernetes-vip
                    namespace: default
                    labels:
                      serviceType: kubernetes-vip
                  spec:
                    ipFamilyPolicy: PreferDualStack
                    ports:
                      - name: rke2-api
                        port: 9345
                        protocol: TCP
                        targetPort: 9345
                      - name: k8s-api
                        port: 6443
                        protocol: TCP
                        targetPort: 6443
                    type: LoadBalancer
    kubelet:
      extraArgs:
        - provider-id=metal3://BAREMETALHOST_UUID
    nodeName: "Node-multinode-cluster"

The Metal3MachineTemplate object specifies the following information:

The dataTemplate to be used as a reference to the template.

The hostSelector, which matches the label created during the enrollment process.

The image to be used, as a reference to the image generated using EIB in the previous section (Section 43.2, “Prepare downstream cluster image for connected scenarios”), and the checksum and checksumType used to validate the image.

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
kind: Metal3MachineTemplate
metadata:
  name: multinode-cluster-controlplane
  namespace: default
spec:
  template:
    spec:
      dataTemplate:
        name: multinode-cluster-controlplane-template
      hostSelector:
        matchLabels:
          cluster-role: control-plane
      image:
        checksum: http://imagecache.local:8080/eibimage-output-telco.raw.sha256
        checksumType: sha256
        format: raw
        url: http://imagecache.local:8080/eibimage-output-telco.raw

The Metal3DataTemplate object specifies the metaData for the downstream cluster.

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
kind: Metal3DataTemplate
metadata:
  name: multinode-cluster-controlplane-template
  namespace: default
spec:
  clusterName: multinode-cluster
  metaData:
    objectNames:
      - key: name
        object: machine
      - key: local-hostname
        object: machine
      - key: local_hostname
        object: machine

The following YAML blocks are an example configuration for the worker nodes.

A MachineDeployment:

apiVersion: cluster.x-k8s.io/v1beta1
kind: MachineDeployment
metadata:
  labels:
    cluster.x-k8s.io/cluster-name: multinode-cluster
    nodepool: nodepool-0
  name: multinode-cluster-workers
  namespace: default
spec:
  clusterName: multinode-cluster
  replicas: 3
  selector:
    matchLabels:
      cluster.x-k8s.io/cluster-name: multinode-cluster
      nodepool: nodepool-0
  template:
    metadata:
      labels:
        cluster.x-k8s.io/cluster-name: multinode-cluster
        nodepool: nodepool-0
    spec:
      bootstrap:
        configRef:
          apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
          kind: RKE2ConfigTemplate
          name: multinode-cluster-workers
      clusterName: multinode-cluster
      infrastructureRef:
        apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
        kind: Metal3MachineTemplate
        name: multinode-cluster-workers
      nodeDrainTimeout: 0s
      version: ${RKE2_VERSION}

The RKE2ConfigTemplate object specifies the configuration template to be used for the multi-node cluster worker nodes.

apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
kind: RKE2ConfigTemplate
metadata:
  name: multinode-cluster-workers
  namespace: default
spec:
  template:
    spec:
      agentConfig:
        format: ignition
        kubelet:
          extraArgs:
            - provider-id=metal3://BAREMETALHOST_UUID
        nodeName: "Node-multinode-cluster-worker"
        additionalUserData:
          config: |
            variant: fcos
            version: 1.4.0
            systemd:
              units:
                - name: rke2-preinstall.service
                  enabled: true
                  contents: |
                    [Unit]
                    Description=rke2-preinstall
                    Wants=network-online.target
                    Before=rke2-install.service
                    ConditionPathExists=!/run/cluster-api/bootstrap-success.complete
                    [Service]
                    Type=oneshot
                    User=root
                    ExecStartPre=/bin/sh -c "mount -L config-2 /mnt"
                    ExecStart=/bin/sh -c "sed -i \"s/BAREMETALHOST_UUID/$(jq -r .uuid /mnt/openstack/latest/meta_data.json)/\" /etc/rancher/rke2/config.yaml"
                    ExecStart=/bin/sh -c "echo \"node-name: $(jq -r .name /mnt/openstack/latest/meta_data.json)\" >> /etc/rancher/rke2/config.yaml"
                    ExecStartPost=/bin/sh -c "umount /mnt"
                    [Install]
                    WantedBy=multi-user.target

The Metal3MachineTemplate object contains references to the dataTemplate, hostSelector, and image for the worker nodes:

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
kind: Metal3MachineTemplate
metadata:
  name: multinode-cluster-workers
  namespace: default
spec:
  template:
    spec:
      dataTemplate:
        name: multinode-cluster-workers-template
      hostSelector:
        matchLabels:
          cluster-role: worker
      image:
        checksum: http://imagecache.local:8080/eibimage-slmicro-rt-telco.raw.sha256
        checksumType: sha256
        format: raw
        url: http://imagecache.local:8080/eibimage-slmicro-rt-telco.raw

The Metal3DataTemplate object specifies the metaData for the downstream cluster for the worker nodes:

apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
kind: Metal3DataTemplate
metadata:
  name: multinode-cluster-workers-template
  namespace: default
spec:
  clusterName: multinode-cluster
  metaData:
    objectNames:
      - key: name
        object: machine
      - key: local-hostname
        object: machine
      - key: local_hostname
        object: machine

Once the file is created by joining the previous blocks, run the following command in the management cluster to start provisioning the three new bare-metal hosts:

$ kubectl apply -f capi-provisioning-example.yaml

43.6 Advanced Network Configuration #

The directed network provisioning workflow allows for specific network configurations in downstream clusters, such as static IPs, bonding, VLANs, IPv6, etc.

The following sections describe the additional steps required to enable provisioning downstream clusters using advanced network configuration.

Requirements

The image generated using EIB has to include the network folder and the script described in Section 43.2.2.6, “Additional script for Advanced Network Configuration”.

Configuration

Before proceeding, refer to one of the following sections for guidance on the steps required to enroll and provision the host(s):

Downstream cluster provisioning with Directed network provisioning (single-node) (Section 43.4, “Downstream cluster provisioning with Directed network provisioning (single-node)”)

Downstream cluster provisioning with Directed network provisioning (multi-node) (Section 43.5, “Downstream cluster provisioning with Directed network provisioning (multi-node)”)

Any advanced network configuration must be applied at enrollment time through the BareMetalHost definition and an associated Secret containing an nmstate-formatted networkData block. The following example file defines a Secret containing the required networkData, which requests a static IP address and a VLAN for the downstream cluster host:

apiVersion: v1
kind: Secret
metadata:
  name: controlplane-0-networkdata
type: Opaque
stringData:
  networkData: |
    interfaces:
    - name: ${CONTROLPLANE_INTERFACE}
      type: ethernet
      state: up
      mtu: 1500
      identifier: mac-address
      mac-address: "${CONTROLPLANE_MAC}"
      ipv4:
        address:
        - ip: "${CONTROLPLANE_IP}"
          prefix-length: "${CONTROLPLANE_PREFIX}"
        enabled: true
        dhcp: false
    - name: floating
      type: vlan
      state: up
      vlan:
        base-iface: ${CONTROLPLANE_INTERFACE}
        id: ${VLAN_ID}
    dns-resolver:
      config:
        server:
        - "${DNS_SERVER}"
    routes:
      config:
      - destination: 0.0.0.0/0
        next-hop-address: "${CONTROLPLANE_GATEWAY}"
        next-hop-interface: ${CONTROLPLANE_INTERFACE}

This example configures the interface with a static IP address and adds a VLAN on top of that base interface. Replace the following variables with the actual values for your infrastructure:

${CONTROLPLANE_INTERFACE} — The control-plane interface to be used for the edge cluster (for example, eth0). Because identifier: mac-address is set, the interface is matched by its MAC address, so any interface name can be used.

${CONTROLPLANE_IP} — The IP address to be used as an endpoint for the edge cluster (must match the kube-apiserver endpoint).

${CONTROLPLANE_PREFIX} — The prefix length of the edge cluster network (for example, 24 for /24 or 255.255.255.0).

${CONTROLPLANE_GATEWAY} — The gateway to be used for the edge cluster (for example, 192.168.100.1).

${CONTROLPLANE_MAC} — The MAC address to be used for the control-plane interface (for example, 00:0c:29:3e:3e:3e).

${DNS_SERVER} — The DNS server to be used for the edge cluster (for example, 192.168.100.2).

${VLAN_ID} — The VLAN ID to be used for the edge cluster (for example, 100).
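${CONTROLPLANE_PREFIX} expects a prefix length, not a dotted netmask. If your network plan lists masks, a small sketch like the following converts one into the other and rejects masks whose 1-bits are not contiguous. It uses POSIX shell plus `awk`; the helper name is hypothetical.

```shell
# Hypothetical helper: convert a dotted IPv4 netmask (e.g. 255.255.255.0)
# into a prefix length (e.g. 24). Exits non-zero for malformed or
# non-contiguous masks such as 255.0.255.0.
mask_to_prefix() {
  printf '%s\n' "$1" | awk -F. '
    NF != 4 { exit 1 }
    {
      bits = 0; seen_zero = 0
      for (i = 1; i <= 4; i++) {
        o = $i + 0
        if ($i !~ /^[0-9]+$/ || o > 255) exit 1
        # Walk the octet from its most significant bit down.
        for (b = 128; b >= 1; b = int(b / 2)) {
          if (o >= b) { if (seen_zero) exit 1; bits++; o -= b }
          else seen_zero = 1
        }
      }
      print bits
    }'
}

# Examples:
#   mask_to_prefix 255.255.255.0    # prints 24
#   mask_to_prefix 255.255.252.0    # prints 22
```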

Any other nmstate-compliant definition can be used to configure the network for the downstream cluster to adapt to the specific requirements. For example, it is possible to specify a static dual-stack configuration:

apiVersion: v1
kind: Secret
metadata:
  name: controlplane-0-networkdata
type: Opaque
stringData:
  networkData: |
    interfaces:
    - name: ${CONTROLPLANE_INTERFACE}
      type: ethernet
      state: up
      mac-address: ${CONTROLPLANE_MAC}
      ipv4:
        enabled: true
        dhcp: false
        address:
        - ip: ${CONTROLPLANE_IP_V4}
          prefix-length: ${CONTROLPLANE_PREFIX_V4}
      ipv6:
        enabled: true
        dhcp: false
        autoconf: false
        address:
        - ip: ${CONTROLPLANE_IP_V6}
          prefix-length: ${CONTROLPLANE_PREFIX_V6}
    routes:
      config:
      - destination: 0.0.0.0/0
        next-hop-address: ${CONTROLPLANE_GATEWAY_V4}
        next-hop-interface: ${CONTROLPLANE_INTERFACE}
      - destination: ::/0
        next-hop-address: ${CONTROLPLANE_GATEWAY_V6}
        next-hop-interface: ${CONTROLPLANE_INTERFACE}
    dns-resolver:
      config:
        server:
        - ${DNS_SERVER_V4}
        - ${DNS_SERVER_V6}

As for the previous example, replace the following variables with actual values, according to your infrastructure:

${CONTROLPLANE_IP_V4} - the IPv4 address to assign to the host

${CONTROLPLANE_PREFIX_V4} - the IPv4 prefix of the network to which the host IP belongs

${CONTROLPLANE_IP_V6} - the IPv6 address to assign to the host

${CONTROLPLANE_PREFIX_V6} - the IPv6 prefix of the network to which the host IP belongs

${CONTROLPLANE_GATEWAY_V4} - the IPv4 address of the gateway for the traffic matching the default route

${CONTROLPLANE_GATEWAY_V6} - the IPv6 address of the gateway for the traffic matching the default route

${CONTROLPLANE_INTERFACE} - the name of the interface to assign the addresses to and to use for egress traffic matching the default route, for both IPv4 and IPv6

${DNS_SERVER_V4} and/or ${DNS_SERVER_V6} - the IP address(es) of the DNS server(s) to use, which can be specified as single or multiple entries. Both IPv4 and/or IPv6 addresses are supported

Refer to the SUSE Telco Cloud examples repository for more complex examples, including IPv6-only and dual-stack configurations.

Single-stack IPv6 deployments are in tech preview status and not yet officially supported.

Lastly, regardless of the network configuration details, reference the Secret by adding preprovisioningNetworkDataName to the BareMetalHost object. This is required for the host to be successfully enrolled in the management cluster.

apiVersion: v1
kind: Secret
metadata:
  name: example-demo-credentials
type: Opaque
data:
  username: ${BMC_USERNAME}
  password: ${BMC_PASSWORD}
---
apiVersion: metal3.io/v1alpha1
kind: BareMetalHost
metadata:
  name: example-demo
  labels:
    cluster-role: control-plane
spec:
  architecture: x86_64
  online: true
  bootMACAddress: ${BMC_MAC}
  rootDeviceHints:
    deviceName: /dev/nvme0n1
  bmc:
    address: ${BMC_ADDRESS}
    disableCertificateVerification: true
    credentialsName: example-demo-credentials
  preprovisioningNetworkDataName: controlplane-0-networkdata

If you need to deploy a multi-node cluster, repeat the same process for each node.

The Metal3DataTemplate, networkData and Metal3 IPAM are currently not supported; only the configuration via static secrets is fully supported.

Architecture must be either x86_64 or aarch64, depending on the architecture of the bare-metal host to be enrolled.

43.7 Telco features (DPDK, SR-IOV, CPU isolation, huge pages, NUMA, etc.) #

The directed network provisioning workflow lets you automate the configuration of the Telco features that the downstream clusters need to run Telco workloads on top of those servers.

Requirements

The image generated using EIB, as described in the previous section (Section 43.2, “Prepare downstream cluster image for connected scenarios”), must be located in the management cluster at exactly the path you configured in that section (Note).

The image generated using EIB has to include the specific Telco packages described in Section 43.2.2.5, “Additional configuration for Telco workloads”.

The management server must be created and available for use in the following sections. For more information, refer to the Management Cluster section: Chapter 41, Setting up the management cluster.

Configuration

Use the following two sections as the base to enroll and provision the hosts:

Downstream cluster provisioning with Directed network provisioning (single-node) (Section 43.4, “Downstream cluster provisioning with Directed network provisioning (single-node)”)

Downstream cluster provisioning with Directed network provisioning (multi-node) (Section 43.5, “Downstream cluster provisioning with Directed network provisioning (multi-node)”)

The Telco features covered in this section are the following:

DPDK and VFs creation

SR-IOV and VFs allocation to be used by the workloads

CPU isolation and performance tuning

Huge pages configuration

Kernel parameters tuning

The changes required to enable the Telco features shown above are all inside the RKE2ControlPlane block in the provision file capi-provisioning-example.yaml. The rest of the information inside the file capi-provisioning-example.yaml is the same as the information provided in the provisioning section (Section 43.4, “Downstream cluster provisioning with Directed network provisioning (single-node)”).

To make the process clear, the changes required in that block (RKE2ControlPlane) to enable the Telco features are the following:

The preRKE2Commands, used to execute commands before the RKE2 installation process. In this case, the modprobe command loads the vfio-pci kernel module with SR-IOV support enabled (enable_sriov=1).

The ignition file /var/lib/rancher/rke2/server/manifests/configmap-sriov-custom-auto.yaml, used to define the interfaces, drivers and number of VFs to be created and exposed to the workloads.

The values inside the config map sriov-custom-auto-config are the only values to be replaced with real values:

${RESOURCE_NAME1} — The resource name to be used for the first PF interface (for example, sriov-resource-du1). It is appended to the prefix rancher.io to form the label used by the workloads (for example, rancher.io/sriov-resource-du1).

${SRIOV-NIC-NAME1} — The name of the first PF interface to be used (for example, eth0).

${PF_NAME1} — The name of the first physical function (PF) to be used. More complex filters can be generated using this value (for example, eth0#2-5).

${DRIVER_NAME1} — The driver name to be used for the first VF interface (for example, vfio-pci).

${NUM_VFS1} — The number of VFs to be created for the first PF interface (for example, 8).

The variables ending in 2 (for example, ${RESOURCE_NAME2}) configure the second PF interface in the same way.

The /var/sriov-auto-filler.sh script, which translates between the high-level config map sriov-custom-auto-config and the sriovnetworknodepolicy, which contains the low-level hardware information. The script spares the user from having to know the hardware information in advance. No changes are required in this file, but it must be present if you need to enable SR-IOV and create VFs.

The kernel arguments to be used to enable the following features:

Parameter | Value | Description |
isolcpus | domain,nohz,managed_irq,1-30,33-62 | Isolates the cores 1-30 and 33-62. |
skew_tick | 1 | Offsets the periodic timer tick per CPU, so that the timer interrupts of the CPUs do not fire simultaneously. |
nohz | on | Enables dynamic ticks, so that the periodic timer tick is stopped on idle CPUs. |
nohz_full | 1-30,33-62 | Enables full dynticks on the listed cores. This kernel boot parameter is the current main interface to configure full dynticks along with CPU isolation. |
rcu_nocbs | 1-30,33-62 | Offloads the RCU callbacks of the listed cores to kernel threads, so that the callbacks are not invoked on those cores. |
irqaffinity | 0,31,32,63 | Sets the default IRQ affinity to the listed (non-isolated) cores, keeping interrupts away from the isolated cores. |
idle | poll | Minimizes the latency of exiting the idle state. |
iommu | pt | Allows the use of vfio for the DPDK interfaces. |
intel_iommu | on | Enables the use of vfio for VFs. |
hugepagesz | 1G | Sets the size of huge pages to 1 G. |
hugepages | 40 | Number of huge pages of the size defined by hugepagesz. |
default_hugepagesz | 1G | Sets the default huge page size. |
nowatchdog | | Disables the watchdog. |
nmi_watchdog | 0 | Disables the NMI watchdog. |
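In the table above, irqaffinity lists exactly the CPUs that are not isolated, so for a given CPU count the two lists are complements of each other. The example values imply a 64-CPU host (CPUs 0-63). Under that assumption, the following sketch derives the non-isolated list, a candidate value for ${NON-ISOLATED_CPU_CORES}, from the isolated one (${ISOLATED_CPU_CORES}). The helper name is hypothetical. If no CPU count is given, it falls back to `nproc`.

```shell
# Hypothetical helper: print the CPUs that are NOT in an isolated CPU list.
# CPUs are assumed to be numbered 0..total-1.
non_isolated_cpus() {
  isolated=$1                 # e.g. 1-30,33-62
  total=${2:-$(nproc)}        # number of CPUs; defaults to the local count
  awk -v list="$isolated" -v total="$total" 'BEGIN {
    n = split(list, parts, ",")
    for (i = 1; i <= n; i++) {
      # Each entry is either a single CPU ("5") or a range ("1-30").
      if (split(parts[i], r, "-") == 2) { lo = r[1]; hi = r[2] } else { lo = parts[i]; hi = parts[i] }
      for (c = lo + 0; c <= hi + 0; c++) iso[c] = 1
    }
    out = ""
    for (c = 0; c < total + 0; c++)
      if (!(c in iso)) out = out (out == "" ? "" : ",") c
    print out
  }'
}

# Example from the table above (64 CPUs):
#   non_isolated_cpus 1-30,33-62 64    # prints 0,31,32,63
```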

The following systemd services are used to enable the following:

rke2-preinstall.service, which automatically replaces BAREMETALHOST_UUID and node-name during the provisioning process using the Ironic information.

cpu-partitioning.service, which enables the isolation of the CPU cores (for example, 1-30,33-62).

performance-settings.service, which enables the CPU performance tuning.

sriov-custom-auto-vfs.service, which installs the sriov Helm chart and waits until the custom resources are created. It then runs /var/sriov-auto-filler.sh to replace the values in the config map sriov-custom-auto-config and create the sriovnetworknodepolicy used by the workloads.

Replace ${RKE2_VERSION} with the version of RKE2 to be used (for example, v1.34.2+rke2r1).
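After a node is provisioned, you may want to confirm that it actually booted with the kernel arguments listed in the table. A minimal sketch: it checks that each expected argument appears as a whole, space-separated word in a kernel command line file, which on the node is normally /proc/cmdline. The helper name is hypothetical.

```shell
# Hypothetical helper: report which expected kernel arguments are missing
# from a kernel command line file. Returns non-zero if any are missing.
check_kernel_args() {
  cmdline=" $(cat "$1") "     # pad with spaces so whole-word matching works
  shift
  missing=0
  for arg in "$@"; do
    case "$cmdline" in
      *" $arg "*) ;;
      *) echo "missing: $arg"; missing=1 ;;
    esac
  done
  return "$missing"
}

# Usage sketch on a provisioned node (placeholders as in kernel_arguments):
#   check_kernel_args /proc/cmdline intel_iommu=on iommu=pt idle=poll \
#     hugepagesz=1G hugepages=40 "nohz_full=${ISOLATED_CPU_CORES}"
```

Two other files on the node give complementary views. /sys/devices/system/cpu/isolated lists the isolated CPUs, and `grep HugePages_ /proc/meminfo` shows the reserved huge pages.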

With all these changes mentioned, the RKE2ControlPlane block in the capi-provisioning-example.yaml will look like the following:

apiVersion: controlplane.cluster.x-k8s.io/v1beta1
kind: RKE2ControlPlane
metadata:
  name: single-node-cluster
  namespace: default
spec:
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: Metal3MachineTemplate
    name: single-node-cluster-controlplane
  replicas: 1
  version: ${RKE2_VERSION}
  rolloutStrategy:
    type: "RollingUpdate"
    rollingUpdate:
      maxSurge: 0
  serverConfig:
    cni: calico
    cniMultusEnable: true
  preRKE2Commands:
    - modprobe vfio-pci enable_sriov=1 disable_idle_d3=1
  agentConfig:
    format: ignition
    additionalUserData:
      config: |
        variant: fcos
        version: 1.4.0
        storage:
          files:
            - path: /var/lib/rancher/rke2/server/manifests/configmap-sriov-custom-auto.yaml
              overwrite: true
              contents:
                inline: |
                  apiVersion: v1
                  kind: ConfigMap
                  metadata:
                    name: sriov-custom-auto-config
                    namespace: kube-system
                  data:
                    config.json: |
                      [
                        {
                          "resourceName": "${RESOURCE_NAME1}",
                          "interface": "${SRIOV-NIC-NAME1}",
                          "pfname": "${PF_NAME1}",
                          "driver": "${DRIVER_NAME1}",
                          "numVFsToCreate": ${NUM_VFS1}
                        },
                        {
                          "resourceName": "${RESOURCE_NAME2}",
                          "interface": "${SRIOV-NIC-NAME2}",
                          "pfname": "${PF_NAME2}",
                          "driver": "${DRIVER_NAME2}",
                          "numVFsToCreate": ${NUM_VFS2}
                        }
                      ]
              mode: 0644
              user:
                name: root
              group:
                name: root
            - path: /var/lib/rancher/rke2/server/manifests/sriov-crd.yaml
              overwrite: true
              contents:
                inline: |
                  apiVersion: helm.cattle.io/v1
                  kind: HelmChart
                  metadata:
                    name: sriov-crd
                    namespace: kube-system
                  spec:
                    chart: oci://registry.suse.com/edge/charts/sriov-crd
                    targetNamespace: sriov-network-operator
                    version: 305.0.4+up1.6.0
                    createNamespace: true
            - path: /var/lib/rancher/rke2/server/manifests/sriov-network-operator.yaml
              overwrite: true
              contents:
                inline: |
                  apiVersion: helm.cattle.io/v1
                  kind: HelmChart
                  metadata:
                    name: sriov-network-operator
                    namespace: kube-system
                  spec:
                    chart: oci://registry.suse.com/edge/charts/sriov-network-operator
                    targetNamespace: sriov-network-operator
                    version: 305.0.4+up1.6.0
                    createNamespace: true
        kernel_arguments:
          should_exist:
            - intel_iommu=on
            - iommu=pt
            - idle=poll
            - mce=off
            - hugepagesz=1G hugepages=40
            - hugepagesz=2M hugepages=0
            - default_hugepagesz=1G
            - irqaffinity=${NON-ISOLATED_CPU_CORES}
            - isolcpus=domain,nohz,managed_irq,${ISOLATED_CPU_CORES}
            - nohz_full=${ISOLATED_CPU_CORES}
            - rcu_nocbs=${ISOLATED_CPU_CORES}
            - rcu_nocb_poll
            - nosoftlockup
            - nowatchdog
            - nohz=on
            - nmi_watchdog=0
            - skew_tick=1
            - quiet
        systemd:
          units:
            - name: rke2-preinstall.service
              enabled: true
              contents: |
                [Unit]
                Description=rke2-preinstall
                Wants=network-online.target
                Before=rke2-install.service
                ConditionPathExists=!/run/cluster-api/bootstrap-success.complete
                [Service]
                Type=oneshot
                User=root
                ExecStartPre=/bin/sh -c "mount -L config-2 /mnt"
                ExecStart=/bin/sh -c "sed -i \"s/BAREMETALHOST_UUID/$(jq -r .uuid /mnt/openstack/latest/meta_data.json)/\" /etc/rancher/rke2/config.yaml"
                ExecStart=/bin/sh -c "echo \"node-name: $(jq -r .name /mnt/openstack/latest/meta_data.json)\" >> /etc/rancher/rke2/config.yaml"
                ExecStartPost=/bin/sh -c "umount /mnt"
                [Install]
                WantedBy=multi-user.target
            - name: cpu-partitioning.service
              enabled: true
              contents: |
                [Unit]
                Description=cpu-partitioning
                Wants=network-online.target
                After=network.target network-online.target
                [Service]
                Type=oneshot
                User=root
                ExecStart=/bin/sh -c "echo isolated_cores=${ISOLATED_CPU_CORES} > /etc/tuned/cpu-partitioning-variables.conf"
                ExecStartPost=/bin/sh -c "tuned-adm profile cpu-partitioning"
                ExecStartPost=/bin/sh -c "systemctl enable tuned.service"
                [Install]
                WantedBy=multi-user.target
            - name: performance-settings.service
              enabled: true
              contents: |
                [Unit]
                Description=performance-settings
                Wants=network-online.target
                After=network.target network-online.target cpu-partitioning.service
                [Service]
                Type=oneshot
                User=root
                ExecStart=/bin/sh -c "/opt/performance-settings/performance-settings.sh"
                [Install]
                WantedBy=multi-user.target
            - name: sriov-custom-auto-vfs.service
              enabled: true
              contents: |
                [Unit]
                Description=SRIOV Custom Auto VF Creation
                Wants=network-online.target rke2-server.target
                After=network.target network-online.target rke2-server.target

[Service]

User=root

Type=forking

TimeoutStartSec=900

ExecStart=/bin/sh -c "while ! /var/lib/rancher/rke2/bin/kubectl --kubeconfig=/etc/rancher/rke2/rke2.yaml wait --for condition=ready nodes --all ; do sleep 2 ; done"

ExecStartPost=/bin/sh -c "while [ $(/var/lib/rancher/rke2/bin/kubectl --kubeconfig=/etc/rancher/rke2/rke2.yaml get sriovnetworknodestates.sriovnetwork.openshift.io --ignore-not-found --no-headers -A | wc -l) -eq 0 ]; do sleep 1; done"

ExecStartPost=/bin/sh -c "/opt/sriov/sriov-auto-filler.sh"

RemainAfterExit=yes

KillMode=process

[Install]

WantedBy=multi-user.target

kubelet:

extraArgs:

- provider-id=metal3://BAREMETALHOST_UUID

nodeName: "localhost.localdomain"Once the file is created by joining the previous blocks, the following command must be executed in the management cluster to start provisioning the new downstream cluster using the Telco features:

$ kubectl apply -f capi-provisioning-example.yaml
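The kernel arguments in the example reference two placeholders, ${ISOLATED_CPU_CORES} and ${NON-ISOLATED_CPU_CORES}. As their names suggest, they are expected to split the host CPUs into cores reserved for the workloads and housekeeping cores that handle interrupts. The following Python sketch, assuming CPUs numbered contiguously from 0, derives the housekeeping set from the isolated set and renders the matching arguments. The function names are hypothetical helpers, not part of any SUSE tooling.

```python
# Hypothetical helpers: derive the CPU-partitioning kernel arguments used in the
# example, assuming the isolated and non-isolated sets partition all host CPUs.


def parse_cpu_list(spec: str) -> set[int]:
    """Parse a kernel-style CPU list such as "1-3,8" into {1, 2, 3, 8}."""
    cpus: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start, end = (int(x) for x in part.split("-", 1))
            cpus.update(range(start, end + 1))
        else:
            cpus.add(int(part))
    return cpus


def format_cpu_list(cpus: set[int]) -> str:
    """Format a CPU set as compact ranges, e.g. {0, 1, 2, 5} -> "0-2,5"."""
    ordered = sorted(cpus)
    ranges: list[str] = []
    i = 0
    while i < len(ordered):
        start = ordered[i]
        # Extend the current range while the next CPU is consecutive.
        while i + 1 < len(ordered) and ordered[i + 1] == ordered[i] + 1:
            i += 1
        end = ordered[i]
        ranges.append(str(start) if start == end else f"{start}-{end}")
        i += 1
    return ",".join(ranges)


def cpu_partition_args(total_cpus: int, isolated: str) -> list[str]:
    """Render the CPU-related kernel arguments from the example for one host."""
    all_cpus = set(range(total_cpus))
    isolated_set = parse_cpu_list(isolated)
    if not isolated_set <= all_cpus:
        raise ValueError("isolated CPUs fall outside the host's CPU range")
    housekeeping = all_cpus - isolated_set
    if not housekeeping:
        raise ValueError("at least one CPU must remain for housekeeping")
    iso = format_cpu_list(isolated_set)
    return [
        f"irqaffinity={format_cpu_list(housekeeping)}",  # ${NON-ISOLATED_CPU_CORES}
        f"isolcpus=domain,nohz,managed_irq,{iso}",       # ${ISOLATED_CPU_CORES}
        f"nohz_full={iso}",
        f"rcu_nocbs={iso}",
    ]


if __name__ == "__main__":
    for arg in cpu_partition_args(total_cpus=8, isolated="2-7"):
        print(arg)
```

For an 8-CPU host with cores 2-7 isolated, the sketch yields irqaffinity=0-1, with isolcpus, nohz_full and rcu_nocbs all set to 2-7. In the same argument list, hugepagesz=1G hugepages=40 reserves 40 x 1 GiB = 40 GiB of memory as huge pages at boot, so the host needs enough RAM to cover that reservation in addition to everything else it runs.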

43.8 Private registry

It is possible to configure a private registry as a mirror for the container images used by workloads.

To do this, create the secrets that hold the certificates and credentials of the private registry used by the downstream cluster:

apiVersion: v1
kind: Secret
metadata:
  name: private-registry-cert
  namespace: default
data:
  tls.crt: ${TLS_CERTIFICATE}
  tls.key: ${TLS_KEY}
  ca.crt: ${CA_CERTIFICATE}
type: kubernetes.io/tls
---
apiVersion: v1
kind: Secret
metadata:
  name: private-registry-auth
  namespace: default
data:
  username: ${REGISTRY_USERNAME}
  password: ${REGISTRY_PASSWORD}

The tls.crt, tls.key and ca.crt entries are the certificates used for the TLS connection to the private registry. The username and password entries are the credentials used to authenticate against the private registry.

The tls.crt, tls.key, ca.crt, username and password values must be base64-encoded before they are used in the secrets.
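A minimal Python sketch of the encoding step follows. It assumes the certificate, key and CA contents are already available as strings (placeholders here; in practice they would be read from files) and renders both secrets as a JSON v1 List, which kubectl apply also accepts. The function names are illustrative only.

```python
import base64
import json


def b64(value: str) -> str:
    """Base64-encode a UTF-8 string, as required for Secret `data` fields."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def registry_secrets(tls_crt: str, tls_key: str, ca_crt: str,
                     username: str, password: str,
                     namespace: str = "default") -> list[dict]:
    """Build the private-registry-cert and private-registry-auth Secrets."""
    cert_secret = {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "private-registry-cert", "namespace": namespace},
        "type": "kubernetes.io/tls",
        "data": {
            "tls.crt": b64(tls_crt),
            "tls.key": b64(tls_key),
            "ca.crt": b64(ca_crt),
        },
    }
    auth_secret = {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "private-registry-auth", "namespace": namespace},
        "data": {"username": b64(username), "password": b64(password)},
    }
    return [cert_secret, auth_secret]


if __name__ == "__main__":
    # Placeholder values; replace them with the real PEM contents and credentials.
    items = registry_secrets("<cert PEM>", "<key PEM>", "<CA PEM>", "admin", "s3cret")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

If you encode the values from a shell instead, use echo -n or printf so that a trailing newline is not encoded into the value.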

With these changes, the RKE2ControlPlane block in the capi-provisioning-example.yaml file looks like the following:

apiVersion: controlplane.cluster.x-k8s.io/v1beta1
kind: RKE2ControlPlane
metadata:
  name: single-node-cluster
  namespace: default
spec:
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: Metal3MachineTemplate
    name: single-node-cluster-controlplane
  replicas: 1
  version: ${RKE2_VERSION}
  rolloutStrategy:
    type: "RollingUpdate"
    rollingUpdate:
      maxSurge: 0
  privateRegistriesConfig:
    mirrors:
      "registry.example.com":
        endpoint:
          - "https://registry.example.com:5000"
    configs:
      "registry.example.com":
        authSecret:
          apiVersion: v1
          kind: Secret
          namespace: default
          name: private-registry-auth
        tls:
          tlsConfigSecret:
            apiVersion: v1
            kind: Secret
            namespace: default
            name: private-registry-cert
  serverConfig:
    cni: calico
    cniMultusEnable: true
  agentConfig:
    format: ignition
    additionalUserData:
      config: |
        variant: fcos
        version: 1.4.0
        systemd:
          units:
            - name: rke2-preinstall.service
              enabled: true
              contents: |
                [Unit]
                Description=rke2-preinstall
                Wants=network-online.target
                Before=rke2-install.service
                ConditionPathExists=!/run/cluster-api/bootstrap-success.complete
                [Service]
                Type=oneshot
                User=root
                ExecStartPre=/bin/sh -c "mount -L config-2 /mnt"
                ExecStart=/bin/sh -c "sed -i \"s/BAREMETALHOST_UUID/$(jq -r .uuid /mnt/openstack/latest/meta_data.json)/\" /etc/rancher/rke2/config.yaml"
                ExecStart=/bin/sh -c "echo \"node-name: $(jq -r .name /mnt/openstack/latest/meta_data.json)\" >> /etc/rancher/rke2/config.yaml"
                ExecStartPost=/bin/sh -c "umount /mnt"
                [Install]
                WantedBy=multi-user.target
    kubelet:
      extraArgs:
        - provider-id=metal3://BAREMETALHOST_UUID
    nodeName: "localhost.localdomain"

Here, registry.example.com is an example name for the private registry used by the downstream cluster. Replace it, together with the endpoint URL, with the real values.
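The mirrors map is keyed by registry host name. To illustrate that lookup, the sketch below extracts the registry host from an image reference using simplified Docker-style rules (a first path component containing a dot or a colon, or equal to localhost, is treated as the registry; anything else defaults to docker.io) and looks it up in a map that copies the privateRegistriesConfig.mirrors entry above. It is an approximation for illustration only: it does not model the container runtime's full reference parsing or any fallback behavior, and the image names are hypothetical.

```python
# Copy of the privateRegistriesConfig.mirrors entry from the example above.
MIRRORS: dict[str, list[str]] = {
    "registry.example.com": ["https://registry.example.com:5000"],
}


def registry_host(image: str) -> str:
    """Return the registry host of an image reference (simplified rules)."""
    first, sep, _ = image.partition("/")
    # Only a first component that looks like a host name is treated as a registry.
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return "docker.io"


def mirror_endpoints(image: str, mirrors: dict[str, list[str]]) -> list[str]:
    """Endpoints configured for the image's registry; empty if it is not mirrored."""
    return mirrors.get(registry_host(image), [])


if __name__ == "__main__":
    for ref in ("registry.example.com/edge/app:1.0", "nginx:latest"):
        print(ref, "->", mirror_endpoints(ref, MIRRORS) or "no mirror configured")
```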

43.9 Downstream cluster provisioning in air-gapped scenarios

The directed network provisioning workflow can also automate the provisioning of downstream clusters in air-gapped scenarios.

43.9.1 Requirements for air-gapped scenarios

The raw image generated using EIB must include the specific container images (Helm chart OCI images and container images) required to run the downstream cluster in an air-gapped scenario. For more information, refer to Section 43.3, “Prepare downstream cluster image for air-gap scenarios”.

If you use SR-IOV or any other custom workload, the images required to run the workloads must be preloaded into your private registry, as described in Section 43.3.2.7, “Preload your private registry with images required for air-gap scenarios and SR-IOV (optional)”.
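Before provisioning, it can help to check which of the required images are still missing from the private registry. The sketch below assumes that each image keeps its repository path and tag when it is copied into the private registry, and that you already have the list of required images and the registry's current inventory (how you obtain them is out of scope here). The registry host, the image names and the helper functions are all hypothetical.

```python
def to_private(image: str, private_host: str) -> str:
    """Re-home a fully qualified image reference onto the private registry host."""
    _, sep, path = image.partition("/")
    if not sep:
        raise ValueError(f"expected a fully qualified image reference: {image}")
    return f"{private_host}/{path}"


def missing_images(required: list[str], available: set[str], private_host: str) -> list[str]:
    """Private-registry references of required images not yet in the inventory."""
    wanted = [to_private(image, private_host) for image in required]
    return [ref for ref in wanted if ref not in available]


if __name__ == "__main__":
    required = [
        "registry.suse.com/example/workload-a:1.0",  # hypothetical image
        "registry.suse.com/example/workload-b:2.0",  # hypothetical image
    ]
    inventory = {"registry.example.com:5000/example/workload-a:1.0"}
    for ref in missing_images(required, inventory, "registry.example.com:5000"):
        print("missing:", ref)
```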

43.9.2 Enroll the bare-metal hosts in air-gap scenarios

The process to enroll the bare-metal hosts in the management cluster is the same as described in Section 43.4, “Downstream cluster provisioning with Directed network provisioning (single-node)”.

43.9.3 Provision the downstream cluster in air-gap scenarios

The following changes are required to provision the downstream cluster in air-gapped scenarios:

The RKE2ControlPlane block in the capi-provisioning-airgap-example.yaml file must include the spec.agentConfig.airGapped: true directive.

The private registry configuration must be included in the RKE2ControlPlane block in the capi-provisioning-airgap-example.yaml file, as described in Section 43.8, “Private registry”.

If you use SR-IOV or any other AdditionalUserData configuration (combustion script) that requires a Helm chart installation, you must modify the content to reference the private registry instead of the public registry.
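The requirements above can be checked mechanically before the manifest is applied. The following sketch works on an RKE2ControlPlane manifest that has already been parsed into Python dictionaries (for example with a YAML library of your choice). It checks only the three listed points, assumes the public charts are referenced with the oci://registry.suse.com/ prefix as in the SR-IOV example of the previous section, and is not an exhaustive validation. The function name is hypothetical.

```python
# Prefix of the public chart references used in the connected SR-IOV example.
PUBLIC_CHART_PREFIX = "oci://registry.suse.com/"


def airgap_problems(manifest: dict) -> list[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    spec = manifest.get("spec", {})
    agent = spec.get("agentConfig", {})
    problems: list[str] = []

    # Requirement 1: the air-gapped directive must be set explicitly.
    if agent.get("airGapped") is not True:
        problems.append("spec.agentConfig.airGapped must be true")

    # Requirement 2: a private registry mirror must be configured.
    if not spec.get("privateRegistriesConfig", {}).get("mirrors"):
        problems.append("spec.privateRegistriesConfig.mirrors is missing or empty")

    # Requirement 3: Helm charts in the user data must not use the public registry.
    user_data = agent.get("additionalUserData", {}).get("config", "")
    if PUBLIC_CHART_PREFIX in user_data:
        problems.append("additionalUserData still references " + PUBLIC_CHART_PREFIX)

    return problems


if __name__ == "__main__":
    manifest = {
        "kind": "RKE2ControlPlane",
        "spec": {
            "privateRegistriesConfig": {
                "mirrors": {"registry.example.com": {"endpoint": ["https://registry.example.com:5000"]}},
            },
            "agentConfig": {
                "airGapped": True,
                "additionalUserData": {
                    "config": "chart: oci://registry.example.com/edge/charts/sriov-crd\n",
                },
            },
        },
    }
    print(airgap_problems(manifest) or "all air-gap checks passed")
```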

The following example shows the SR-IOV configuration in the AdditionalUserData block of the capi-provisioning-airgap-example.yaml file, with the modifications required to reference the private registry. The relevant changes are the references to the private registry secrets and the Helm chart definitions that use the private registry instead of the public OCI images:

# secret to include the private registry certificates
apiVersion: v1
kind: Secret
metadata:
  name: private-registry-cert
  namespace: default
data:
  tls.crt: ${TLS_BASE64_CERT}
  tls.key: ${TLS_BASE64_KEY}
  ca.crt: ${CA_BASE64_CERT}
type: kubernetes.io/tls
---
# secret to include the private registry auth credentials
apiVersion: v1
kind: Secret
metadata:
  name: private-registry-auth
  namespace: default
data:
  username: ${REGISTRY_USERNAME}
  password: ${REGISTRY_PASSWORD}
---
apiVersion: controlplane.cluster.x-k8s.io/v1beta1
kind: RKE2ControlPlane
metadata:
  name: single-node-cluster
  namespace: default
spec:
  infrastructureRef:
    apiVersion: infrastructure.cluster.x-k8s.io/v1beta1
    kind: Metal3MachineTemplate
    name: single-node-cluster-controlplane
  replicas: 1
  version: ${RKE2_VERSION}
  rolloutStrategy:
    type: "RollingUpdate"
    rollingUpdate:
      maxSurge: 0