20 Edge Virtualization #

This section describes how you can use Edge Virtualization to run virtual machines on your edge nodes. Edge Virtualization is designed for lightweight virtualization use cases where a common workflow is expected for deploying and managing both virtualized and containerized applications.

SUSE Edge Virtualization supports two methods of running virtual machines:

Deploying the virtual machines manually via libvirt+qemu-kvm at the host level (where Kubernetes is not involved)

Deploying the KubeVirt operator for Kubernetes-based management of virtual machines

Both options are valid, but only the second one is covered below. If you want to use the standard out-of-the-box virtualization mechanisms provided by SUSE Linux Micro, a comprehensive guide can be found here. Although it was primarily written for SUSE Linux Enterprise Server, the concepts are almost identical.

This guide initially explains how to deploy the additional virtualization components onto a system that has already been pre-deployed, but follows with a section that describes how to embed this configuration in the initial deployment via Edge Image Builder. If you do not want to run through the basics and set things up manually, skip right ahead to that section.

20.1 KubeVirt overview #

KubeVirt allows for managing Virtual Machines with Kubernetes alongside the rest of your containerized workloads. It does this by running the user space portion of the Linux virtualization stack in a container. This minimizes the requirements on the host system, allowing for easier setup and management.

Details about KubeVirt’s architecture can be found in the upstream documentation.

20.2 Prerequisites #

If you are following this guide, we assume you have the following already available:

At least one physical host with SUSE Linux Micro 6.2 installed, and with virtualization extensions enabled in the BIOS (see here for details).

Across your nodes, a K3s/RKE2 Kubernetes cluster already deployed, with an appropriate kubeconfig that enables superuser access to the cluster.

Access to the root user. These instructions assume you are the root user, and not escalating your privileges via sudo.

Helm available locally, with an adequate network connection to push configurations to your Kubernetes cluster and download the required images.
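The first prerequisite can be checked from a shell before going further. The following is a minimal sketch, assuming an x86_64 host where the CPU flag is `vmx` (Intel) or `svm` (AMD); the function name and its two optional path arguments are hypothetical and exist only to make the check easy to try out.

```shell
# Report whether hardware virtualization appears usable on this host.
# Assumes an x86_64 host: looks for the Intel (vmx) or AMD (svm) CPU flag.
# Both arguments are optional, hypothetical overrides of the default paths.
check_virt_support() {
  cpuinfo="${1:-/proc/cpuinfo}"
  kvm_dev="${2:-/dev/kvm}"

  if ! grep -Eqw 'vmx|svm' "$cpuinfo" 2>/dev/null; then
    echo "no vmx/svm CPU flag found: enable virtualization in the BIOS"
    return 1
  fi
  if [ ! -e "$kvm_dev" ]; then
    echo "CPU flag present but $kvm_dev is missing: check that the kvm modules are loaded"
    return 1
  fi
  echo "virtualization extensions available"
}
```

Running `check_virt_support` without arguments inspects the local host.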

20.3 Manual installation of Edge Virtualization #

This guide does not walk you through the deployment of Kubernetes. It assumes that you have installed the SUSE Edge-appropriate version of either K3s or RKE2 and that your kubeconfig is configured so that standard kubectl commands can be executed as the superuser. We assume your node forms a single-node cluster, although no significant differences are expected for multi-node deployments.

SUSE Edge Virtualization is deployed via three separate Helm charts, specifically:

KubeVirt: The core virtualization components, that is, Kubernetes CRDs, operators and other components required for enabling Kubernetes to deploy and manage virtual machines.

KubeVirt Dashboard Extension: An optional Rancher UI extension that allows basic virtual machine management, for example, starting/stopping of virtual machines as well as accessing the console.

Containerized Data Importer (CDI): An additional component that enables persistent-storage integration for KubeVirt, providing capabilities for virtual machines to use existing Kubernetes storage back-ends for data, but also allowing users to import or clone data volumes for virtual machines.

Each of these Helm charts is versioned according to the SUSE Edge release you are currently using. For production/supported usage, employ the artifacts that can be found in the SUSE Registry.

First, ensure that your kubectl access is working:

$ kubectl get nodes

This should show something similar to the following:

NAME                   STATUS   ROLES                       AGE     VERSION
node1.edge.rdo.wales   Ready    control-plane,etcd,master   4h20m   v1.30.5+rke2r1
node2.edge.rdo.wales   Ready    control-plane,etcd,master   4h15m   v1.30.5+rke2r1
node3.edge.rdo.wales   Ready    control-plane,etcd,master   4h15m   v1.30.5+rke2r1

Now you can proceed to install the KubeVirt and Containerized Data Importer (CDI) Helm charts:

$ helm install kubevirt oci://registry.suse.com/edge/charts/kubevirt --namespace kubevirt-system --create-namespace
$ helm install cdi oci://registry.suse.com/edge/charts/cdi --namespace cdi-system --create-namespace

In a few minutes, you should have all KubeVirt and CDI components deployed. You can validate this by checking all the deployed resources in the kubevirt-system and cdi-system namespaces.

Verify KubeVirt resources:

$ kubectl get all -n kubevirt-system

This should show something similar to the following:

NAME                                   READY   STATUS    RESTARTS      AGE
pod/virt-operator-5fbcf48d58-p7xpm     1/1     Running   0             2m24s
pod/virt-operator-5fbcf48d58-wnf6s     1/1     Running   0             2m24s
pod/virt-handler-t594x                 1/1     Running   0             93s
pod/virt-controller-5f84c69884-cwjvd   1/1     Running   1 (64s ago)   93s
pod/virt-controller-5f84c69884-xxw6q   1/1     Running   1 (64s ago)   93s
pod/virt-api-7dfc54cf95-v8kcl          1/1     Running   1 (59s ago)   118s

NAME                                  TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/kubevirt-prometheus-metrics   ClusterIP   None            <none>        443/TCP   2m1s
service/virt-api                      ClusterIP   10.43.56.140    <none>        443/TCP   2m1s
service/kubevirt-operator-webhook     ClusterIP   10.43.201.121   <none>        443/TCP   2m1s
service/virt-exportproxy              ClusterIP   10.43.83.23     <none>        443/TCP   2m1s

NAME                          DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR            AGE
daemonset.apps/virt-handler   1         1         1       1            1           kubernetes.io/os=linux   93s

NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/virt-operator     2/2     2            2           2m24s
deployment.apps/virt-controller   2/2     2            2           93s
deployment.apps/virt-api          1/1     1            1           118s

NAME                                         DESIRED   CURRENT   READY   AGE
replicaset.apps/virt-operator-5fbcf48d58     2         2         2       2m24s
replicaset.apps/virt-controller-5f84c69884   2         2         2       93s
replicaset.apps/virt-api-7dfc54cf95          1         1         1       118s

NAME                            AGE     PHASE
kubevirt.kubevirt.io/kubevirt   2m24s   Deployed

Verify CDI resources:

$ kubectl get all -n cdi-system

This should show something similar to the following:

NAME                                   READY   STATUS    RESTARTS   AGE
pod/cdi-operator-55c74f4b86-692xb      1/1     Running   0          2m24s
pod/cdi-apiserver-db465b888-62lvr      1/1     Running   0          2m21s
pod/cdi-deployment-56c7d74995-mgkfn    1/1     Running   0          2m21s
pod/cdi-uploadproxy-7d7b94b968-6kxc2   1/1     Running   0          2m22s

NAME                             TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
service/cdi-uploadproxy          ClusterIP   10.43.117.7    <none>        443/TCP    2m22s
service/cdi-api                  ClusterIP   10.43.20.101   <none>        443/TCP    2m22s
service/cdi-prometheus-metrics   ClusterIP   10.43.39.153   <none>        8080/TCP   2m21s

NAME                              READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/cdi-operator      1/1     1            1           2m24s
deployment.apps/cdi-apiserver     1/1     1            1           2m22s
deployment.apps/cdi-deployment    1/1     1            1           2m21s
deployment.apps/cdi-uploadproxy   1/1     1            1           2m22s

NAME                                         DESIRED   CURRENT   READY   AGE
replicaset.apps/cdi-operator-55c74f4b86      1         1         1       2m24s
replicaset.apps/cdi-apiserver-db465b888      1         1         1       2m21s
replicaset.apps/cdi-deployment-56c7d74995    1         1         1       2m21s
replicaset.apps/cdi-uploadproxy-7d7b94b968   1         1         1       2m22s
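Instead of re-running `kubectl get all` until everything is up, the checks can be scripted with `kubectl wait`. This is a minimal sketch, assuming the namespaces used in this guide and the `kubevirt` resource name shown in the KubeVirt output; the function name and the 300s default timeout are illustrative assumptions.

```shell
# Block until the KubeVirt custom resource and the CDI deployments are Available.
# Namespaces and the "kubevirt" resource name follow this guide's install steps;
# the 300s default timeout is an arbitrary assumption.
wait_for_edge_virtualization() {
  timeout="${1:-300s}"
  kubectl -n kubevirt-system wait kubevirt.kubevirt.io/kubevirt \
    --for=condition=Available --timeout="$timeout" &&
  kubectl -n cdi-system wait deployment --all \
    --for=condition=Available --timeout="$timeout"
}
```

Calling `wait_for_edge_virtualization 600s` returns a non-zero exit status if either check times out, which makes it usable in provisioning scripts.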

To verify that the VirtualMachine custom resource definitions (CRDs) are deployed, you can validate with:

$ kubectl explain virtualmachine

This should print out the definition of the VirtualMachine object, as follows:

GROUP:      kubevirt.io
KIND:       VirtualMachine
VERSION:    v1

DESCRIPTION:
    VirtualMachine handles the VirtualMachines that are not running or are in a
    stopped state The VirtualMachine contains the template to create the
    VirtualMachineInstance. It also mirrors the running state of the created
    VirtualMachineInstance in its status.
(snip)

20.4 Deploying virtual machines #

Now that KubeVirt and CDI are deployed, let us define a simple virtual machine based on openSUSE Tumbleweed. This virtual machine has the simplest of configurations, using standard "pod networking" for a networking configuration identical to any other pod. It also employs non-persistent storage, so the storage is ephemeral, just like in any container that does not have a PVC.

$ kubectl apply -f - <<EOF
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: tumbleweed
  namespace: default
spec:
  runStrategy: Always
  template:
    spec:
      domain:
        devices: {}
        machine:
          type: q35
        memory:
          guest: 2Gi
        resources: {}
      volumes:
      - containerDisk:
          image: quay.io/containerdisks/opensuse-tumbleweed:1.0.0
        name: tumbleweed-containerdisk-0
      - cloudInitNoCloud:
          userDataBase64: I2Nsb3VkLWNvbmZpZwpkaXNhYmxlX3Jvb3Q6IGZhbHNlCnNzaF9wd2F1dGg6IFRydWUKdXNlcnM6CiAgLSBkZWZhdWx0CiAgLSBuYW1lOiBzdXNlCiAgICBncm91cHM6IHN1ZG8KICAgIHNoZWxsOiAvYmluL2Jhc2gKICAgIHN1ZG86ICBBTEw9KEFMTCkgTk9QQVNTV0Q6QUxMCiAgICBsb2NrX3Bhc3N3ZDogRmFsc2UKICAgIHBsYWluX3RleHRfcGFzc3dkOiAnc3VzZScK
        name: cloudinitdisk
EOF

This should print that a VirtualMachine was created:

virtualmachine.kubevirt.io/tumbleweed created

This VirtualMachine definition is minimal, specifying little about the configuration. It simply outlines that it is a machine type "q35" with 2 GB of memory that uses a disk image based on an ephemeral containerDisk (that is, a disk image that is stored in a container image from a remote image repository), and specifies a base64 encoded cloudInit disk, which we only use for user creation and password enforcement at boot time (use base64 -d to decode it).
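To see exactly what the cloud-init disk configures, the value can be decoded locally. The sketch below copies the `userDataBase64` string from the definition above into a shell variable (the variable name is an arbitrary choice) and decodes it.

```shell
# The userDataBase64 value from the VirtualMachine definition in this section.
USER_DATA_B64='I2Nsb3VkLWNvbmZpZwpkaXNhYmxlX3Jvb3Q6IGZhbHNlCnNzaF9wd2F1dGg6IFRydWUKdXNlcnM6CiAgLSBkZWZhdWx0CiAgLSBuYW1lOiBzdXNlCiAgICBncm91cHM6IHN1ZG8KICAgIHNoZWxsOiAvYmluL2Jhc2gKICAgIHN1ZG86ICBBTEw9KEFMTCkgTk9QQVNTV0Q6QUxMCiAgICBsb2NrX3Bhc3N3ZDogRmFsc2UKICAgIHBsYWluX3RleHRfcGFzc3dkOiAnc3VzZScK'

# Decode it to inspect the cloud-config that is applied at first boot.
printf '%s' "$USER_DATA_B64" | base64 -d
```

The decoded text starts with `#cloud-config` and defines the `suse` user with a plain-text password, which is why this definition is suitable for testing only.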

Note: This virtual machine image is only for testing. The image is not officially supported and is only meant as a documentation example.

This machine takes a few minutes to boot as it needs to download the openSUSE Tumbleweed disk image, but once it has done so, you can view further details about the virtual machine by checking the virtual machine information:

$ kubectl get vmi

This should print the node that the virtual machine was started on, and the IP address of the virtual machine. Remember, since it uses pod networking, the reported IP address will be just like any other pod, and routable as such:

NAME         AGE     PHASE     IP           NODENAME               READY
tumbleweed   4m24s   Running   10.42.2.98   node3.edge.rdo.wales   True
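When scripting, the address can be read from the VirtualMachineInstance status rather than copied from the table. This is a minimal sketch, assuming the first entry under `status.interfaces` is the pod network interface; the helper name `vmi_ip` is hypothetical.

```shell
# Print the first IP address reported in the status of a VirtualMachineInstance.
# Arguments: VMI name, then an optional namespace (defaults to "default").
vmi_ip() {
  kubectl get vmi "$1" -n "${2:-default}" \
    -o jsonpath='{.status.interfaces[0].ipAddress}'
}
# Example: ssh "suse@$(vmi_ip tumbleweed)"
```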

When running these commands on the Kubernetes cluster nodes themselves, with a CNI that routes traffic directly to pods (for example, Cilium), you should be able to ssh directly to the machine itself. Substitute the following IP address with the one that was assigned to your virtual machine:

$ ssh suse@10.42.2.98
(password is "suse")

Once you are in this virtual machine, you can explore it, but remember that it is limited in terms of resources and only has 1 GB of disk space. When you are finished, press Ctrl-D or type exit to disconnect from the SSH session.

The virtual machine process is still wrapped in a standard Kubernetes pod. The VirtualMachine CRD is a representation of the desired virtual machine, but the process in which the virtual machine is actually started is via the virt-launcher pod, a standard Kubernetes pod, just like any other application. For every virtual machine started, you can see there is a virt-launcher pod:

$ kubectl get pods

This should then show the one virt-launcher pod for the Tumbleweed machine that we have defined:

NAME                             READY   STATUS    RESTARTS   AGE
virt-launcher-tumbleweed-8gcn4   3/3     Running   0          10m

If you look inside this virt-launcher pod, you will see that it is executing libvirt and qemu-kvm processes. You can enter the pod itself and look under the covers, adapting the following command to your pod name:

$ kubectl exec -it virt-launcher-tumbleweed-8gcn4 -- bash
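Because the pod name carries a random suffix, it can be convenient to look it up by label. The following is a sketch under the assumption that virt-launcher pods carry the `kubevirt.io=virt-launcher` and `vm.kubevirt.io/name=<vm name>` labels; verify this on your cluster with `kubectl get pods --show-labels`. The helper name is hypothetical.

```shell
# Print the name of the virt-launcher pod that backs a given virtual machine.
# Assumes the launcher pod carries the vm.kubevirt.io/name=<vm> label.
# Arguments: VM name, then an optional namespace (defaults to "default").
launcher_pod() {
  kubectl get pods -n "${2:-default}" \
    -l "kubevirt.io=virt-launcher,vm.kubevirt.io/name=$1" \
    -o jsonpath='{.items[0].metadata.name}'
}
# Example: kubectl exec -it "$(launcher_pod tumbleweed)" -- bash
```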

Once you are in the pod, try running virsh commands along with looking at the processes. You will see the qemu-system-x86_64 binary running, along with certain processes for monitoring the virtual machine. You will also see the location of the disk image and how the networking is plugged (as a tap device):

qemu@tumbleweed:/> ps ax

PID TTY STAT TIME COMMAND

1 ? Ssl 0:00 /usr/bin/virt-launcher-monitor --qemu-timeout 269s --name tumbleweed --uid b9655c11-38f7-4fa8-8f5d-bfe987dab42c --namespace default --kubevirt-share-dir /var/run/kubevirt --ephemeral-disk-dir /var/run/kubevirt-ephemeral-disks --container-disk-dir /var/run/kube

12 ? Sl 0:01 /usr/bin/virt-launcher --qemu-timeout 269s --name tumbleweed --uid b9655c11-38f7-4fa8-8f5d-bfe987dab42c --namespace default --kubevirt-share-dir /var/run/kubevirt --ephemeral-disk-dir /var/run/kubevirt-ephemeral-disks --container-disk-dir /var/run/kubevirt/con

24 ? Sl 0:00 /usr/sbin/virtlogd -f /etc/libvirt/virtlogd.conf

25 ? Sl 0:01 /usr/sbin/virtqemud -f /var/run/libvirt/virtqemud.conf

83 ? Sl 0:31 /usr/bin/qemu-system-x86_64 -name guest=default_tumbleweed,debug-threads=on -S -object {"qom-type":"secret","id":"masterKey0","format":"raw","file":"/var/run/kubevirt-private/libvirt/qemu/lib/domain-1-default_tumbleweed/master-key.aes"} -machine pc-q35-7.1,usb

286 pts/0 Ss 0:00 bash

320 pts/0 R+ 0:00 ps ax

qemu@tumbleweed:/> virsh list --all

Id Name State

------------------------------------

1 default_tumbleweed running

qemu@tumbleweed:/> virsh domblklist 1

Target Source

---------------------------------------------------------------------------------------------

sda /var/run/kubevirt-ephemeral-disks/disk-data/tumbleweed-containerdisk-0/disk.qcow2

sdb /var/run/kubevirt-ephemeral-disks/cloud-init-data/default/tumbleweed/noCloud.iso

qemu@tumbleweed:/> virsh domiflist 1

Interface Type Source Model MAC

------------------------------------------------------------------------------

tap0 ethernet - virtio-non-transitional e6:e9:1a:05:c0:92

qemu@tumbleweed:/> exit

exit

Finally, let us delete this virtual machine to clean up:

$ kubectl delete vm/tumbleweed
virtualmachine.kubevirt.io "tumbleweed" deleted

20.5 Using virtctl #

Along with the standard Kubernetes CLI tooling, that is, kubectl, KubeVirt comes with an accompanying CLI utility that allows you to interface with your cluster in a way that bridges some gaps between the virtualization world and the world that Kubernetes was designed for. For example, the virtctl tool provides the capability of managing the lifecycle of virtual machines (starting, stopping, restarting, etc.), providing access to the virtual consoles, uploading virtual machine images, as well as interfacing with Kubernetes constructs such as services, without using the API or CRDs directly.

Let us download the virtctl tool. Set VERSION to the release you need, ideally the one matching the KubeVirt version deployed in your cluster:

$ export VERSION=v0.6.0
$ wget https://github.com/kubevirt/kubevirt/releases/download/$VERSION/virtctl-$VERSION-linux-amd64

If you are using a different architecture or a non-Linux machine, you can find other releases here. You need to make this executable before proceeding, and it may be useful to move it to a location within your $PATH:

$ mv virtctl-$VERSION-linux-amd64 /usr/local/bin/virtctl
$ chmod a+x /usr/local/bin/virtctl
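For other platforms, the download URL follows the same pattern with a different suffix. The sketch below builds the URL from its parts; the helper names are hypothetical, and the availability of a given OS and architecture combination for a given release is an assumption you should confirm on the releases page.

```shell
# Build the download URL for a virtctl release asset.
# Arguments: version tag, OS (for example linux or darwin), architecture.
virtctl_url() {
  printf 'https://github.com/kubevirt/kubevirt/releases/download/%s/virtctl-%s-%s-%s\n' \
    "$1" "$1" "$2" "$3"
}

# Map `uname -m` output to the architecture names used in release assets.
release_arch() {
  case "$1" in
    x86_64) echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}
# Example: wget "$(virtctl_url "$VERSION" linux "$(release_arch "$(uname -m)")")"
```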

You can then use the virtctl command-line tool to create virtual machines. Let us replicate our previous virtual machine, noting that we are piping the output directly into kubectl apply:

$ virtctl create vm --name virtctl-example --memory=1Gi \
    --volume-containerdisk=src:quay.io/containerdisks/opensuse-tumbleweed:1.0.0 \
    --cloud-init-user-data "I2Nsb3VkLWNvbmZpZwpkaXNhYmxlX3Jvb3Q6IGZhbHNlCnNzaF9wd2F1dGg6IFRydWUKdXNlcnM6CiAgLSBkZWZhdWx0CiAgLSBuYW1lOiBzdXNlCiAgICBncm91cHM6IHN1ZG8KICAgIHNoZWxsOiAvYmluL2Jhc2gKICAgIHN1ZG86ICBBTEw9KEFMTCkgTk9QQVNTV0Q6QUxMCiAgICBsb2NrX3Bhc3N3ZDogRmFsc2UKICAgIHBsYWluX3RleHRfcGFzc3dkOiAnc3VzZScK" | kubectl apply -f -

This should then show the virtual machine running (it should start a lot quicker this time given that the container image will be cached):

$ kubectl get vmi
NAME              AGE   PHASE     IP           NODENAME               READY
virtctl-example   52s   Running   10.42.2.29   node3.edge.rdo.wales   True

Now we can use virtctl to connect directly to the virtual machine:

$ virtctl ssh suse@virtctl-example
(password is "suse" - Ctrl-D to exit)

There are plenty of other commands that can be used by virtctl. For example, virtctl console can give you access to the serial console if networking is not working, and you can use virtctl guestosinfo to get comprehensive OS information, subject to the guest having the qemu-guest-agent installed and running.

Finally, let us pause and resume the virtual machine:

$ virtctl pause vm virtctl-example
VMI virtctl-example was scheduled to pause

You will find that the VirtualMachine object shows as Paused and the VirtualMachineInstance object shows as Running but READY=False:

$ kubectl get vm
NAME              AGE     STATUS   READY
virtctl-example   8m14s   Paused   False

$ kubectl get vmi
NAME              AGE     PHASE     IP           NODENAME               READY
virtctl-example   8m15s   Running   10.42.2.29   node3.edge.rdo.wales   False
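The paused state can also be queried directly for use in scripts. This is a sketch that assumes KubeVirt records a condition of type `Paused` on the VirtualMachineInstance while it is paused; the helper name is hypothetical.

```shell
# Print the status of the "Paused" condition of a VirtualMachineInstance.
# Prints nothing if no such condition is present on the object.
# Arguments: VMI name, then an optional namespace (defaults to "default").
vmi_paused_status() {
  kubectl get vmi "$1" -n "${2:-default}" \
    -o jsonpath='{.status.conditions[?(@.type=="Paused")].status}'
}
# Example: [ "$(vmi_paused_status virtctl-example)" = "True" ] && echo "VM is paused"
```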

You will also find that you can no longer connect to the virtual machine:

$ virtctl ssh suse@virtctl-example
can't access VMI virtctl-example: Operation cannot be fulfilled on virtualmachineinstance.kubevirt.io "virtctl-example": VMI is paused

Let us resume the virtual machine and try again:

$ virtctl unpause vm virtctl-example
VMI virtctl-example was scheduled to unpause

Now we should be able to re-establish a connection:

$ virtctl ssh suse@virtctl-example
suse@vmi/virtctl-example.default's password:
suse@virtctl-example:~> exit
logout

Finally, let us remove the virtual machine:

$ kubectl delete vm/virtctl-example
virtualmachine.kubevirt.io "virtctl-example" deleted

20.6 Simple ingress networking #

In this section, we show how you can expose virtual machines as standard Kubernetes services and make them available via the Kubernetes ingress service, for example, NGINX with RKE2 or Traefik with K3s. This document assumes that these components are already configured appropriately and that you have an appropriate DNS pointer, for example, via a wild card, to point at your Kubernetes server nodes or your ingress virtual IP for proper ingress resolution.

Note: In SUSE Edge 3.1+, if you are using K3s in a multi-server node configuration, you might have needed to configure a MetalLB-based VIP for Ingress; this is not required for RKE2.

In the example environment, another openSUSE Tumbleweed virtual machine is deployed, cloud-init is used to install NGINX as a simple Web server at boot time, and a simple message is configured to be returned to verify that it works as expected when a call is made. To see how this is done, simply base64 -d the cloud-init section in the output below.
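Going the other way, a payload like this can be produced from a plain cloud-config file. The following is a minimal sketch using a shortened, hypothetical cloud-config (it is not the exact content used in this section) to show how the text is encoded onto a single line.

```shell
# A small, hypothetical cloud-config; adapt the commands to your own needs.
USER_DATA="$(cat <<'CLOUDCFG'
#cloud-config
runcmd:
  - zypper in -y nginx
  - systemctl enable --now nginx
CLOUDCFG
)"

# Encode on a single line, ready to paste into userDataBase64.
USER_DATA_B64="$(printf '%s\n' "$USER_DATA" | base64 | tr -d '\n')"
printf '%s\n' "$USER_DATA_B64"
```

Stripping the newlines that `base64` inserts keeps the value on a single line, which is convenient for a YAML scalar.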

Let us create this virtual machine now:

$ kubectl apply -f - <<EOF
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  name: ingress-example
  namespace: default
spec:
  runStrategy: Always
  template:
    metadata:
      labels:
        app: nginx
    spec:
      domain:
        devices: {}
        machine:
          type: q35
        memory:
          guest: 2Gi
        resources: {}
      volumes:
      - containerDisk:
          image: quay.io/containerdisks/opensuse-tumbleweed:1.0.0
        name: tumbleweed-containerdisk-0
      - cloudInitNoCloud:
          userDataBase64: I2Nsb3VkLWNvbmZpZwpkaXNhYmxlX3Jvb3Q6IGZhbHNlCnNzaF9wd2F1dGg6IFRydWUKdXNlcnM6CiAgLSBkZWZhdWx0CiAgLSBuYW1lOiBzdXNlCiAgICBncm91cHM6IHN1ZG8KICAgIHNoZWxsOiAvYmluL2Jhc2gKICAgIHN1ZG86ICBBTEw9KEFMTCkgTk9QQVNTV0Q6QUxMCiAgICBsb2NrX3Bhc3N3ZDogRmFsc2UKICAgIHBsYWluX3RleHRfcGFzc3dkOiAnc3VzZScKcnVuY21kOgogIC0genlwcGVyIGluIC15IG5naW54CiAgLSBzeXN0ZW1jdGwgZW5hYmxlIC0tbm93IG5naW54CiAgLSBlY2hvICJJdCB3b3JrcyEiID4gL3Nydi93d3cvaHRkb2NzL2luZGV4Lmh0bQo=
        name: cloudinitdisk
EOF

When this virtual machine has successfully started, we can use the virtctl command to expose the VirtualMachineInstance with an external port of 8080 and a target port of 80 (where NGINX listens by default). We use the virtctl command here as it understands the mapping between the virtual machine object and the pod. This creates a new service for us:

$ virtctl expose vmi ingress-example --port=8080 --target-port=80 --name=ingress-example
Service ingress-example successfully exposed for vmi ingress-example

We will then have an appropriate service automatically created:

$ kubectl get svc/ingress-example
NAME              TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
ingress-example   ClusterIP   10.43.217.19   <none>        8080/TCP   9s

Next, use kubectl create ingress to create an ingress object that points to this service. Adapt the URL (known as the "host" in the ingress object) to match your DNS configuration and ensure that you point it to port 8080:

$ kubectl create ingress ingress-example --rule=ingress-example.suse.local/=ingress-example:8080

With DNS being configured correctly, you should be able to curl the URL immediately:

$ curl ingress-example.suse.local
It works!
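If DNS is not yet in place, the ingress rule can still be tested by telling curl which address to use for the host name. This is a minimal sketch, assuming the ingress controller listens on port 80 at a node IP or the ingress VIP; the helper name and the example address are hypothetical.

```shell
# Request a host through a specific ingress IP without relying on DNS.
# Arguments: host name from the ingress rule, IP of a node or the ingress VIP.
curl_via_ingress() {
  curl --silent --show-error --resolve "$1:80:$2" "http://$1/"
}
# Example: curl_via_ingress ingress-example.suse.local 192.0.2.10
```

The `--resolve` option keeps the correct Host header in the request, so the ingress controller matches the rule exactly as it would with working DNS.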

Let us clean up by removing this virtual machine and its service and ingress resources:

$ kubectl delete vm/ingress-example svc/ingress-example ingress/ingress-example
virtualmachine.kubevirt.io "ingress-example" deleted
service "ingress-example" deleted
ingress.networking.k8s.io "ingress-example" deleted

20.7 Using the Rancher UI extension #

SUSE Edge Virtualization provides a UI extension for Rancher Manager, enabling basic virtual machine management using the Rancher dashboard UI.

20.7.1 Installation #

See Rancher Dashboard Extensions (Chapter 6, Rancher Dashboard Extensions) for installation guidance.

20.7.2 Using KubeVirt Rancher Dashboard Extension #

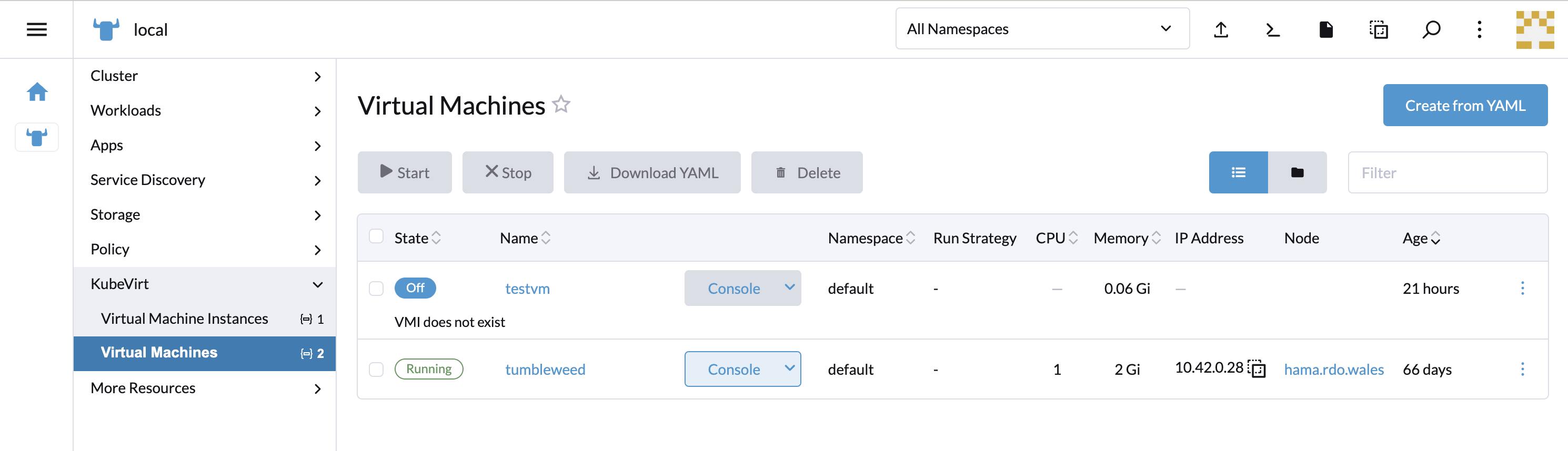

The extension introduces a new KubeVirt section to the Cluster Explorer. This section is added to any managed cluster which has KubeVirt installed.

The extension allows you to interact directly with KubeVirt VirtualMachine resources to manage the virtual machine lifecycle.

20.7.2.1 Creating a virtual machine #

Navigate to Cluster Explorer by clicking a KubeVirt-enabled managed cluster in the left navigation.

Navigate to the KubeVirt > Virtual Machines page and click Create from YAML in the upper right of the screen.

Fill in or paste a virtual machine definition and press Create. Use the virtual machine definition from the Deploying virtual machines section as inspiration.

20.7.2.2 Virtual Machine Actions #

You can use the action menu accessed from the ⋮ drop-down list to the right of each virtual machine to perform start, stop, pause or soft reboot actions. Alternatively, you can select several virtual machines and use the group actions at the top of the list.

Performing these actions may affect the virtual machine's run strategy. See the table in the KubeVirt documentation for more details.

20.7.2.3 Accessing virtual machine console #

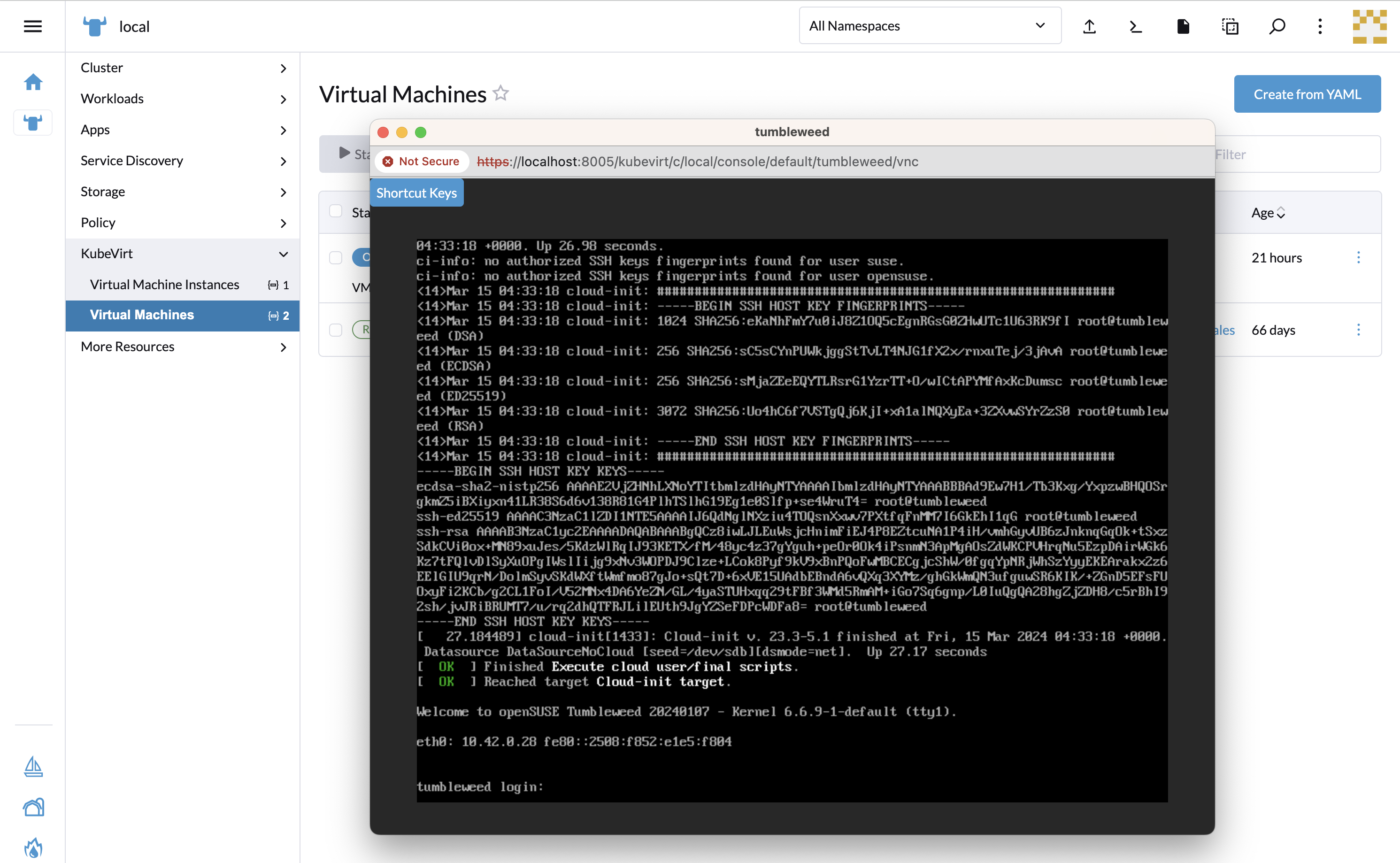

The "Virtual machines" list provides a Console drop-down list that allows you to connect to the machine using VNC or Serial Console. This action is only available for running machines.

In some cases, it takes a short while before the console is accessible on a freshly started virtual machine.

20.8 Installing with Edge Image Builder #

SUSE Edge uses Edge Image Builder (Chapter 11, Edge Image Builder) to customize base SUSE Linux Micro OS images. Follow Section 27.9, “KubeVirt and CDI Installation” for an air-gapped installation of both KubeVirt and CDI on top of Kubernetes clusters provisioned by EIB.