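36 Management Cluster

Currently, there are two ways to perform "Day 2" operations on your management cluster: through the Upgrade Controller (Section 36.1, “Upgrade Controller”) or through Fleet (Section 36.2, “Fleet”).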

36.1 Upgrade Controller

The Upgrade Controller currently supports Day 2 operations only for non-air-gapped management clusters.

This section covers how to perform the various Day 2 operations related to upgrading your management cluster from one Edge platform version to another.

The Day 2 operations are automated by the Upgrade Controller (Chapter 22, Upgrade Controller) and include:

SUSE Linux Micro (Chapter 9, SUSE Linux Micro) OS upgrade

Chapter 15, RKE2 or Chapter 14, K3s Kubernetes upgrade

SUSE additional components (SUSE Rancher Prime, SUSE Security, etc.) upgrade

36.1.1 Prerequisites

Before upgrading your management cluster, the following prerequisites must be met:

SCC registered nodes - ensure that the OS of your cluster nodes is registered with a subscription key that supports the OS version specified in the Edge release (Section 53.1, “Abstract”) you intend to upgrade to.

Upgrade Controller - make sure that the Upgrade Controller has been deployed on your management cluster. For installation steps, refer to Section 22.3, “Installing the Upgrade Controller”.

36.1.2 Upgrade

Determine the Edge release (Section 53.1, “Abstract”) version that you wish to upgrade your management cluster to.

In the management cluster, deploy an UpgradePlan that specifies the desired release version. The UpgradePlan must be deployed in the namespace of the Upgrade Controller.

```shell
kubectl apply -n <upgrade_controller_namespace> -f - <<EOF
apiVersion: lifecycle.suse.com/v1alpha1
kind: UpgradePlan
metadata:
  name: upgrade-plan-mgmt
spec:
  # Version retrieved from release notes
  releaseVersion: 3.X.Y
EOF
```

Note: There may be use-cases where you would want to make additional configurations over the UpgradePlan. For all possible configurations, refer to Section 22.6.1, “UpgradePlan”.

Deploying the UpgradePlan to the Upgrade Controller’s namespace will begin the upgrade process.

Note: For more information on the actual upgrade process, refer to Section 22.5, “How does the Upgrade Controller work?”. For information on how to track the upgrade process, refer to Section 22.7, “Tracking the upgrade process”.
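If you script this step, a small wrapper that renders the UpgradePlan and rejects malformed versions can catch typos, such as an unreplaced 3.X.Y placeholder, before anything reaches the cluster. The following is a minimal sketch, assuming release versions use the three-part X.Y.Z form; the function name render_upgrade_plan is hypothetical and not part of the Upgrade Controller.

```shell
#!/bin/sh
# Hypothetical helper: print an UpgradePlan manifest for a plan name and an
# Edge release version. Assumes versions use the X.Y.Z form (e.g. 3.5.0).
render_upgrade_plan() {
  name="$1"
  version="$2"
  # Reject placeholders such as 3.X.Y or anything that is not X.Y.Z digits
  if ! printf '%s\n' "$version" | grep -Eq '^[0-9]+\.[0-9]+\.[0-9]+$'; then
    echo "invalid release version: $version" >&2
    return 1
  fi
  cat <<EOF
apiVersion: lifecycle.suse.com/v1alpha1
kind: UpgradePlan
metadata:
  name: ${name}
spec:
  releaseVersion: ${version}
EOF
}
```

For example, `render_upgrade_plan upgrade-plan-mgmt 3.5.0 | kubectl apply -n <upgrade_controller_namespace> -f -` would apply the same manifest as the heredoc above, with 3.5.0 standing in for the version taken from the release notes.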

36.2 Fleet

This section offers information on how to perform "Day 2" operations using the Fleet (Chapter 8, Fleet) component.

The following topics are covered as part of this section:

Section 36.2.1, “Components” - default components used for all "Day 2" operations.

Section 36.2.2, “Determine your use-case” - provides an overview of the Fleet custom resources that will be used and their suitability for different "Day 2" operations use-cases.

Section 36.2.3, “Day 2 workflow” - provides a workflow guide for executing "Day 2" operations with Fleet.

Section 36.2.4, “OS upgrade” - describes how to do OS upgrades using Fleet.

Section 36.2.5, “Kubernetes version upgrade” - describes how to do Kubernetes version upgrades using Fleet.

Section 36.2.6, “Helm chart upgrade” - describes how to do Helm chart upgrades using Fleet.

36.2.1 Components

Below you can find a description of the default components that should be set up on your management cluster so that you can successfully perform "Day 2" operations using Fleet.

36.2.1.1 Rancher

Optional. Responsible for managing downstream clusters and deploying the System Upgrade Controller on your management cluster.

For more information, see Chapter 5, Rancher.

36.2.1.2 System Upgrade Controller (SUC)

System Upgrade Controller is responsible for executing tasks on specified nodes based on configuration data provided through a custom resource, called a Plan.

SUC is used to upgrade the operating system and the Kubernetes distribution.

For more information about the SUC component and how it fits in the Edge stack, see Chapter 21, System Upgrade Controller.

36.2.2 Determine your use-case

Fleet uses two types of custom resources to enable the management of Kubernetes and Helm resources.

Below you can find information about the purpose of these resources and the use-cases they are best suited for in the context of "Day 2" operations.

36.2.2.1 GitRepo

A GitRepo is a Fleet (Chapter 8, Fleet) resource that represents a Git repository from which Fleet can create Bundles. Each Bundle is created based on configuration paths defined inside of the GitRepo resource. For more information, see the GitRepo documentation.

In the context of "Day 2" operations, GitRepo resources are normally used to deploy SUC or SUC Plans in non air-gapped environments that utilize a Fleet GitOps approach.

Alternatively, GitRepo resources can also be used to deploy SUC or SUC Plans in air-gapped environments, provided that you mirror your repository setup through a local Git server.

36.2.2.2 Bundle

Bundles hold raw Kubernetes resources that will be deployed on the targeted cluster. Usually they are created from a GitRepo resource, but there are use-cases where they can be deployed manually. For more information, refer to the Bundle documentation.

In the context of "Day 2" operations, Bundle resources are normally used to deploy SUC or SUC Plans in air-gapped environments that do not use some form of local GitOps procedure (e.g. a local git server).

Alternatively, if your use-case does not allow for a GitOps workflow (e.g. using a Git repository), Bundle resources could also be used to deploy SUC or SUC Plans in non air-gapped environments.
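The guidance above comes down to one question: can the cluster pull from a Git repository, either directly or through a local mirror? As a rough illustration only, the decision can be sketched as a shell function; the name choose_fleet_resource and its yes/no arguments are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper encoding the GitRepo/Bundle guidance above.
# $1: "yes" if the environment is air-gapped, otherwise "no"
# $2: "yes" if a Git repository usable for a GitOps workflow is available
#     (a regular repository, or a local Git server mirror when air-gapped)
choose_fleet_resource() {
  airgapped="$1"
  git_reachable="$2"
  if [ "$git_reachable" != "yes" ]; then
    # No GitOps workflow possible: ship the raw resources as a Bundle
    echo "Bundle"
  elif [ "$airgapped" = "yes" ]; then
    # Air-gapped, but the repository is mirrored locally
    echo "GitRepo (mirrored through a local Git server)"
  else
    echo "GitRepo"
  fi
}
```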

36.2.3 Day 2 workflow

The following is a "Day 2" workflow that should be followed when upgrading a management cluster to a specific Edge release.

OS upgrade (Section 36.2.4, “OS upgrade”)

Kubernetes version upgrade (Section 36.2.5, “Kubernetes version upgrade”)

Helm chart upgrade (Section 36.2.6, “Helm chart upgrade”)

36.2.4 OS upgrade

This section describes how to perform an operating system upgrade using Fleet (Chapter 8, Fleet) and the System Upgrade Controller (Chapter 21, System Upgrade Controller).

The following topics are covered as part of this section:

Section 36.2.4.1, “Components” - additional components used by the upgrade process.

Section 36.2.4.2, “Overview” - overview of the upgrade process.

Section 36.2.4.3, “Requirements” - requirements of the upgrade process.

Section 36.2.4.4, “OS upgrade - SUC plan deployment” - information on how to deploy SUC plans, responsible for triggering the upgrade process.

36.2.4.1 Components

This section covers the custom components that the OS upgrade process uses in addition to the default "Day 2" components (Section 36.2.1, “Components”).

36.2.4.1.1 systemd.service

The OS upgrade on a specific node is handled by a systemd.service.

A different service is created depending on what type of upgrade the OS requires from one Edge version to another:

For Edge versions that require the same OS version (e.g. 6.1), the os-pkg-update.service will be created. It uses transactional-update to perform a normal package upgrade.

For Edge versions that require an OS version migration (e.g. 6.1 → 6.2), the os-migration.service will be created. It uses transactional-update to perform:

A normal package upgrade, which ensures that all packages are up-to-date in order to mitigate any failures in the migration related to old package versions.

An OS migration by utilizing the zypper migration command.

The services mentioned above are shipped on each node through a SUC plan which must be located on the management cluster that is in need of an OS upgrade.
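To make the distinction above concrete, the selection rule can be sketched as follows. This is a hypothetical illustration that assumes the choice depends only on whether the current and target OS versions differ; the actual logic lives in the upgrade.sh script shipped with the OS SUC plans (see Section 36.2.4.4.3) and may take more into account.

```shell
#!/bin/sh
# Hypothetical illustration only: the real decision is made by the upgrade.sh
# script shipped with the OS SUC plans and may differ.
# $1: OS version currently installed on the node (e.g. 6.1)
# $2: OS version required by the target Edge release (e.g. 6.2)
select_os_service() {
  current="$1"
  target="$2"
  if [ "$current" = "$target" ]; then
    # Same OS version: normal package upgrade via transactional-update
    echo "os-pkg-update.service"
  else
    # OS version changes: package upgrade followed by zypper migration
    echo "os-migration.service"
  fi
}
```

On a node, the current version could be read from VERSION_ID in /etc/os-release, for example `. /etc/os-release && select_os_service "$VERSION_ID" 6.2`, assuming VERSION_ID holds the major.minor form used above.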

36.2.4.2 Overview

The upgrade of the operating system for management cluster nodes is done by utilizing Fleet and the System Upgrade Controller (SUC).

Fleet is used to deploy and manage SUC plans onto the desired cluster.

SUC plans are custom resources that describe the steps that SUC needs to follow in order for a specific task to be executed on a set of nodes. For an example of what a SUC plan looks like, refer to the upstream repository.

The OS SUC plans are shipped to each cluster by deploying a GitRepo or Bundle resource to a specific Fleet workspace. Fleet retrieves the deployed GitRepo/Bundle and deploys its contents (the OS SUC plans) to the desired cluster(s).

GitRepo/Bundle resources are always deployed on the management cluster. Whether to use a GitRepo or Bundle resource depends on your use-case; see Section 36.2.2, “Determine your use-case” for more information.

OS SUC plans describe the following workflow:

Always cordon the nodes before OS upgrades.

Always upgrade control-plane nodes before worker nodes.

Always upgrade the cluster one node at a time.

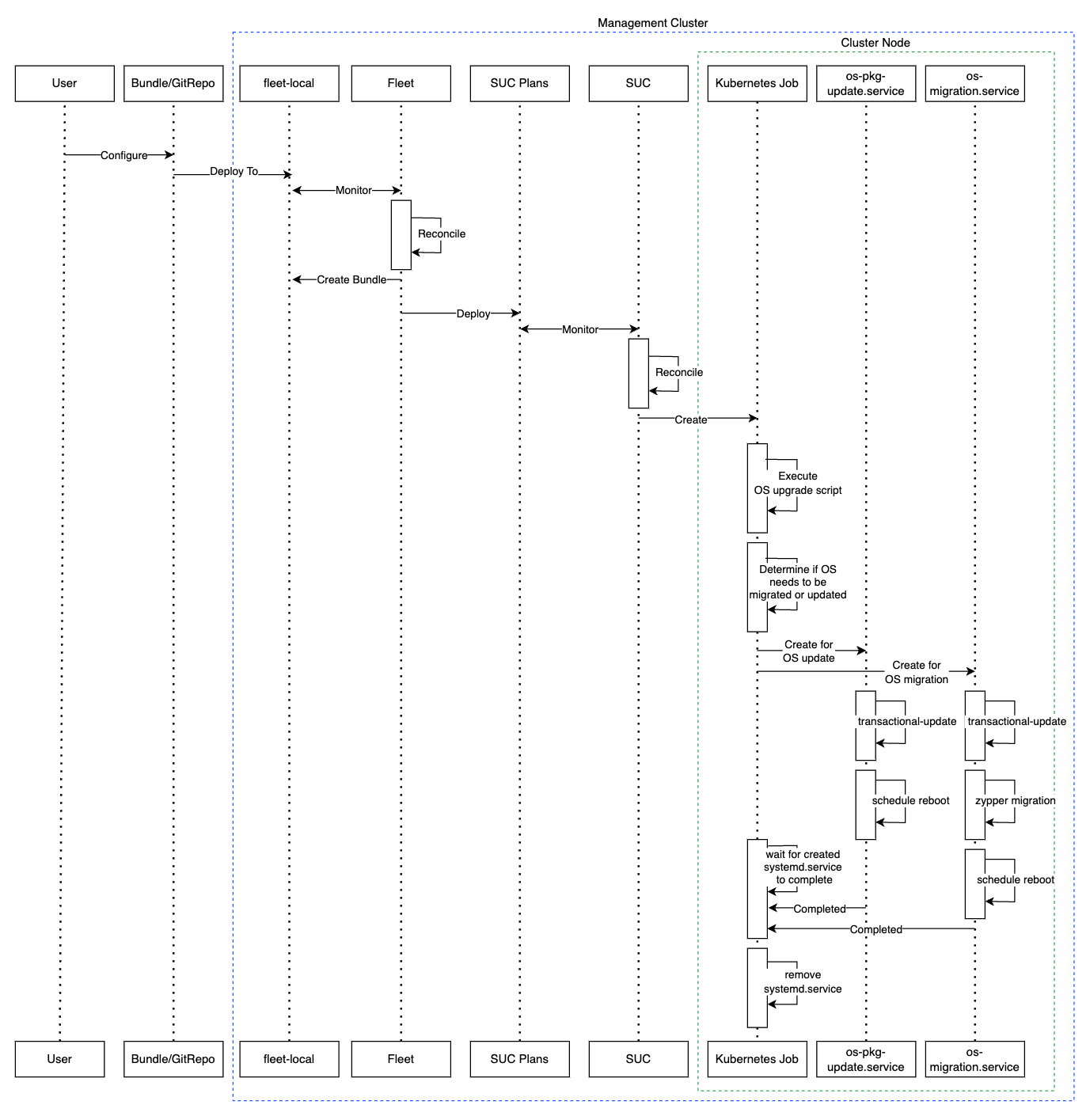

Once the OS SUC plans are deployed, the workflow looks like this:

SUC reconciles the deployed

OS SUC plansand creates aKubernetes Jobon each node.The

Kubernetes Jobcreates a systemd.service (Section 36.2.4.1.1, “systemd.service”) for either package upgrade, or OS migration.The created

systemd.servicetriggers the OS upgrade process on the specific node.ImportantOnce the OS upgrade process finishes, the corresponding node will be

rebootedto apply the updates on the system.

Below you can find a diagram of the above description:

36.2.4.3 Requirements

General:

SCC registered machine - All management cluster nodes should be registered to https://scc.suse.com/, which is needed so that the respective systemd.service can successfully connect to the desired RPM repository.

Important: For Edge releases that require an OS version migration (e.g. 6.1 → 6.2), make sure that your SCC key supports the migration to the new version.

Make sure that SUC Plan tolerations match node taints - If your Kubernetes cluster nodes have custom taints, make sure to add tolerations for those taints in the SUC Plans. By default, SUC Plans have tolerations only for control-plane nodes. Default tolerations include:

CriticalAddonsOnly=true:NoExecute

node-role.kubernetes.io/control-plane:NoSchedule

node-role.kubernetes.io/etcd:NoExecute

Note: Any additional tolerations must be added under the .spec.tolerations section of each Plan. SUC Plans related to the OS upgrade can be found in the suse-edge/fleet-examples repository under fleets/day2/system-upgrade-controller-plans/os-upgrade. Make sure you use the Plans from a valid repository release tag.

An example of defining custom tolerations for the control-plane SUC Plan would look like this:

```yaml
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: os-upgrade-control-plane
spec:
  ...
  tolerations:
  # default tolerations
  - key: "CriticalAddonsOnly"
    operator: "Equal"
    value: "true"
    effect: "NoExecute"
  - key: "node-role.kubernetes.io/control-plane"
    operator: "Equal"
    effect: "NoSchedule"
  - key: "node-role.kubernetes.io/etcd"
    operator: "Equal"
    effect: "NoExecute"
  # custom toleration
  - key: "foo"
    operator: "Equal"
    value: "bar"
    effect: "NoSchedule"
  ...
```
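Before deploying the Plans, it can help to check that every taint key on your nodes has a matching toleration. The sketch below is a plain text check, assuming tolerations are written as `key: "..."` lines like in the example above; it compares keys only (not values or effects), treats the key as a loose regular expression, and the function name missing_tolerations is hypothetical.

```shell
#!/bin/sh
# Hypothetical pre-flight check: print every taint key that has no matching
# toleration key in a SUC Plan manifest. Plain text match on lines such as
#   - key: "foo"
# Values and effects are not compared.
# $1: path to the Plan manifest; remaining args: taint keys from your nodes
missing_tolerations() {
  plan="$1"
  shift
  for key in "$@"; do
    # Accept quoted and unquoted YAML values
    if ! grep -Eq 'key:[[:space:]]*"?'"$key"'"?[[:space:]]*$' "$plan"; then
      echo "$key"
    fi
  done
}
```

Node taint keys could be collected with `kubectl get nodes -o jsonpath='{range .items[*]}{range .spec.taints[*]}{.key}{"\n"}{end}{end}' | sort -u` and passed as the remaining arguments.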

Air-gapped:

36.2.4.4 OS upgrade - SUC plan deployment

For environments previously upgraded using this procedure, users should ensure that one of the following steps is completed:

Remove any previously deployed SUC Plans related to older Edge release versions from the management cluster - this can be done by removing the desired cluster from the existing GitRepo/Bundle target configuration, or by removing the GitRepo/Bundle resource altogether.

Reuse the existing GitRepo/Bundle resource - this can be done by pointing the resource’s revision to a new tag that holds the correct fleets for the desired suse-edge/fleet-examples release.

This is done in order to avoid clashes between SUC Plans for older Edge release versions.
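For the second option, the revision of an existing GitRepo can be changed in place with `kubectl patch`. The sketch below only builds the JSON merge patch; it assumes the GitRepo pins its tag through spec.revision and that tags follow the release-X.Y.Z naming used by the suse-edge/fleet-examples URLs in this section. The function name revision_patch is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build a JSON merge patch that points a Fleet GitRepo's
# spec.revision at a suse-edge/fleet-examples release tag.
# Assumes tags follow the release-X.Y.Z pattern (e.g. release-3.5.0).
revision_patch() {
  tag="$1"
  if ! printf '%s\n' "$tag" | grep -Eq '^release-[0-9]+\.[0-9]+\.[0-9]+$'; then
    echo "unexpected tag format: $tag" >&2
    return 1
  fi
  printf '{"spec":{"revision":"%s"}}\n' "$tag"
}
```

For example: `kubectl patch gitrepo os-upgrade -n fleet-local --type merge -p "$(revision_patch release-3.5.0)"`, where os-upgrade and fleet-local match the manual GitRepo steps in this section.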

If users attempt to upgrade while there are existing SUC Plans on the management cluster, they will see the following Fleet error:

```
Not installed: Unable to continue with install: Plan <plan_name> in namespace <plan_namespace> exists and cannot be imported into the current release: invalid ownership metadata; annotation validation error.
```

As mentioned in Section 36.2.4.2, “Overview”, OS upgrades are done by shipping SUC plans to the desired cluster through one of the following ways:

Fleet GitRepo resource - Section 36.2.4.4.1, “SUC plan deployment - GitRepo resource”.

Fleet Bundle resource - Section 36.2.4.4.2, “SUC plan deployment - Bundle resource”.

To determine which resource you should use, refer to Section 36.2.2, “Determine your use-case”.

For use-cases where you wish to deploy the OS SUC plans from a third-party GitOps tool, refer to Section 36.2.4.4.3, “SUC Plan deployment - third-party GitOps workflow”.

36.2.4.4.1 SUC plan deployment - GitRepo resource

A GitRepo resource that ships the needed OS SUC plans can be deployed in one of the following ways:

Through the Rancher UI - Section 36.2.4.4.1.1, “GitRepo creation - Rancher UI” (when Rancher is available).

By manually deploying (Section 36.2.4.4.1.2, “GitRepo creation - manual”) the resource to your management cluster.

Once deployed, to monitor the OS upgrade process of the nodes of your targeted cluster, refer to Section 21.3, “Monitoring System Upgrade Controller Plans”.

36.2.4.4.1.1 GitRepo creation - Rancher UI

To create a GitRepo resource through the Rancher UI, follow the official Rancher documentation.

The Edge team maintains a ready-to-use fleet. Depending on your environment, this fleet could be used directly or as a template.

For use-cases where no custom changes need to be included in the SUC plans that the fleet ships, users can directly refer to the os-upgrade fleet from the suse-edge/fleet-examples repository.

In cases where custom changes are needed (e.g. to add custom tolerations), users should refer to the os-upgrade fleet from a separate repository, allowing them to add the changes to the SUC plans as required.

An example of how a GitRepo can be configured to use the fleet from the suse-edge/fleet-examples repository can be found in gitrepos/day2/os-upgrade-gitrepo.yaml of that repository.

36.2.4.4.1.2 GitRepo creation - manual

Pull the GitRepo resource:

```shell
curl -o os-upgrade-gitrepo.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/refs/tags/release-3.5.0/gitrepos/day2/os-upgrade-gitrepo.yaml
```

Edit the GitRepo configuration:

Remove the spec.targets section - only needed for downstream clusters.

```shell
# Example using sed
sed -i.bak '/^  targets:/,$d' os-upgrade-gitrepo.yaml && rm -f os-upgrade-gitrepo.yaml.bak

# Example using yq (v4+)
yq eval 'del(.spec.targets)' -i os-upgrade-gitrepo.yaml
```

Point the namespace of the GitRepo to the fleet-local namespace - done in order to deploy the resource on the management cluster.

```shell
# Example using sed
sed -i.bak 's/namespace: fleet-default/namespace: fleet-local/' os-upgrade-gitrepo.yaml && rm -f os-upgrade-gitrepo.yaml.bak

# Example using yq (v4+)
yq eval '.metadata.namespace = "fleet-local"' -i os-upgrade-gitrepo.yaml
```

Apply the GitRepo resource to your management cluster:

```shell
kubectl apply -f os-upgrade-gitrepo.yaml
```

View the created GitRepo resource under the fleet-local namespace:

```shell
kubectl get gitrepo os-upgrade -n fleet-local

# Example output
NAME         REPO                                              COMMIT          BUNDLEDEPLOYMENTS-READY   STATUS
os-upgrade   https://github.com/suse-edge/fleet-examples.git   release-3.5.0   0/0
```
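If you prefer not to edit the file in place (sed -i handles its backup-suffix argument differently in GNU and BSD sed), the same two edits can be applied as a stdin-to-stdout filter. This sketch carries the same assumption as the sed example: targets is the last field of the spec and is indented by two spaces. The function name prepare_gitrepo_for_mgmt is hypothetical.

```shell
#!/bin/sh
# Hypothetical stdin-to-stdout variant of the two sed edits above:
#  1. drop everything from the spec.targets line to the end of the file
#     (assumes targets is the last spec field, indented by two spaces)
#  2. move the resource from fleet-default to fleet-local
prepare_gitrepo_for_mgmt() {
  sed -e '/^  targets:/,$d' \
      -e 's/namespace: fleet-default/namespace: fleet-local/'
}
```

For example: `curl -fsSL <gitrepo_url> | prepare_gitrepo_for_mgmt > os-upgrade-gitrepo.yaml`, where `<gitrepo_url>` is the URL from the pull step.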

36.2.4.4.2 SUC plan deployment - Bundle resource

A Bundle resource that ships the needed OS SUC Plans can be deployed in one of the following ways:

Through the Rancher UI - Section 36.2.4.4.2.1, “Bundle creation - Rancher UI” (when Rancher is available).

By manually deploying (Section 36.2.4.4.2.2, “Bundle creation - manual”) the resource to your management cluster.

Once deployed, to monitor the OS upgrade process of the nodes of your targeted cluster, refer to Section 21.3, “Monitoring System Upgrade Controller Plans”.

36.2.4.4.2.1 Bundle creation - Rancher UI

The Edge team maintains a ready-to-use bundle that can be used in the steps below.

To create a bundle through Rancher’s UI:

In the upper left corner, click ☰ → Continuous Delivery

Go to Advanced > Bundles

Select Create from YAML

From here you can create the Bundle in one of the following ways:

Note: There might be use-cases where you would need to include custom changes to the SUC plans that the bundle ships (e.g. to add custom tolerations). Make sure to include those changes in the bundle that will be generated by the steps below.

By manually copying the bundle content from suse-edge/fleet-examples to the Create from YAML page.

By cloning the suse-edge/fleet-examples repository from the desired release tag and selecting the Read from File option in the Create from YAML page. From there, navigate to the bundle location (bundles/day2/system-upgrade-controller-plans/os-upgrade) and select the bundle file. This will auto-populate the Create from YAML page with the bundle content.

Edit the Bundle in the Rancher UI:

Change the namespace of the Bundle to point to the fleet-local namespace.

```yaml
# Example
kind: Bundle
apiVersion: fleet.cattle.io/v1alpha1
metadata:
  name: os-upgrade
  namespace: fleet-local
...
```

Change the target clusters for the Bundle to point to your local (management) cluster:

```yaml
spec:
  targets:
  - clusterName: local
```

Note: There are some use-cases where your local cluster could have a different name. To retrieve your local cluster name, execute the command below:

```shell
kubectl get clusters.fleet.cattle.io -n fleet-local
```

Select Create
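As the note above mentions, the local cluster is not always called local. When scripting, the name can be taken from the first column of the kubectl output. A minimal sketch, assuming the management cluster is the only Fleet cluster registered in fleet-local (the function fails otherwise so that you can check manually); local_cluster_name is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: read the output of
#   kubectl get clusters.fleet.cattle.io -n fleet-local --no-headers
# from stdin and print the cluster name (first column).
# Assumes exactly one cluster is registered in fleet-local.
local_cluster_name() {
  names=$(awk 'NF > 0 { print $1 }')
  count=$(printf '%s\n' "$names" | awk 'NF > 0 { n++ } END { print n + 0 }')
  if [ "$count" -ne 1 ]; then
    echo "expected exactly one cluster in fleet-local, found $count" >&2
    return 1
  fi
  printf '%s\n' "$names"
}
```

For example: `kubectl get clusters.fleet.cattle.io -n fleet-local --no-headers | local_cluster_name`.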

36.2.4.4.2.2 Bundle creation - manual

Pull the Bundle resource:

```shell
curl -o os-upgrade-bundle.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/refs/tags/release-3.5.0/bundles/day2/system-upgrade-controller-plans/os-upgrade/os-upgrade-bundle.yaml
```

Edit the Bundle configuration:

Change the target clusters for the Bundle to point to your local (management) cluster:

```yaml
spec:
  targets:
  - clusterName: local
```

Note: There are some use-cases where your local cluster could have a different name. To retrieve your local cluster name, execute the command below:

```shell
kubectl get clusters.fleet.cattle.io -n fleet-local
```

Change the namespace of the Bundle to point to the fleet-local namespace.

```yaml
# Example
kind: Bundle
apiVersion: fleet.cattle.io/v1alpha1
metadata:
  name: os-upgrade
  namespace: fleet-local
...
```

Apply the Bundle resource to your management cluster:

```shell
kubectl apply -f os-upgrade-bundle.yaml
```

View the created Bundle resource under the fleet-local namespace:

```shell
kubectl get bundles -n fleet-local
```
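Before applying the edited file, a quick text check can confirm that both edits were made. This is a sketch rather than YAML validation: it assumes metadata.namespace is indented by two spaces and that the target is written as `- clusterName: <name>`, as in the snippets above. The function name bundle_targets_local is hypothetical.

```shell
#!/bin/sh
# Hypothetical sanity check before `kubectl apply`: confirm that an edited
# Bundle manifest was moved to fleet-local and targets the given cluster.
# Plain text check, not a YAML parser, so still review the file.
# $1: path to the Bundle manifest, $2: local cluster name (default "local")
bundle_targets_local() {
  file="$1"
  cluster="${2:-local}"
  if ! grep -q '^  namespace: fleet-local[[:space:]]*$' "$file"; then
    echo "namespace is not fleet-local" >&2
    return 1
  fi
  if ! grep -Eq '^[[:space:]]*- clusterName:[[:space:]]*"?'"$cluster"'"?[[:space:]]*$' "$file"; then
    echo "no target with clusterName: $cluster" >&2
    return 1
  fi
}
```

For example: `bundle_targets_local os-upgrade-bundle.yaml local && kubectl apply -f os-upgrade-bundle.yaml`.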

36.2.4.4.3 SUC Plan deployment - third-party GitOps workflow

There might be use-cases where users would like to incorporate the OS SUC plans into their own third-party GitOps workflow (e.g. Flux).

To get the OS upgrade resources that you need, first determine the Edge release tag of the suse-edge/fleet-examples repository that you would like to use.

After that, the resources can be found at fleets/day2/system-upgrade-controller-plans/os-upgrade, where:

plan-control-plane.yaml is a SUC plan resource for control-plane nodes.

plan-worker.yaml is a SUC plan resource for worker nodes.

secret.yaml is a Secret that contains the upgrade.sh script, which is responsible for creating the systemd.service (Section 36.2.4.1.1, “systemd.service”).

config-map.yaml is a ConfigMap that holds configurations that are consumed by the upgrade.sh script.

These Plan resources are interpreted by the System Upgrade Controller and should be deployed on the management cluster that you wish to upgrade. For SUC deployment information, see Section 21.2, “Installing the System Upgrade Controller”.

To better understand how your GitOps workflow can be used to deploy the SUC Plans for OS upgrade, it can be beneficial to take a look at the overview (Section 36.2.4.2, “Overview”).
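When mirroring these resources into your own repository, the raw file URLs for a given tag can be generated instead of typed by hand. A sketch, assuming the raw.githubusercontent.com layout used by the curl commands earlier in this section; os_upgrade_resource_urls is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print raw.githubusercontent.com URLs for the four OS
# upgrade resources of a suse-edge/fleet-examples release tag.
os_upgrade_resource_urls() {
  tag="$1"
  base="https://raw.githubusercontent.com/suse-edge/fleet-examples/refs/tags/${tag}/fleets/day2/system-upgrade-controller-plans/os-upgrade"
  for f in plan-control-plane.yaml plan-worker.yaml secret.yaml config-map.yaml; do
    echo "${base}/${f}"
  done
}
```

For example, `for u in $(os_upgrade_resource_urls release-3.5.0); do curl -fsSLO "$u"; done` would download the four files into the current directory.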

36.2.5 Kubernetes version upgrade

This section describes how to perform a Kubernetes upgrade using Fleet (Chapter 8, Fleet) and the System Upgrade Controller (Chapter 21, System Upgrade Controller).

The following topics are covered as part of this section:

Section 36.2.5.1, “Components” - additional components used by the upgrade process.

Section 36.2.5.2, “Overview” - overview of the upgrade process.

Section 36.2.5.3, “Requirements” - requirements of the upgrade process.

Section 36.2.5.4, “K8s upgrade - SUC plan deployment” - information on how to deploy SUC plans, responsible for triggering the upgrade process.

36.2.5.1 Components

This section covers the custom components that the K8s upgrade process uses in addition to the default "Day 2" components (Section 36.2.1, “Components”).

36.2.5.1.1 rke2-upgrade

Container image responsible for upgrading the RKE2 version of a specific node.

Shipped through a Pod created by SUC based on a SUC Plan. The Plan should be located on each cluster that is in need of an RKE2 upgrade.

For more information regarding how the rke2-upgrade image performs the upgrade, see the upstream documentation.

36.2.5.1.2 k3s-upgrade

Container image responsible for upgrading the K3s version of a specific node.

Shipped through a Pod created by SUC based on a SUC Plan. The Plan should be located on each cluster that is in need of a K3s upgrade.

For more information regarding how the k3s-upgrade image performs the upgrade, see the upstream documentation.

36.2.5.2 Overview

The Kubernetes distribution upgrade for management cluster nodes is done by utilizing Fleet and the System Upgrade Controller (SUC).

Fleet is used to deploy and manage SUC plans onto the desired cluster.

SUC plans are custom resources that describe the steps that SUC needs to follow in order for a specific task to be executed on a set of nodes. For an example of what a SUC plan looks like, refer to the upstream repository.

The K8s SUC plans are shipped to each cluster by deploying a GitRepo or Bundle resource to a specific Fleet workspace. Fleet retrieves the deployed GitRepo/Bundle and deploys its contents (the K8s SUC plans) to the desired cluster(s).

GitRepo/Bundle resources are always deployed on the management cluster. Whether to use a GitRepo or Bundle resource depends on your use-case; see Section 36.2.2, “Determine your use-case” for more information.

K8s SUC plans describe the following workflow:

Always cordon the nodes before K8s upgrades.

Always upgrade control-plane nodes before worker nodes.

Always upgrade the control-plane nodes one node at a time and the worker nodes two nodes at a time.
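As a rough illustration of what these concurrency settings mean for the length of the upgrade, the number of sequential upgrade rounds can be estimated from the node counts. This is a back-of-the-envelope sketch that assumes every node takes roughly the same time and ignores failures and retries; k8s_upgrade_rounds is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical estimate of sequential upgrade rounds implied by the K8s SUC
# plan concurrency: control-plane nodes first, one at a time, then worker
# nodes two at a time. Ignores timing differences, failures and retries.
# $1: number of control-plane nodes, $2: number of worker nodes
k8s_upgrade_rounds() {
  control_planes="$1"
  workers="$2"
  cp_rounds=$control_planes               # concurrency 1
  worker_rounds=$(( (workers + 1) / 2 ))  # concurrency 2, rounded up
  echo $(( cp_rounds + worker_rounds ))
}
```

For example, a cluster with 3 control-plane and 5 worker nodes needs 3 control-plane rounds plus 3 worker rounds (2 + 2 + 1 nodes), so `k8s_upgrade_rounds 3 5` prints 6.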

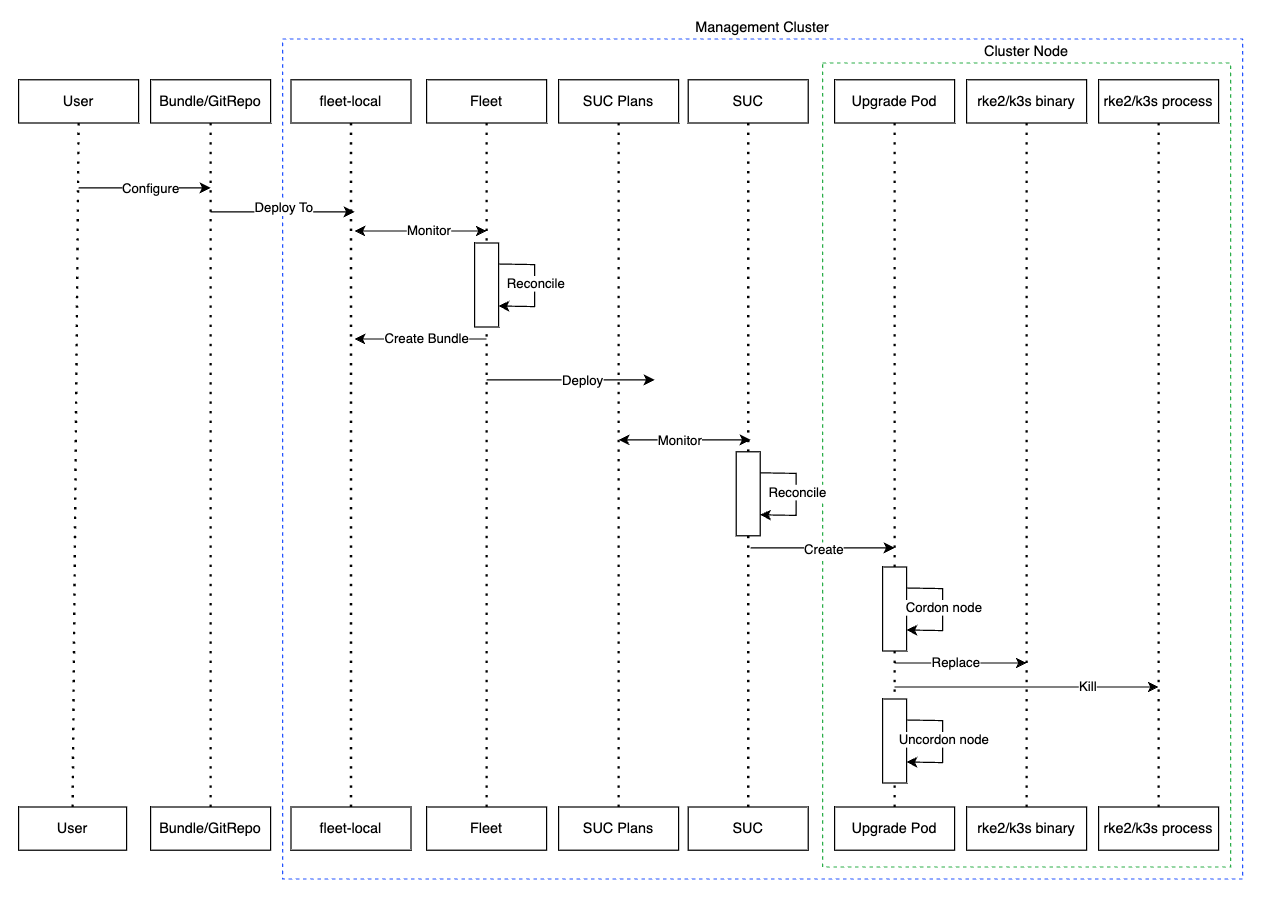

Once the K8s SUC plans are deployed, the workflow looks like this:

SUC reconciles the deployed

K8s SUC plansand creates aKubernetes Jobon each node.Depending on the Kubernetes distribution, the Job will create a Pod that runs either the rke2-upgrade (Section 36.2.5.1.1, “rke2-upgrade”) or the k3s-upgrade (Section 36.2.5.1.2, “k3s-upgrade”) container image.

The created Pod will go through the following workflow:

Replace the existing

rke2/k3sbinary on the node with the one from therke2-upgrade/k3s-upgradeimage.Kill the running

rke2/k3sprocess.

Killing the

rke2/k3sprocess triggers a restart, launching a new process that runs the updated binary, resulting in an upgraded Kubernetes distribution version.

Below you can find a diagram of the above description:

36.2.5.3 Requirements

Back up your Kubernetes distribution:

For RKE2 clusters, see the RKE2 Backup and Restore documentation.

For K3s clusters, see the K3s Backup and Restore documentation.

Make sure that SUC Plan tolerations match node taints - If your Kubernetes cluster nodes have custom taints, make sure to add tolerations for those taints in the SUC Plans. By default, SUC Plans have tolerations only for control-plane nodes. Default tolerations include:

CriticalAddonsOnly=true:NoExecute

node-role.kubernetes.io/control-plane:NoSchedule

node-role.kubernetes.io/etcd:NoExecute

Note: Any additional tolerations must be added under the .spec.tolerations section of each Plan. SUC Plans related to the Kubernetes version upgrade can be found in the suse-edge/fleet-examples repository under:

For RKE2 - fleets/day2/system-upgrade-controller-plans/rke2-upgrade

For K3s - fleets/day2/system-upgrade-controller-plans/k3s-upgrade

Make sure you use the Plans from a valid repository release tag.

An example of defining custom tolerations for the RKE2 control-plane SUC Plan would look like this:

```yaml
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: rke2-upgrade-control-plane
spec:
  ...
  tolerations:
  # default tolerations
  - key: "CriticalAddonsOnly"
    operator: "Equal"
    value: "true"
    effect: "NoExecute"
  - key: "node-role.kubernetes.io/control-plane"
    operator: "Equal"
    effect: "NoSchedule"
  - key: "node-role.kubernetes.io/etcd"
    operator: "Equal"
    effect: "NoExecute"
  # custom toleration
  - key: "foo"
    operator: "Equal"
    value: "bar"
    effect: "NoSchedule"
  ...
```
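Custom taints are usually read from `kubectl describe node <node>`, which prints them in the same key=value:Effect notation as the default list above. The sketch below converts such a string into a toleration entry laid out like the example above, keeping that example's convention of operator "Equal" (with no value for key-only taints). It is a hypothetical helper, not part of the SUC tooling, and assumes the effect is the text after the last colon.

```shell
#!/bin/sh
# Hypothetical helper: turn a node taint written as key=value:Effect or
# key:Effect into a toleration entry for the .spec.tolerations list of a
# SUC Plan (indented for a list directly under "tolerations:").
taint_to_toleration() {
  taint="$1"
  case "$taint" in
    *:*) ;;
    *) echo "expected key[=value]:Effect, got: $taint" >&2; return 1 ;;
  esac
  effect="${taint##*:}"   # text after the last ':'
  keyval="${taint%:*}"    # text before the last ':'
  case "$keyval" in
    *=*)
      printf '  - key: "%s"\n    operator: "Equal"\n    value: "%s"\n    effect: "%s"\n' \
        "${keyval%%=*}" "${keyval#*=}" "$effect"
      ;;
    *)
      printf '  - key: "%s"\n    operator: "Equal"\n    effect: "%s"\n' \
        "$keyval" "$effect"
      ;;
  esac
}
```

For example, `taint_to_toleration foo=bar:NoSchedule` reproduces the custom toleration entry from the example above.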

36.2.5.4 K8s upgrade - SUC plan deployment

For environments previously upgraded using this procedure, users should ensure that one of the following steps is completed:

Remove any previously deployed SUC Plans related to older Edge release versions from the management cluster - this can be done by removing the desired cluster from the existing GitRepo/Bundle target configuration, or by removing the GitRepo/Bundle resource altogether.

Reuse the existing GitRepo/Bundle resource - this can be done by pointing the resource’s revision to a new tag that holds the correct fleets for the desired suse-edge/fleet-examples release.

This is done in order to avoid clashes between SUC Plans for older Edge release versions.
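To check whether such leftovers exist, you can list the SUC Plans on the management cluster and filter for the Kubernetes upgrade Plans. A sketch, assuming the Plans use the rke2-upgrade-*/k3s-upgrade-* naming shown in the toleration example above; leftover_k8s_plans is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: read "namespace name" pairs from stdin, e.g. from
#   kubectl get plans.upgrade.cattle.io -A --no-headers \
#     -o custom-columns=NS:.metadata.namespace,NAME:.metadata.name
# and print namespace/name for every Plan whose name starts with
# rke2-upgrade or k3s-upgrade.
leftover_k8s_plans() {
  awk '$2 ~ /^(rke2|k3s)-upgrade/ { print $1 "/" $2 }'
}
```

Any output means that Plans from a previous upgrade are still present and should be handled by one of the two options above.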

If users attempt to upgrade while there are existing SUC Plans on the management cluster, they will see the following Fleet error:

```
Not installed: Unable to continue with install: Plan <plan_name> in namespace <plan_namespace> exists and cannot be imported into the current release: invalid ownership metadata; annotation validation error.
```

As mentioned in Section 36.2.5.2, “Overview”, Kubernetes upgrades are done by shipping SUC plans to the desired cluster through one of the following ways:

Fleet GitRepo resource (Section 36.2.5.4.1, “SUC plan deployment - GitRepo resource”)

Fleet Bundle resource (Section 36.2.5.4.2, “SUC plan deployment - Bundle resource”)

To determine which resource you should use, refer to Section 36.2.2, “Determine your use-case”.

For use-cases where you wish to deploy the K8s SUC plans from a third-party GitOps tool, refer to Section 36.2.5.4.3, “SUC Plan deployment - third-party GitOps workflow”.

36.2.5.4.1 SUC plan deployment - GitRepo resource

A GitRepo resource that ships the needed K8s SUC plans can be deployed in one of the following ways:

Through the Rancher UI - Section 36.2.5.4.1.1, “GitRepo creation - Rancher UI” (when Rancher is available).

By manually deploying (Section 36.2.5.4.1.2, “GitRepo creation - manual”) the resource to your management cluster.

Once deployed, to monitor the Kubernetes upgrade process of the nodes of your targeted cluster, refer to Section 21.3, “Monitoring System Upgrade Controller Plans”.

36.2.5.4.1.1 GitRepo creation - Rancher UI

To create a GitRepo resource through the Rancher UI, follow the official Rancher documentation.

The Edge team maintains ready-to-use fleets for both the RKE2 and K3s Kubernetes distributions. Depending on your environment, these fleets could be used directly or as a template.

For use-cases where no custom changes need to be included in the SUC plans that these fleets ship, users can directly refer to the fleets from the suse-edge/fleet-examples repository.

In cases where custom changes are needed (e.g. to add custom tolerations), users should refer to the fleets from a separate repository, allowing them to add the changes to the SUC plans as required.

Configuration examples for a GitRepo resource using the fleets from the suse-edge/fleet-examples repository can be found in gitrepos/day2/rke2-upgrade-gitrepo.yaml (RKE2) and gitrepos/day2/k3s-upgrade-gitrepo.yaml (K3s) of that repository.

36.2.5.4.1.2 GitRepo creation - manual

Pull the GitRepo resource:

For RKE2 clusters:

```shell
curl -o rke2-upgrade-gitrepo.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/refs/tags/release-3.5.0/gitrepos/day2/rke2-upgrade-gitrepo.yaml
```

For K3s clusters:

```shell
curl -o k3s-upgrade-gitrepo.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/refs/tags/release-3.5.0/gitrepos/day2/k3s-upgrade-gitrepo.yaml
```

Edit the GitRepo configuration:

Remove the spec.targets section - only needed for downstream clusters.

For RKE2:

```shell
# Example using sed
sed -i.bak '/^  targets:/,$d' rke2-upgrade-gitrepo.yaml && rm -f rke2-upgrade-gitrepo.yaml.bak

# Example using yq (v4+)
yq eval 'del(.spec.targets)' -i rke2-upgrade-gitrepo.yaml
```

For K3s:

```shell
# Example using sed
sed -i.bak '/^  targets:/,$d' k3s-upgrade-gitrepo.yaml && rm -f k3s-upgrade-gitrepo.yaml.bak

# Example using yq (v4+)
yq eval 'del(.spec.targets)' -i k3s-upgrade-gitrepo.yaml
```

Point the namespace of the GitRepo to the fleet-local namespace - done in order to deploy the resource on the management cluster.

For RKE2:

```shell
# Example using sed
sed -i.bak 's/namespace: fleet-default/namespace: fleet-local/' rke2-upgrade-gitrepo.yaml && rm -f rke2-upgrade-gitrepo.yaml.bak

# Example using yq (v4+)
yq eval '.metadata.namespace = "fleet-local"' -i rke2-upgrade-gitrepo.yaml
```

For K3s:

```shell
# Example using sed
sed -i.bak 's/namespace: fleet-default/namespace: fleet-local/' k3s-upgrade-gitrepo.yaml && rm -f k3s-upgrade-gitrepo.yaml.bak

# Example using yq (v4+)
yq eval '.metadata.namespace = "fleet-local"' -i k3s-upgrade-gitrepo.yaml
```

Apply the GitRepo resources to your management cluster:

```shell
# RKE2
kubectl apply -f rke2-upgrade-gitrepo.yaml

# K3s
kubectl apply -f k3s-upgrade-gitrepo.yaml
```

View the created GitRepo resource under the fleet-local namespace:

```shell
# RKE2
kubectl get gitrepo rke2-upgrade -n fleet-local

# K3s
kubectl get gitrepo k3s-upgrade -n fleet-local

# Example output
NAME           REPO                                              COMMIT        BUNDLEDEPLOYMENTS-READY   STATUS
k3s-upgrade    https://github.com/suse-edge/fleet-examples.git   fleet-local   0/0
rke2-upgrade   https://github.com/suse-edge/fleet-examples.git   fleet-local   0/0
```
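Which of the two files to use depends on the Kubernetes distribution of the management cluster. One way to tell is the kubelet version suffix, which is +rke2r&lt;N&gt; for RKE2 and +k3s&lt;N&gt; for K3s (for example v1.32.4+rke2r1). The sketch below assumes that naming; k8s_distro_from_version is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: infer the distribution from a kubelet version string,
# assuming RKE2 versions carry a "+rke2r<N>" suffix and K3s versions a
# "+k3s<N>" suffix.
k8s_distro_from_version() {
  case "$1" in
    *+rke2*) echo "rke2" ;;
    *+k3s*)  echo "k3s" ;;
    *) echo "unknown Kubernetes distribution: $1" >&2; return 1 ;;
  esac
}
```

For example: `dist=$(k8s_distro_from_version "$(kubectl get nodes -o jsonpath='{.items[0].status.nodeInfo.kubeletVersion}')") && kubectl apply -f "${dist}-upgrade-gitrepo.yaml"`.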

36.2.5.4.2 SUC plan deployment - Bundle resource

A Bundle resource that ships the needed Kubernetes upgrade SUC Plans can be deployed in one of the following ways:

Through the Rancher UI - Section 36.2.5.4.2.1, “Bundle creation - Rancher UI” (when Rancher is available).

By manually deploying (Section 36.2.5.4.2.2, “Bundle creation - manual”) the resource to your management cluster.

Once deployed, to monitor the Kubernetes upgrade process of the nodes of your targeted cluster, refer to Section 21.3, “Monitoring System Upgrade Controller Plans”.

36.2.5.4.2.1 Bundle creation - Rancher UI

The Edge team maintains ready-to-use bundles for both the RKE2 and K3s Kubernetes distributions. Depending on your environment, these bundles could be used directly or as a template.

To create a bundle through Rancher’s UI:

In the upper left corner, click ☰ → Continuous Delivery

Go to Advanced > Bundles

Select Create from YAML

From here you can create the Bundle in one of the following ways:

Note: There might be use-cases where you would need to include custom changes to the SUC plans that the bundle ships (e.g. to add custom tolerations). Make sure to include those changes in the bundle that will be generated by the steps below.

By manually copying the bundle content for RKE2 or K3s from suse-edge/fleet-examples to the Create from YAML page.

By cloning the suse-edge/fleet-examples repository from the desired release tag and selecting the Read from File option in the Create from YAML page. From there, navigate to the bundle that you need (bundles/day2/system-upgrade-controller-plans/rke2-upgrade/plan-bundle.yaml for RKE2 and bundles/day2/system-upgrade-controller-plans/k3s-upgrade/plan-bundle.yaml for K3s). This will auto-populate the Create from YAML page with the bundle content.

Edit the Bundle in the Rancher UI:

Change the namespace of the Bundle to point to the fleet-local namespace.

```yaml
# Example
kind: Bundle
apiVersion: fleet.cattle.io/v1alpha1
metadata:
  name: rke2-upgrade
  namespace: fleet-local
...
```

Change the target clusters for the Bundle to point to your local (management) cluster:

```yaml
spec:
  targets:
  - clusterName: local
```

Note: There are some use-cases where your local cluster could have a different name. To retrieve your local cluster name, execute the command below:

```shell
kubectl get clusters.fleet.cattle.io -n fleet-local
```

Select Create

36.2.5.4.2.2 Bundle creation - manual #

Pull the Bundle resources:

For RKE2 clusters:

curl -o rke2-plan-bundle.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/refs/tags/release-3.5.0/bundles/day2/system-upgrade-controller-plans/rke2-upgrade/plan-bundle.yaml

For K3s clusters:

curl -o k3s-plan-bundle.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/refs/tags/release-3.5.0/bundles/day2/system-upgrade-controller-plans/k3s-upgrade/plan-bundle.yaml

Edit the Bundle configuration:

Change the target clusters for the Bundle to point to your local (management) cluster:

    spec:
      targets:
      - clusterName: local

Note: There are some use-cases where your local cluster could have a different name.

To retrieve your local cluster name, execute the command below:

kubectl get clusters.fleet.cattle.io -n fleet-local

Change the namespace of the Bundle to point to the fleet-local namespace:

    # Example
    kind: Bundle
    apiVersion: fleet.cattle.io/v1alpha1
    metadata:
      name: rke2-upgrade
      namespace: fleet-local
    ...

Apply the Bundle resources to your management cluster:

    # For RKE2
    kubectl apply -f rke2-plan-bundle.yaml

    # For K3s
    kubectl apply -f k3s-plan-bundle.yaml

View the created Bundle resource under the fleet-local namespace:

    # For RKE2
    kubectl get bundles rke2-upgrade -n fleet-local

    # For K3s
    kubectl get bundles k3s-upgrade -n fleet-local

    # Example output
    NAME           BUNDLEDEPLOYMENTS-READY   STATUS
    k3s-upgrade    0/0
    rke2-upgrade   0/0
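The two curl commands in the pull step differ only in the distribution and the release tag. Below is a minimal sketch of a hypothetical helper that builds the raw GitHub URL. plan_bundle_url is an illustrative name and is not part of the Edge tooling. The sketch assumes that the bundles/day2/system-upgrade-controller-plans/<distro>-upgrade/plan-bundle.yaml layout used by the release-3.5.0 URLs also applies to the tag you choose:

```shell
# Hypothetical helper (not part of the Edge tooling): prints the raw GitHub URL
# of the SUC plan Bundle for a distribution and a fleet-examples release tag.
# Assumes the repository layout used by the release-3.5.0 URLs above.
plan_bundle_url() {
  local distro="$1" tag="$2"
  case "$distro" in
    rke2|k3s) ;;
    *) echo "unsupported distribution: $distro" >&2; return 1 ;;
  esac
  printf 'https://raw.githubusercontent.com/suse-edge/fleet-examples/refs/tags/%s/bundles/day2/system-upgrade-controller-plans/%s-upgrade/plan-bundle.yaml\n' \
    "$tag" "$distro"
}

# Example (needs internet access):
#   curl -o rke2-plan-bundle.yaml "$(plan_bundle_url rke2 release-3.5.0)"
```

The helper rejects anything other than rke2 or k3s. Without this check, a typo in the distribution name would produce a wrong URL, and curl -o would save the resulting error page instead of a Bundle.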

36.2.5.4.3 SUC Plan deployment - third-party GitOps workflow #

There might be use-cases where users would like to incorporate the Kubernetes upgrade SUC plans into their own third-party GitOps workflow (e.g. Flux).

To get the K8s upgrade resources that you need, first determine the Edge release tag of the suse-edge/fleet-examples repository that you would like to use.

After that, the resources can be found at:

For an RKE2 cluster upgrade:

For control-plane nodes - fleets/day2/system-upgrade-controller-plans/rke2-upgrade/plan-control-plane.yaml

For worker nodes - fleets/day2/system-upgrade-controller-plans/rke2-upgrade/plan-worker.yaml

For a K3s cluster upgrade:

For control-plane nodes - fleets/day2/system-upgrade-controller-plans/k3s-upgrade/plan-control-plane.yaml

For worker nodes - fleets/day2/system-upgrade-controller-plans/k3s-upgrade/plan-worker.yaml
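If your third-party GitOps repository should hold copies of these Plans, you can script the copy step. Below is a sketch of a hypothetical helper. It assumes you have a local clone of suse-edge/fleet-examples at your chosen release tag. The destination directory and the distribution prefix added to each file name are arbitrary choices, not an Edge convention:

```shell
# Hypothetical helper: copies the control-plane and worker SUC Plans for one
# distribution (rke2 or k3s) from a local fleet-examples clone into a directory
# tracked by your own GitOps tool. Paths follow the layout listed above.
copy_suc_plans() {
  local distro="$1" examples_dir="$2" dest_dir="$3" plan
  local src="$examples_dir/fleets/day2/system-upgrade-controller-plans/${distro}-upgrade"
  mkdir -p "$dest_dir" || return 1
  for plan in plan-control-plane.yaml plan-worker.yaml; do
    # Prefix with the distribution so RKE2 and K3s plans can share a directory
    cp "$src/$plan" "$dest_dir/${distro}-${plan}" || return 1
  done
}

# Example:
#   git clone -b release-3.5.0 https://github.com/suse-edge/fleet-examples.git
#   copy_suc_plans rke2 ./fleet-examples ./my-gitops-repo/suc-plans
```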

These Plan resources are interpreted by the System Upgrade Controller. Deploy them on the cluster that you wish to upgrade, which in this chapter is your management cluster. For SUC deployment information, see Section 21.2, “Installing the System Upgrade Controller”.

To better understand how your GitOps workflow can be used to deploy the SUC Plans for Kubernetes version upgrade, it can be beneficial to take a look at the overview (Section 36.2.5.2, “Overview”) of the update procedure using Fleet.

36.2.6 Helm chart upgrade #

This section covers the following parts:

Section 36.2.6.1, “Preparation for air-gapped environments” - holds information on how to ship Edge related OCI charts and images to your private registry.

Section 36.2.6.2, “Upgrade procedure” - holds information on different Helm chart upgrade use-cases and their upgrade procedure.

36.2.6.1 Preparation for air-gapped environments #

36.2.6.1.1 Ensure you have access to your Helm chart Fleet #

Depending on what your environment supports, you can take one of the following options:

Host your chart’s Fleet resources on a local Git server that is accessible by your management cluster.

Use Fleet’s CLI to convert a Helm chart into a Bundle that you can use directly and that does not need to be hosted anywhere. Fleet’s CLI can be retrieved from its release page; for Mac users there is a fleet-cli Homebrew formula.

36.2.6.1.2 Find the required assets for your Edge release version #

Go to the "Day 2" release page and find the Edge release that you want to upgrade your chart to and click Assets.

From the "Assets" section, download the following files:

| Release File | Description |
|---|---|
| edge-save-images.sh | Pulls the images specified in the edge-release-images.txt file and packages them inside of a '.tar.gz' archive. |
| edge-save-oci-artefacts.sh | Pulls the OCI chart images related to the specific Edge release and packages them inside of a '.tar.gz' archive. |
| edge-load-images.sh | Loads images from a '.tar.gz' archive, retags and pushes them to a private registry. |
| edge-load-oci-artefacts.sh | Takes a directory containing Edge OCI '.tgz' chart packages and loads them to a private registry. |
| edge-release-helm-oci-artefacts.txt | Contains a list of OCI chart images related to a specific Edge release. |
| edge-release-images.txt | Contains a list of images related to a specific Edge release. |
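Before you move on, you may want to confirm that you downloaded every file from the table. Below is a minimal pre-flight check, written as a hypothetical shell function. It only tests whether the file names listed above are present in a directory:

```shell
# Hypothetical pre-flight check: reports any release asset from the table
# above that is missing from a directory (current directory by default).
check_release_assets() {
  local dir="${1:-.}" missing=0 f
  for f in edge-save-images.sh edge-save-oci-artefacts.sh \
           edge-load-images.sh edge-load-oci-artefacts.sh \
           edge-release-helm-oci-artefacts.txt edge-release-images.txt; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $f" >&2
      missing=1
    fi
  done
  # Non-zero exit status when at least one file is missing
  return "$missing"
}

# Example:
#   check_release_assets ~/Downloads/edge-release && echo "all assets present"
```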

36.2.6.1.3 Create the Edge release images archive #

On a machine with internet access:

Make edge-save-images.sh executable:

chmod +x edge-save-images.sh

Generate the image archive:

./edge-save-images.sh --source-registry registry.suse.com

This will create a ready-to-load archive named edge-images.tar.gz.

Note: If the -i|--images option is specified, the name of the archive may differ.

Copy this archive to your air-gapped machine:

scp edge-images.tar.gz <user>@<machine_ip>:/path

36.2.6.1.4 Create the Edge OCI chart images archive #

On a machine with internet access:

Make edge-save-oci-artefacts.sh executable:

chmod +x edge-save-oci-artefacts.sh

Generate the OCI chart image archive:

./edge-save-oci-artefacts.sh --source-registry registry.suse.com

This will create an archive named oci-artefacts.tar.gz.

Note: If the -a|--archive option is specified, the name of the archive may differ.

Copy this archive to your air-gapped machine:

scp oci-artefacts.tar.gz <user>@<machine_ip>:/path

36.2.6.1.5 Load Edge release images to your air-gapped machine #

On your air-gapped machine:

Log into your private registry (if required):

podman login <REGISTRY.YOURDOMAIN.COM:PORT>

Make edge-load-images.sh executable:

chmod +x edge-load-images.sh

Execute the script, passing the previously copied edge-images.tar.gz archive:

./edge-load-images.sh --source-registry registry.suse.com --registry <REGISTRY.YOURDOMAIN.COM:PORT> --images edge-images.tar.gz

Note: This will load all images from edge-images.tar.gz, retag them, and push them to the registry specified under the --registry option.

36.2.6.1.6 Load the Edge OCI chart images to your air-gapped machine #

On your air-gapped machine:

Log into your private registry (if required):

podman login <REGISTRY.YOURDOMAIN.COM:PORT>

Make edge-load-oci-artefacts.sh executable:

chmod +x edge-load-oci-artefacts.sh

Untar the copied oci-artefacts.tar.gz archive:

tar -xvf oci-artefacts.tar.gz

This will produce a directory with the naming template edge-release-oci-tgz-<date>.

Pass this directory to the edge-load-oci-artefacts.sh script to load the Edge OCI chart images to your private registry:

Note: This script assumes the helm CLI has been pre-installed on your environment. For Helm installation instructions, see Installing Helm.

./edge-load-oci-artefacts.sh --archive-directory edge-release-oci-tgz-<date> --registry <REGISTRY.YOURDOMAIN.COM:PORT> --source-registry registry.suse.com
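The untarred directory name contains a date, so a small hypothetical helper can locate it for you. Below is a sketch that assumes exactly one edge-release-oci-tgz-* directory sits in the directory you pass. If it finds none or several, it fails instead of guessing, so an older extraction is not picked up by mistake:

```shell
# Hypothetical helper: prints the single edge-release-oci-tgz-<date> directory
# found directly under a base directory (current directory by default).
find_oci_dir() {
  local base="${1:-.}" matches count
  matches=$(find "$base" -maxdepth 1 -type d -name 'edge-release-oci-tgz-*' | sort)
  # Count non-empty lines; an empty result counts as zero matches
  count=$(printf '%s\n' "$matches" | grep -c .)
  if [ "$count" -ne 1 ]; then
    echo "expected one edge-release-oci-tgz-* directory in $base, found $count" >&2
    return 1
  fi
  printf '%s\n' "$matches"
}

# Example:
#   ./edge-load-oci-artefacts.sh --archive-directory "$(find_oci_dir .)" \
#     --registry <REGISTRY.YOURDOMAIN.COM:PORT> --source-registry registry.suse.com
```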

36.2.6.1.7 Configure your private registry in your Kubernetes distribution #

For RKE2, see Private Registry Configuration

For K3s, see Private Registry Configuration

36.2.6.2 Upgrade procedure #

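This section focuses on the following Helm upgrade procedure use-cases:

Section 36.2.6.2.1, “I have a new cluster and would like to deploy and manage an Edge Helm chart”

Section 36.2.6.2.2, “I would like to upgrade a Fleet managed Helm chart”

Section 36.2.6.2.3, “I would like to upgrade a Helm chart deployed via EIB”

Manually deployed Helm charts cannot be reliably upgraded. We suggest redeploying the Helm chart using the Section 36.2.6.2.1, “I have a new cluster and would like to deploy and manage an Edge Helm chart” method.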

36.2.6.2.1 I have a new cluster and would like to deploy and manage an Edge Helm chart #

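This section covers how to:

Prepare the fleet resources for your chart (Section 36.2.6.2.1.1, “Prepare the fleet resources for your chart”).

Deploy the fleet for your chart (Section 36.2.6.2.1.2, “Deploy the fleet for your chart”).

Manage the deployed Helm chart (Section 36.2.6.2.1.3, “Manage the deployed Helm chart”).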

36.2.6.2.1.1 Prepare the fleet resources for your chart #

Acquire the chart’s Fleet resources from the Edge release tag that you wish to use.

Navigate to the Helm chart fleet (fleets/day2/chart-templates/<chart>).

If you intend to use a GitOps workflow, copy the chart Fleet directory to the Git repository from where you will do GitOps.

Optionally, if the Helm chart requires configurations to its values, edit the .helm.values configuration inside the fleet.yaml file of the copied directory.

Optionally, there may be use-cases where you need to add additional resources to your chart’s fleet so that it can better fit your environment. For information on how to enhance your Fleet directory, see Git Repository Contents.
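You can script the copy step with a hypothetical shell function. Below is a sketch that assumes you have a local clone of suse-edge/fleet-examples at the desired release tag and a local checkout of your GitOps repository. For the longhorn chart, it copies the contents of fleets/day2/chart-templates/longhorn. This yields the longhorn and longhorn-crd directories used in the Longhorn example of this section:

```shell
# Hypothetical helper: copies the contents of a chart's Fleet template
# directory from a fleet-examples clone into your GitOps repository.
copy_chart_fleet() {
  local chart="$1" examples_dir="$2" repo_dir="$3"
  local src="$examples_dir/fleets/day2/chart-templates/$chart"
  [ -d "$src" ] || { echo "no chart template at $src" >&2; return 1; }
  mkdir -p "$repo_dir" || return 1
  # "$src/." copies the directory contents rather than the directory itself
  cp -R "$src/." "$repo_dir/"
}

# Example:
#   copy_chart_fleet longhorn ./fleet-examples ./my-gitops-repo
```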

In some cases, the default timeout Fleet uses for Helm operations may be insufficient, resulting in the following error:

failed pre-install: context deadline exceeded

In such cases, add the timeoutSeconds property under the helm configuration of your fleet.yaml file.

An example for the longhorn Helm chart would look like this:

User Git repository structure:

    <user_repository_root>
    ├── longhorn
    │   └── fleet.yaml
    └── longhorn-crd
        └── fleet.yaml

fleet.yaml content populated with user Longhorn data:

    defaultNamespace: longhorn-system

    helm:
      # timeoutSeconds: 10
      releaseName: "longhorn"
      chart: "longhorn"
      repo: "https://charts.rancher.io/"
      version: "1.10.1"
      takeOwnership: true
      # custom chart value overrides
      values:
        # Example for user provided custom values content
        defaultSettings:
          deletingConfirmationFlag: true

    # https://fleet.rancher.io/bundle-diffs
    diff:
      comparePatches:
      - apiVersion: apiextensions.k8s.io/v1
        kind: CustomResourceDefinition
        name: engineimages.longhorn.io
        operations:
        - {"op":"remove", "path":"/status/conditions"}
        - {"op":"remove", "path":"/status/storedVersions"}
        - {"op":"remove", "path":"/status/acceptedNames"}
      - apiVersion: apiextensions.k8s.io/v1
        kind: CustomResourceDefinition
        name: nodes.longhorn.io
        operations:
        - {"op":"remove", "path":"/status/conditions"}
        - {"op":"remove", "path":"/status/storedVersions"}
        - {"op":"remove", "path":"/status/acceptedNames"}
      - apiVersion: apiextensions.k8s.io/v1
        kind: CustomResourceDefinition
        name: volumes.longhorn.io
        operations:
        - {"op":"remove", "path":"/status/conditions"}
        - {"op":"remove", "path":"/status/storedVersions"}
        - {"op":"remove", "path":"/status/acceptedNames"}

Note: These are just example values that are used to illustrate custom configurations over the longhorn chart. They should NOT be treated as deployment guidelines for the longhorn chart.
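In the example above, timeoutSeconds is commented out. The hypothetical function below is a minimal sketch of where the property goes when you do need it. It writes a reduced fleet.yaml with timeoutSeconds set under helm. The 600-second default is an assumed value, not a recommendation. The other fields simply mirror the example:

```shell
# Hypothetical sketch: writes a reduced fleet.yaml for the longhorn chart with
# an explicit Helm timeout (seconds). Field values mirror the example above.
write_longhorn_fleet_yaml() {
  local out="$1" timeout="${2:-600}"
  cat > "$out" <<EOF
defaultNamespace: longhorn-system
helm:
  # Raise this if Helm operations fail with "context deadline exceeded"
  timeoutSeconds: ${timeout}
  releaseName: "longhorn"
  chart: "longhorn"
  repo: "https://charts.rancher.io/"
  version: "1.10.1"
  takeOwnership: true
EOF
}

# Example:
#   write_longhorn_fleet_yaml ./longhorn/fleet.yaml 900
```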

36.2.6.2.1.2 Deploy the fleet for your chart #

You can deploy the fleet for your chart by either using a GitRepo (Section 36.2.6.2.1.2.1, “GitRepo”) or Bundle (Section 36.2.6.2.1.2.2, “Bundle”).

While deploying your Fleet, if you get a Modified message, make sure to add a corresponding comparePatches entry to the Fleet’s diff section. For more information, see Generating Diffs to Ignore Modified GitRepos.

36.2.6.2.1.2.1 GitRepo #

Fleet’s GitRepo resource holds information on how to access your chart’s Fleet resources and to which clusters it needs to apply those resources.

The GitRepo resource can be deployed through the Rancher UI, or manually, by deploying the resource to the management cluster.
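For the manual route, you can render the GitRepo manifest from a few parameters and pipe it to kubectl. Below is a minimal sketch with a hypothetical render_gitrepo function. It uses the branch field rather than revision, and the repository URL in the usage comment is a placeholder:

```shell
# Hypothetical helper: prints a GitRepo manifest in the fleet-local namespace
# for a repository URL, a branch, and one or more paths inside the repository.
render_gitrepo() {
  local name="$1" repo_url="$2" branch="$3" p
  shift 3
  printf 'apiVersion: fleet.cattle.io/v1alpha1\nkind: GitRepo\n'
  printf 'metadata:\n  name: %s\n  namespace: fleet-local\n' "$name"
  printf 'spec:\n  repo: %s\n  branch: %s\n  paths:\n' "$repo_url" "$branch"
  # Each remaining argument becomes one entry under spec.paths
  for p in "$@"; do
    printf '  - %s\n' "$p"
  done
}

# Example (requires access to the management cluster):
#   render_gitrepo longhorn-git-repo https://git.example.com/edge.git main \
#     longhorn longhorn-crd | kubectl apply -f -
```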

Example Longhorn GitRepo resource for manual deployment:

    apiVersion: fleet.cattle.io/v1alpha1
    kind: GitRepo
    metadata:
      name: longhorn-git-repo
      namespace: fleet-local
    spec:
      # If using a tag
      # revision: user_repository_tag
      #
      # If using a branch
      # branch: user_repository_branch
      paths:
      # As seen in the 'Prepare your Fleet resources' example
      - longhorn
      - longhorn-crd
      repo: user_repository_url

36.2.6.2.1.2.2 Bundle #

Bundle resources hold the raw Kubernetes resources that need to be deployed by Fleet. The GitRepo approach is generally recommended. For use-cases where the environment is air-gapped and cannot support a local Git server, Bundles can help you propagate your Helm chart Fleet to your target clusters.

A Bundle can be deployed either through the Rancher UI (Continuous Delivery → Advanced → Bundles → Create from YAML) or by manually deploying the Bundle resource in the correct Fleet namespace. For information about Fleet namespaces, see the upstream documentation.

Bundles for Edge Helm charts can be created by utilizing Fleet’s Convert a Helm Chart into a Bundle approach.

Below you can find an example of how to create a Bundle resource from the longhorn and longhorn-crd Helm chart fleet templates and manually deploy this bundle to your management cluster.

To illustrate the workflow, the below example uses the suse-edge/fleet-examples directory structure.

Navigate to the longhorn Chart fleet template:

cd fleets/day2/chart-templates/longhorn/longhorn

Create a targets.yaml file that tells Fleet which clusters it should deploy the Helm chart to:

    cat > targets.yaml <<EOF
    targets:
    # Match your local (management) cluster
    - clusterName: local
    EOF

Note: There are some use-cases where your local cluster could have a different name.

To retrieve your local cluster name, execute the command below:

kubectl get clusters.fleet.cattle.io -n fleet-local

Convert the Longhorn Helm chart Fleet to a Bundle resource using the fleet-cli.

Note: Fleet’s CLI can be retrieved from its release Assets page (fleet-linux-amd64). For Mac users there is a fleet-cli Homebrew formula.

fleet apply --compress --targets-file=targets.yaml -n fleet-local -o - longhorn-bundle > longhorn-bundle.yaml

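Navigate to the longhorn-crd Chart fleet template. As in the previous navigation step, the path is relative to the root of the fleet-examples repository: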

cd fleets/day2/chart-templates/longhorn/longhorn-crd

Create a targets.yaml file that tells Fleet which clusters it should deploy the Helm chart to:

    cat > targets.yaml <<EOF
    targets:
    # Match your local (management) cluster
    - clusterName: local
    EOF

Convert the Longhorn CRD Helm chart Fleet to a Bundle resource using the fleet-cli.

fleet apply --compress --targets-file=targets.yaml -n fleet-local -o - longhorn-crd-bundle > longhorn-crd-bundle.yaml

Deploy the longhorn-bundle.yaml and longhorn-crd-bundle.yaml files to your management cluster:

    kubectl apply -f longhorn-crd-bundle.yaml
    kubectl apply -f longhorn-bundle.yaml

Following these steps will ensure that SUSE Storage is deployed on the specified management cluster.
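The targets.yaml and fleet apply steps are the same for both charts, so you can wrap them in a loop. This is a hypothetical sketch. It assumes you run it from the root of a suse-edge/fleet-examples checkout with the fleet CLI on your PATH. Unlike the steps above, it writes both Bundle files to the directory you run it from:

```shell
# Hypothetical helper: writes targets.yaml into a directory. The cluster name
# defaults to "local"; pass another name if
# `kubectl get clusters.fleet.cattle.io -n fleet-local` reports one.
write_targets() {
  local dir="$1" cluster="${2:-local}"
  cat > "$dir/targets.yaml" <<EOF
targets:
# Match your local (management) cluster
- clusterName: ${cluster}
EOF
}

# Hypothetical wrapper: converts the longhorn and longhorn-crd fleets into
# Bundles, using the same fleet apply invocation as the steps above.
build_longhorn_bundles() {
  local base=fleets/day2/chart-templates/longhorn chart
  for chart in longhorn longhorn-crd; do
    write_targets "$base/$chart" "${1:-local}" || return 1
    (cd "$base/$chart" && fleet apply --compress --targets-file=targets.yaml \
      -n fleet-local -o - "${chart}-bundle") > "${chart}-bundle.yaml" || return 1
  done
}

# Example:
#   build_longhorn_bundles && kubectl apply -f longhorn-crd-bundle.yaml -f longhorn-bundle.yaml
```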

36.2.6.2.1.3 Manage the deployed Helm chart #

Once deployed with Fleet, for Helm chart upgrades, see Section 36.2.6.2.2, “I would like to upgrade a Fleet managed Helm chart”.

36.2.6.2.2 I would like to upgrade a Fleet managed Helm chart #

Determine the version to which you need to upgrade your chart so that it is compatible with the desired Edge release. Helm chart version per Edge release can be viewed from the release notes (Section 53.1, “Abstract”).

In your Fleet-monitored Git repository, edit the Helm chart’s fleet.yaml file with the correct chart version and repository from the release notes (Section 53.1, “Abstract”).

Commit and push the changes to your repository. This will trigger an upgrade of the desired Helm chart.
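You can script the version edit before you commit. The hypothetical function below is a minimal sketch. It assumes the fleet.yaml has a single version: key (the one under helm, as in the Longhorn example; apiVersion lines do not match) and refuses to touch the file otherwise. It does not update the repo field, so check the release notes for that separately:

```shell
# Hypothetical helper: replaces the value of the single "version:" key in a
# fleet.yaml, keeping the key's indentation. Refuses to run on zero or
# multiple matches so it never edits the wrong key.
set_chart_version() {
  local file="$1" version="$2" tmp rc=0
  [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }
  if [ "$(grep -cE '^[[:space:]]*version:' "$file")" -ne 1 ]; then
    echo "expected exactly one version: key in $file" >&2
    return 1
  fi
  tmp=$(mktemp) || return 1
  # Write through a temp file, then copy back to preserve file permissions
  sed -E "s/^([[:space:]]*version:).*/\1 \"${version}\"/" "$file" > "$tmp" &&
    cat "$tmp" > "$file" || rc=1
  rm -f "$tmp"
  return "$rc"
}

# Example:
#   set_chart_version longhorn/fleet.yaml 1.10.1 && git diff longhorn/fleet.yaml
```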

36.2.6.2.3 I would like to upgrade a Helm chart deployed via EIB #

Chapter 11, Edge Image Builder deploys Helm charts by creating a HelmChart resource and utilizing the helm-controller introduced by the RKE2/K3s Helm integration feature.

To ensure that a Helm chart deployed via EIB is successfully upgraded, users need to upgrade the respective HelmChart resources.

Below you can find information on:

The general overview (Section 36.2.6.2.3.1, “Overview”) of the upgrade process.

The necessary upgrade steps (Section 36.2.6.2.3.2, “Upgrade Steps”).

An example (Section 36.2.6.2.3.3, “Example”) showcasing a Longhorn chart upgrade using the explained method.

How to use the upgrade process with a different GitOps tool (Section 36.2.6.2.3.4, “Helm chart upgrade using a third-party GitOps tool”).

36.2.6.2.3.1 Overview #

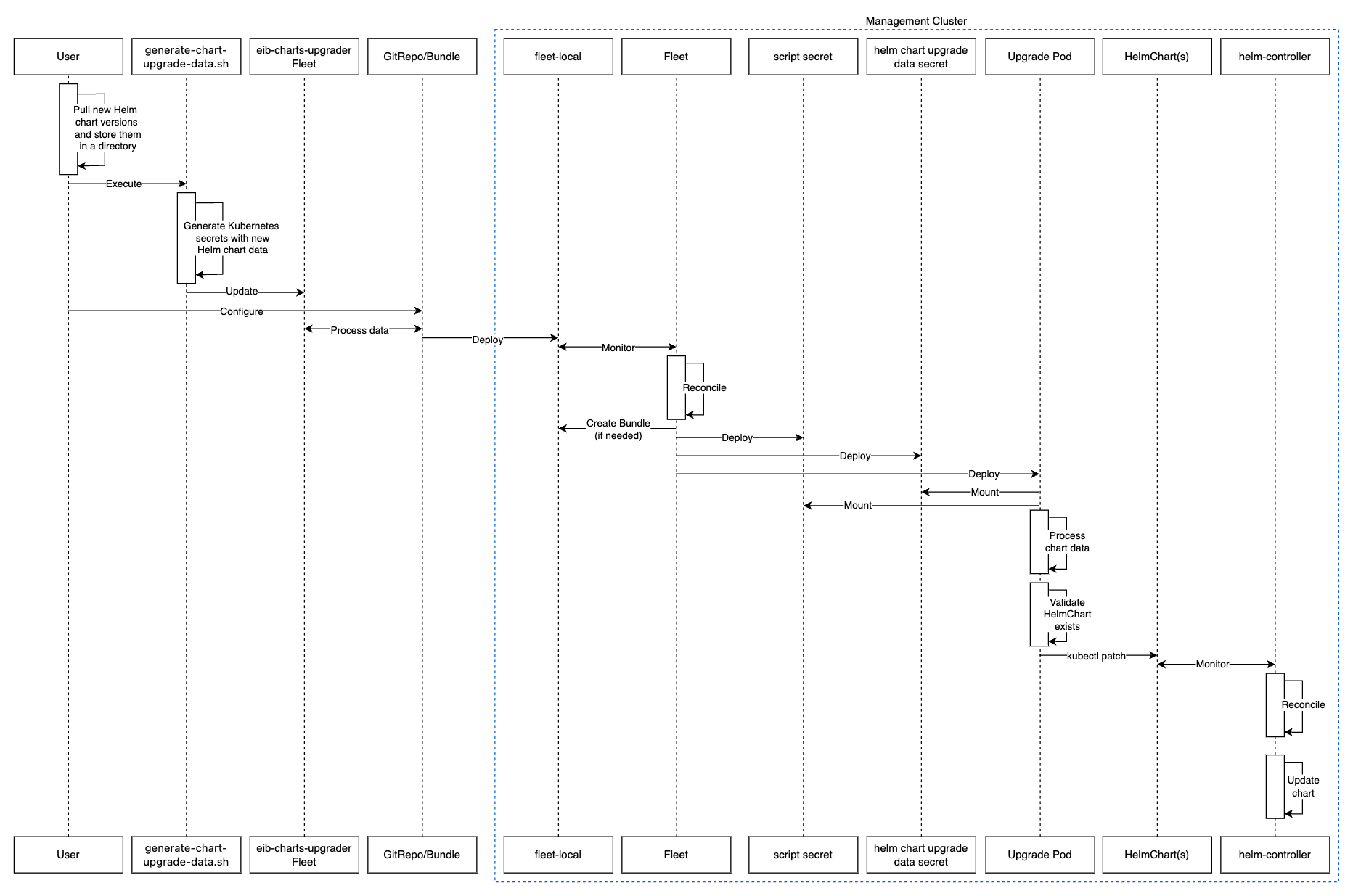

Helm charts that are deployed via EIB are upgraded through a fleet called eib-charts-upgrader.

This fleet processes user-provided data to update a specific set of HelmChart resources.

Updating these resources triggers the helm-controller, which upgrades the Helm charts associated with the modified HelmChart resources.

The user is only expected to:

Locally pull the archives for each Helm chart that needs to be upgraded.

Pass these archives to the generate-chart-upgrade-data.sh script, which will include the data from these archives in the eib-charts-upgrader fleet.

Deploy the eib-charts-upgrader fleet to their management cluster. This is done through either a GitRepo or Bundle resource.

Once deployed, the eib-charts-upgrader, with the help of Fleet, will ship its resources to the desired management cluster.

These resources include:

A set of Secrets holding the user-provided Helm chart data.

A Kubernetes Job, which will deploy a Pod that mounts the previously mentioned Secrets and, based on them, patches the corresponding HelmChart resources.

As mentioned previously, this will trigger the helm-controller, which will perform the actual Helm chart upgrade.

The diagram at the beginning of this section illustrates the process described above.

36.2.6.2.3.2 Upgrade Steps #

Clone the suse-edge/fleet-examples repository from the correct release tag.

Create a directory in which you will store the pulled Helm chart archive(s).

mkdir archives

Inside of the newly created archive directory, pull the archive(s) for the Helm chart(s) you wish to upgrade:

    cd archives
    helm pull [chart URL | repo/chartname]

    # Alternatively if you want to pull a specific version:
    # helm pull [chart URL | repo/chartname] --version 0.0.0

From Assets of the desired release tag, download the generate-chart-upgrade-data.sh script.

Execute the generate-chart-upgrade-data.sh script:

    chmod +x ./generate-chart-upgrade-data.sh
    ./generate-chart-upgrade-data.sh --archive-dir /foo/bar/archives/ --fleet-path /foo/bar/fleet-examples/fleets/day2/eib-charts-upgrader

For each chart archive in the --archive-dir directory, the script generates a Kubernetes Secret YAML file containing the chart upgrade data and stores it in the base/secrets directory of the fleet specified by --fleet-path.

The generate-chart-upgrade-data.sh script also applies additional modifications to the fleet to ensure the generated Kubernetes Secret YAML files are correctly utilized by the workload deployed by the fleet.

Important: Users should not make any changes to the files that the generate-chart-upgrade-data.sh script generates.
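To see which Secret files the script added, you can compare base/secrets against the two files the fleet ships with. Per the directory listing in Section 36.2.6.2.3.3, “Example”, these are eib-charts-upgrader-script.yaml and kustomization.yaml. Below is a hypothetical read-only check. It only lists file names and does not modify anything the script generated:

```shell
# Hypothetical read-only check: lists YAML files in <fleet>/base/secrets other
# than the two that ship with the eib-charts-upgrader fleet. Returns non-zero
# when no generated Secret files are found.
list_generated_secrets() {
  local secrets_dir="$1/base/secrets" f name found=0
  for f in "$secrets_dir"/*.yaml; do
    [ -e "$f" ] || continue   # the glob matched nothing
    name=$(basename "$f")
    case "$name" in
      eib-charts-upgrader-script.yaml|kustomization.yaml) continue ;;
    esac
    echo "$name"
    found=1
  done
  [ "$found" -eq 1 ]
}

# Example:
#   list_generated_secrets /foo/bar/fleet-examples/fleets/day2/eib-charts-upgrader
```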

The steps below depend on the environment that you are running:

For an environment that supports GitOps (e.g. is non air-gapped, or is air-gapped, but allows for local Git server support):

Copy the fleets/day2/eib-charts-upgrader Fleet to the repository that you will use for GitOps.

Note: Make sure that the Fleet includes the changes that have been made by the generate-chart-upgrade-data.sh script.

Configure a GitRepo resource that will be used to ship all the resources of the eib-charts-upgrader Fleet.

For GitRepo configuration and deployment through the Rancher UI, see Accessing Fleet in the Rancher UI.

For GitRepo manual configuration and deployment, see Creating a Deployment.

For an environment that does not support GitOps (e.g. is air-gapped and does not allow local Git server usage):

Download the fleet-cli binary from the rancher/fleet release page (fleet-linux-amd64 for Linux). For Mac users, there is a Homebrew formula that can be used: fleet-cli.

Navigate to the eib-charts-upgrader Fleet:

cd /foo/bar/fleet-examples/fleets/day2/eib-charts-upgrader

Create a targets.yaml file that will instruct Fleet where to deploy your resources:

    cat > targets.yaml <<EOF
    targets:
    # To map the local(management) cluster
    - clusterName: local
    EOF

Note: There are some use-cases where your local cluster could have a different name.

To retrieve your local cluster name, execute the command below:

kubectl get clusters.fleet.cattle.io -n fleet-local

Use the fleet-cli to convert the Fleet to a Bundle resource:

fleet apply --compress --targets-file=targets.yaml -n fleet-local -o - eib-charts-upgrade > bundle.yaml

This will create a Bundle (bundle.yaml) that will hold all the templated resources from the eib-charts-upgrader Fleet.

For more information regarding the fleet apply command, see fleet apply.

For more information regarding converting Fleets to Bundles, see Convert a Helm Chart into a Bundle.

Deploy the Bundle. This can be done in one of two ways:

Through Rancher’s UI - Navigate to Continuous Delivery → Advanced → Bundles → Create from YAML and either paste the bundle.yaml contents, or click the Read from File option and pass the file itself.

Manually - Deploy the bundle.yaml file manually inside of your management cluster.

Executing these steps will result in a successfully deployed GitRepo/Bundle resource. The resource will be picked up by Fleet and its contents will be deployed onto the target clusters that the user has specified in the previous steps. For an overview of the process, refer to Section 36.2.6.2.3.1, “Overview”.

For information on how to track the upgrade process, you can refer to Section 36.2.6.2.3.3, “Example”.

Once the chart upgrade has been successfully verified, remove the Bundle/GitRepo resource.

This will remove the no longer necessary upgrade resources from your management cluster, ensuring that no future version clashes might occur.

36.2.6.2.3.3 Example #

The example below demonstrates how to upgrade a Helm chart deployed via EIB from one version to another on a management cluster. Note that the versions used in this example are not recommendations. For version recommendations specific to an Edge release, refer to the release notes (Section 53.1, “Abstract”).

Use-case:

A management cluster is running an older version of Longhorn.

The cluster has been deployed through EIB, using the following image definition snippet:

    kubernetes:
      helm:
        charts:
          - name: longhorn-crd
            repositoryName: rancher-charts
            targetNamespace: longhorn-system
            createNamespace: true
            version: 104.2.0+up1.7.1
            installationNamespace: kube-system
          - name: longhorn
            repositoryName: rancher-charts
            targetNamespace: longhorn-system
            createNamespace: true
            version: 104.2.0+up1.7.1
            installationNamespace: kube-system
        repositories:
          - name: rancher-charts
            url: https://charts.rancher.io/
    ...

SUSE Storage needs to be upgraded to a version that is compatible with the Edge 3.5 release, meaning it needs to be upgraded to 1.10.1.

It is assumed that the management cluster is air-gapped, without support for a local Git server, and has a working Rancher setup.

Follow the Upgrade Steps (Section 36.2.6.2.3.2, “Upgrade Steps”):

Clone the suse-edge/fleet-examples repository from the release-3.5.0 tag.

git clone -b release-3.5.0 https://github.com/suse-edge/fleet-examples.git

Create a directory where the Longhorn upgrade archive will be stored.

mkdir archives

Pull the desired Longhorn chart archive version:

    # First add the Rancher Helm chart repository
    helm repo add rancher-charts https://charts.rancher.io/

    # Pull the Longhorn 1.10.1 chart archive
    helm pull oci://dp.apps.rancher.io/charts/suse-storage --version 1.10.1

Outside of the archives directory, download the generate-chart-upgrade-data.sh script from the suse-edge/fleet-examples release tag.

Directory setup should look similar to:

    .
    ├── archives
    │   └── longhorn-1.10.1.tgz
    ├── fleet-examples
    ...
    │   ├── fleets
    │   │   ├── day2
    │   │   │   ├── ...
    │   │   │   ├── eib-charts-upgrader
    │   │   │   │   ├── base
    │   │   │   │   │   ├── job.yaml
    │   │   │   │   │   ├── kustomization.yaml
    │   │   │   │   │   ├── patches
    │   │   │   │   │   │   └── job-patch.yaml
    │   │   │   │   │   ├── rbac
    │   │   │   │   │   │   ├── cluster-role-binding.yaml
    │   │   │   │   │   │   ├── cluster-role.yaml
    │   │   │   │   │   │   ├── kustomization.yaml
    │   │   │   │   │   │   └── sa.yaml
    │   │   │   │   │   └── secrets
    │   │   │   │   │       ├── eib-charts-upgrader-script.yaml
    │   │   │   │   │       └── kustomization.yaml
    │   │   │   │   ├── fleet.yaml
    │   │   │   │   └── kustomization.yaml
    │   │   │   └── ...
    │   └── ...
    └── generate-chart-upgrade-data.sh

Execute the generate-chart-upgrade-data.sh script:

    # First make the script executable
    chmod +x ./generate-chart-upgrade-data.sh

    # Then execute the script
    ./generate-chart-upgrade-data.sh --archive-dir ./archives --fleet-path ./fleet-examples/fleets/day2/eib-charts-upgrader

The directory structure after the script execution should look similar to:

    .
    ├── archives
    │   └── longhorn-1.10.1.tgz
    ├── fleet-examples
    ...
    │   ├── fleets
    │   │   ├── day2
    │   │   │   ├── ...
    │   │   │   ├── eib-charts-upgrader
    │   │   │   │   ├── base
    │   │   │   │   │   ├── job.yaml
    │   │   │   │   │   ├── kustomization.yaml
    │   │   │   │   │   ├── patches
    │   │   │   │   │   │   └── job-patch.yaml
    │   │   │   │   │   ├── rbac
    │   │   │   │   │   │   ├── cluster-role-binding.yaml
    │   │   │   │   │   │   ├── cluster-role.yaml
    │   │   │   │   │   │   ├── kustomization.yaml
    │   │   │   │   │   │   └── sa.yaml
    │   │   │   │   │   └── secrets
    │   │   │   │   │       ├── eib-charts-upgrader-script.yaml
    │   │   │   │   │       ├── kustomization.yaml
    │   │   │   │   │       ├── longhorn-VERSION.yaml - secret created by the generate-chart-upgrade-data.sh script
    │   │   │   │   │       └── longhorn-crd-VERSION.yaml - secret created by the generate-chart-upgrade-data.sh script
    │   │   │   │   ├── fleet.yaml
    │   │   │   │   └── kustomization.yaml
    │   │   │   └── ...
    │   └── ...
    └── generate-chart-upgrade-data.sh

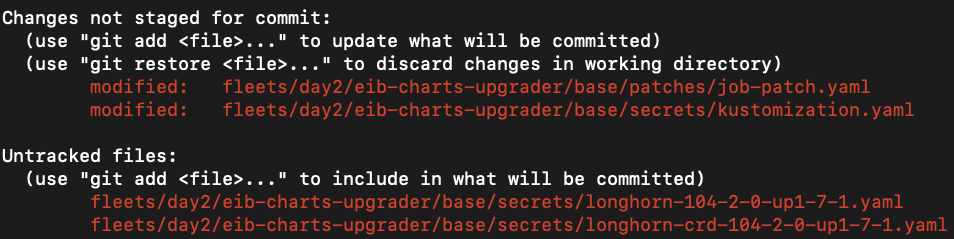

The files changed in git should look like this:

    Changes not staged for commit:
      (use "git add <file>..." to update what will be committed)
      (use "git restore <file>..." to discard changes in working directory)
            modified:   fleets/day2/eib-charts-upgrader/base/patches/job-patch.yaml
            modified:   fleets/day2/eib-charts-upgrader/base/secrets/kustomization.yaml

    Untracked files:
      (use "git add <file>..." to include in what will be committed)
            fleets/day2/eib-charts-upgrader/base/secrets/longhorn-VERSION.yaml
            fleets/day2/eib-charts-upgrader/base/secrets/longhorn-crd-VERSION.yaml

Create a Bundle for the eib-charts-upgrader Fleet:

First, navigate to the Fleet itself:

cd ./fleet-examples/fleets/day2/eib-charts-upgrader

Then create a targets.yaml file:

    cat > targets.yaml <<EOF
    targets:
    - clusterName: local
    EOF

Then use the fleet-cli binary to convert the Fleet to a Bundle:

fleet apply --compress --targets-file=targets.yaml -n fleet-local -o - eib-charts-upgrade > bundle.yaml

Deploy the Bundle through the Rancher UI:

Figure 36.1: Deploy Bundle through Rancher UI #

From here, select Read from File and find the bundle.yaml file on your system.

This will auto-populate the Bundle inside of Rancher’s UI.

Select Create.

After a successful deployment, your Bundle would look similar to:

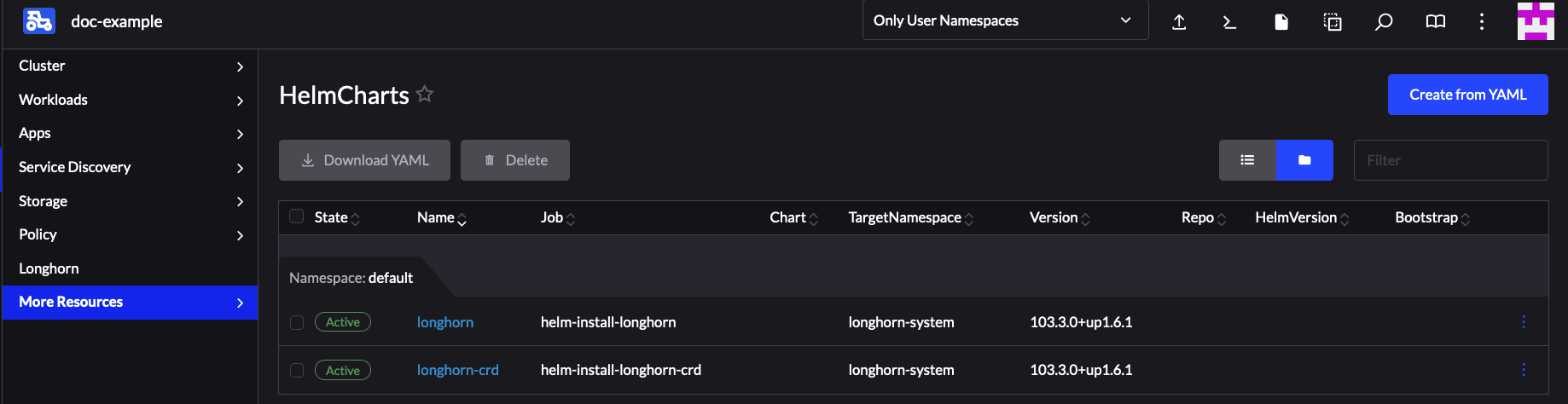

Figure 36.2: Successfully deployed Bundle #

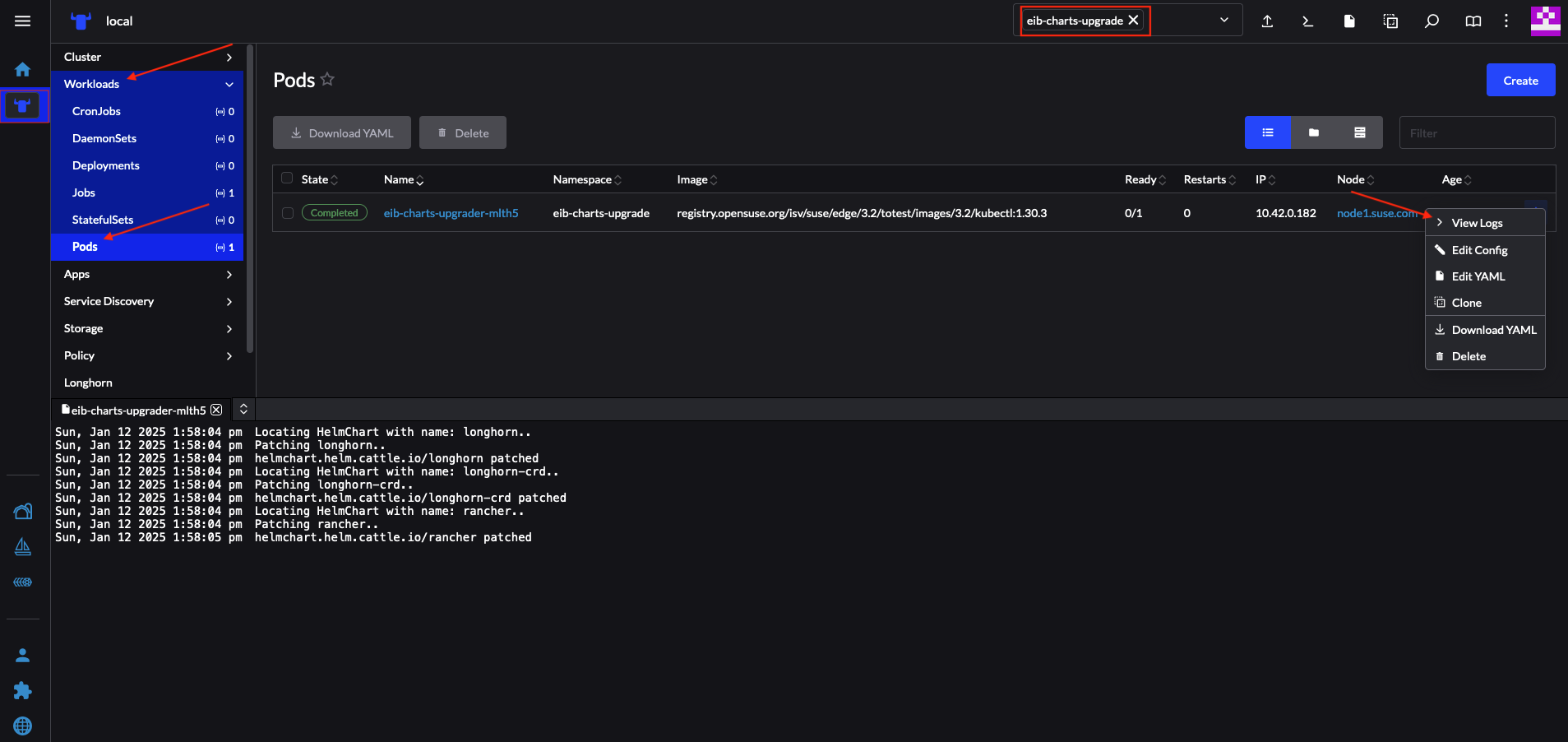

After the successful deployment of the Bundle, to monitor the upgrade process:

Verify the logs of the

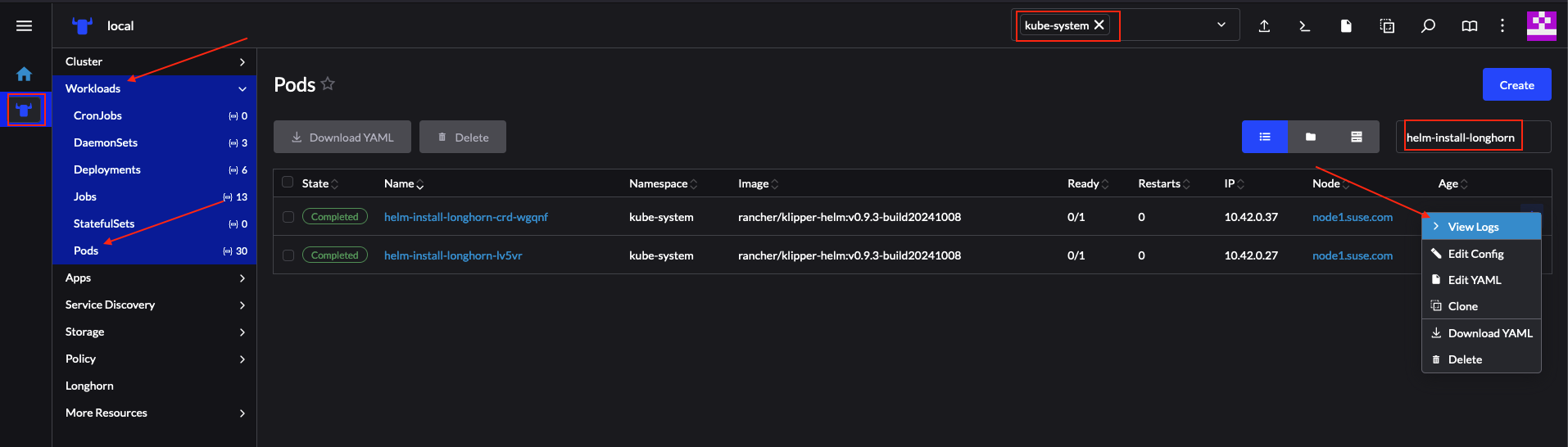

Upgrade Pod:Now verify the logs of the Pod created for the upgrade by the helm-controller:

The Pod name will follow the template helm-install-longhorn-<random-suffix>.

The Pod will be in the namespace where the HelmChart resource was deployed. In our case, this is kube-system.

Figure 36.3: Logs for successfully upgraded Longhorn chart #

Verify that the HelmChart version has been updated by navigating to Rancher’s HelmCharts section (More Resources → HelmCharts). Select the namespace where the chart was deployed; for this example, it would be kube-system.

Finally, check that the Longhorn Pods are running.

After making the above validations, it is safe to assume that the Longhorn Helm chart has been upgraded to the 1.10.1 version.
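If you prefer the command line to the Rancher UI for the log check, you can find the Pod by its name template. Below is a minimal sketch with a hypothetical filter function. It assumes the random suffix contains no hyphens, which prevents helm-install-longhorn-crd-<suffix> from matching the longhorn release:

```shell
# Hypothetical filter: reads Pod names from stdin and prints those matching
# helm-install-<release>-<suffix>, where the suffix has no hyphens.
find_helm_install_pod() {
  local release="$1"
  local pattern="^helm-install-${release}-[a-z0-9]+\$"
  grep -E "$pattern"
}

# Example (requires access to the management cluster):
#   pod=$(kubectl get pods -n kube-system --no-headers \
#           -o custom-columns=NAME:.metadata.name | find_helm_install_pod longhorn)
#   kubectl logs -n kube-system "$pod"
```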

36.2.6.2.3.4 Helm chart upgrade using a third-party GitOps tool #

There might be use-cases where users would like to use this upgrade procedure with a GitOps workflow other than Fleet (e.g. Flux).

To produce the resources needed for the upgrade procedure, you can use the generate-chart-upgrade-data.sh script to populate the eib-charts-upgrader Fleet with the user provided data. For more information on how to do this, see Section 36.2.6.2.3.2, “Upgrade Steps”.

After you have the full setup, you can use kustomize to generate a full working solution that you can deploy in your cluster:

    cd /foo/bar/fleets/day2/eib-charts-upgrader
    kustomize build .

If you want to include the solution in your GitOps workflow, you can remove the fleet.yaml file and use what is left as a valid Kustomize setup. First, run the generate-chart-upgrade-data.sh script, so that it can populate the Kustomize setup with the data for the Helm charts that you wish to upgrade to.
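Instead of deleting fleet.yaml from your fleet-examples working copy, you could export a copy of the fleet for the other GitOps tool. Below is a hypothetical sketch. It assumes generate-chart-upgrade-data.sh has already populated the source directory. The destination path in the usage comment is a placeholder:

```shell
# Hypothetical helper: copies a populated eib-charts-upgrader fleet to a new
# directory and removes fleet.yaml from the copy, leaving a plain Kustomize
# setup. The source directory is left untouched.
export_kustomize_setup() {
  local src="$1" dest="$2"
  [ -f "$src/kustomization.yaml" ] || { echo "no kustomization.yaml in $src" >&2; return 1; }
  mkdir -p "$dest" || return 1
  cp -R "$src/." "$dest/"
  rm -f "$dest/fleet.yaml"
}

# Example:
#   export_kustomize_setup /foo/bar/fleets/day2/eib-charts-upgrader ./flux/eib-charts-upgrader
#   kustomize build ./flux/eib-charts-upgrader
```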

To understand how this workflow is intended to be used, it can be beneficial to look at Section 36.2.6.2.3.1, “Overview” and Section 36.2.6.2.3.2, “Upgrade Steps”.