25 Downstream clusters

This section covers how to perform various Day 2 operations on different parts of your downstream clusters using your management cluster.

25.1 Introduction

This section is meant to be a starting point for the Day 2 operations documentation. You can find the following information:

The default components (Section 25.1.1, “Components”) used to achieve Day 2 operations over multiple downstream clusters.

Determining which Day 2 resources you should use for your specific use-case (Section 25.1.2, “Determine your use-case”).

The suggested workflow sequence (Section 25.1.3, “Day 2 workflow”) for Day 2 operations.

25.1.1 Components

Below you can find a description of the default components that should be set up on either your management cluster or your downstream clusters so that you can successfully perform Day 2 operations.

25.1.1.1 Rancher

For use-cases where you want to utilise Fleet (Chapter 6, Fleet) without Rancher, you can skip the Rancher component altogether.

Responsible for the management of your downstream clusters. Should be deployed on your management cluster.

For more information, see Chapter 4, Rancher.

25.1.1.2 Fleet

Responsible for multi-cluster resource deployment.

Typically offered by the Rancher component. For use-cases where Rancher is not used, Fleet can be deployed as a standalone component.

For more information on installing Fleet as a standalone component, see Fleet’s Installation Details.

For more information regarding the Fleet component, see Chapter 6, Fleet.

This documentation relies heavily on Fleet, and more specifically on the GitRepo and Bundle resources (more on this in Section 25.1.2, “Determine your use-case”), to establish a GitOps approach for automating the deployment of resources related to Day 2 operations.

For use-cases where a third-party GitOps tool is desired, see:

For OS package updates - Section 25.3.4.3, “SUC Plan deployment - third-party GitOps workflow”

For Kubernetes distribution upgrades - Section 25.4.4.3, “SUC Plan deployment - third-party GitOps workflow”

For Helm chart upgrades - retrieve the chart version supported by the desired Edge release from the Section A.1, “Abstract” page and populate the chart version and URL in your third-party GitOps tool

25.1.1.3 System-upgrade-controller (SUC)

The system-upgrade-controller (SUC) is responsible for executing tasks on specified nodes based on configuration data provided through a custom resource called a Plan. SUC should be located on each downstream cluster that requires a Day 2 operation.

For more information regarding SUC, see the upstream repository.

For information on how to deploy SUC on your downstream clusters, first determine your use-case (Section 25.1.2, “Determine your use-case”) and then refer to either Section 25.2.1.1, “SUC deployment using a GitRepo resource”, or Section 25.2.1.2, “SUC deployment using a Bundle resource” for SUC deployment information.

25.1.2 Determine your use-case

As mentioned previously, resources related to Day 2 operations are propagated to downstream clusters using Fleet’s GitRepo and Bundle resources.

Below you can find more information regarding what these resources do and for which Day 2 use-cases they should be used.

25.1.2.1 GitRepo

A GitRepo is a Fleet (Chapter 6, Fleet) resource that represents a Git repository from which Fleet can create Bundles. Each Bundle is created based on configuration paths defined inside the GitRepo resource. For more information, see the GitRepo documentation.

In terms of Day 2 operations, GitRepo resources are normally used to deploy SUC or SUC Plans in non-air-gapped environments that utilise a Fleet GitOps approach.

Alternatively, GitRepo resources can also be used to deploy SUC or SUC Plans in air-gapped environments, provided that you mirror your repository setup through a local Git server.
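For illustration only, a GitRepo that points Fleet at the SUC configuration in suse-edge/fleet-examples could look roughly like the manifest written below. This is a minimal sketch assuming the fleet.cattle.io/v1alpha1 GitRepo API; the resource name, the release-3.0.1 revision and the catch-all target are example values, and the GitRepo shipped in the repository under gitrepos/day2/ remains the authoritative version.

```shell
# Write an illustrative GitRepo manifest (example values, not the shipped file).
cat > example-suc-gitrepo.yaml <<'EOF'
apiVersion: fleet.cattle.io/v1alpha1
kind: GitRepo
metadata:
  name: system-upgrade-controller
  namespace: fleet-default
spec:
  # Git repository and release tag that Fleet creates Bundles from
  repo: https://github.com/suse-edge/fleet-examples.git
  revision: release-3.0.1
  # Configuration paths that Fleet turns into Bundles
  paths:
    - fleets/day2/system-upgrade-controller
  # Match every downstream cluster; narrow this in real deployments
  targets:
    - clusterSelector: {}
EOF
```

On the management cluster, the file would then be applied with kubectl apply -f example-suc-gitrepo.yaml.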

25.1.2.2 Bundle

Bundles hold raw Kubernetes resources that will be deployed on the targeted cluster. Usually they are created from a GitRepo resource, but there are use-cases where they can be deployed manually. For more information, refer to the Bundle documentation.

In terms of Day 2 operations, Bundle resources are normally used to deploy SUC or SUC Plans in air-gapped environments that do not use some form of local GitOps procedure (e.g. a local Git server).

Alternatively, if your use-case does not allow for a GitOps workflow (e.g. using a Git repository), Bundle resources could also be used to deploy SUC or SUC Plans in non-air-gapped environments.
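To show what "raw Kubernetes resources" means in practice, the sketch below writes a Bundle that carries a single ConfigMap. It is a hypothetical example assuming the fleet.cattle.io/v1alpha1 Bundle API, where each entry under spec.resources holds a file name and its content; the example-bundle and example-configmap names are made up, and the SUC Bundles from suse-edge/fleet-examples carry SUC resources instead.

```shell
# Write an illustrative Bundle that embeds one raw Kubernetes resource.
cat > example-bundle.yaml <<'EOF'
apiVersion: fleet.cattle.io/v1alpha1
kind: Bundle
metadata:
  name: example-bundle
  namespace: fleet-default
spec:
  # Raw resources that Fleet deploys as-is to the targeted clusters
  resources:
    - name: example-configmap.yaml
      content: |
        apiVersion: v1
        kind: ConfigMap
        metadata:
          name: example-configmap
          namespace: default
        data:
          hello: world
  # Match every downstream cluster; narrow this in real deployments
  targets:
    - clusterSelector: {}
EOF
```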

25.1.3 Day 2 workflow

The following is the Day 2 workflow that should be followed, in the order listed, when upgrading a downstream cluster to a specific Edge release.

OS package update (Section 25.3, “OS package update”)

Kubernetes version upgrade (Section 25.4, “Kubernetes version upgrade”)

Helm chart upgrade (Section 25.5, “Helm chart upgrade”)

25.2 System upgrade controller deployment guide

The system-upgrade-controller (SUC) is responsible for deploying resources on specific nodes of a cluster based on configurations defined in a custom resource called a Plan. For more information, see the upstream documentation.
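As a rough orientation, the sketch below writes a Plan containing the fields most relevant to this chapter: which nodes to target, how many at a time, which tolerations to apply, which Secret to mount, and which image to run. All names, the image, the command and the version are hypothetical placeholders, serviceAccountName is assumed to match the service account created by your SUC deployment, and the upstream documentation remains the reference for the full Plan schema.

```shell
# Write an illustrative SUC Plan manifest; every name and value is a placeholder.
cat > example-plan.yaml <<'EOF'
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: example-plan
  namespace: cattle-system
spec:
  # Process at most one matching node at a time
  concurrency: 1
  # Only nodes matching this selector receive a Pod for this Plan
  nodeSelector:
    matchExpressions:
      - {key: node-role.kubernetes.io/control-plane, operator: In, values: ["true"]}
  # Tolerations let the Plan Pods run on tainted nodes
  tolerations:
    - key: "node-role.kubernetes.io/control-plane"
      operator: "Equal"
      effect: "NoSchedule"
  # Assumption: must match the service account used by your SUC deployment
  serviceAccountName: system-upgrade-controller
  # Secret mounted into the Plan Pod at the given path
  secrets:
    - name: example-secret
      path: /host/run/example-secret
  # Container that SUC runs on each selected node
  upgrade:
    image: registry.example.com/example/upgrade-image
    command: ["sh"]
    args: ["/host/run/example-secret/example.sh"]
  # Version the Plan applies; typically used as the image tag (placeholder)
  version: "1.0.0"
EOF
```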

This section focuses solely on deploying the system-upgrade-controller. Plan resources should be deployed as described in the following sections:

OS package update (Section 25.3, “OS package update”)

Kubernetes version upgrade (Section 25.4, “Kubernetes version upgrade”)

Helm chart upgrade (Section 25.5, “Helm chart upgrade”)

25.2.1 Deployment

This section assumes that you are going to use Fleet (Chapter 6, Fleet) to orchestrate the SUC deployment. Users of a third-party GitOps workflow should see Section 25.2.1.3, “Deploying system-upgrade-controller when using a third-party GitOps workflow” for information on which resources they need to set up in their workflow.

To determine the resource to use, refer to Section 25.1.2, “Determine your use-case”.

25.2.1.1 SUC deployment using a GitRepo resource

This section covers how to create a GitRepo resource that will ship the resources needed for a successful SUC deployment to your target downstream clusters.

The Edge team maintains a ready-to-use GitRepo resource for SUC in each of our suse-edge/fleet-examples releases under gitrepos/day2/system-upgrade-controller-gitrepo.yaml.

If using the suse-edge/fleet-examples repository, make sure you are using the resources from a dedicated release tag.

GitRepo creation can be done in one of the following ways:

Through the Rancher UI (Section 25.2.1.1.1, “GitRepo deployment - Rancher UI”) (when Rancher is available)

By manually deploying (Section 25.2.1.1.2, “GitRepo creation - manual”) the resources to your management cluster

Once created, Fleet will be responsible for picking up the resource and deploying the SUC resources to all your target clusters. For information on how to track the deployment process, see Section 25.2.2.1, “Monitor SUC deployment”.

25.2.1.1.1 GitRepo deployment - Rancher UI

In the upper left corner, ☰ → Continuous Delivery

Go to Git Repos → Add Repository

If you use the suse-edge/fleet-examples repository:

Repository URL - https://github.com/suse-edge/fleet-examples.git

Watch → Revision - choose a release tag for the suse-edge/fleet-examples repository that you wish to use, e.g. release-3.0.1.

Under Paths, add the path to the system-upgrade-controller as seen in the release tag - fleets/day2/system-upgrade-controller

Select Next to move to the target configuration section

Only select clusters on which you wish to deploy the system-upgrade-controller. When you are satisfied with your configurations, click Create

Alternatively, if you decide to use your own repository to host these files, you would need to provide your repo data above.

25.2.1.1.2 GitRepo creation - manual

Choose the desired Edge release tag that you wish to deploy the SUC GitRepo from (referenced below as ${REVISION}).

Pull the GitRepo resource:

```shell
curl -o system-upgrade-controller-gitrepo.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/gitrepos/day2/system-upgrade-controller-gitrepo.yaml
```

Edit the GitRepo configuration: under spec.targets, specify your desired target list. By default, the GitRepo resources from suse-edge/fleet-examples are NOT mapped to any downstream clusters.

To match all clusters, change the default GitRepo target to:

```yaml
spec:
  targets:
  - clusterSelector: {}
```

Alternatively, if you want a more granular cluster selection, see Mapping to Downstream Clusters.

Apply the GitRepo resource to your management cluster:

```shell
kubectl apply -f system-upgrade-controller-gitrepo.yaml
```

View the created GitRepo resource under the fleet-default namespace:

```shell
kubectl get gitrepo system-upgrade-controller -n fleet-default

# Example output
NAME                        REPO                                              COMMIT          BUNDLEDEPLOYMENTS-READY   STATUS
system-upgrade-controller   https://github.com/suse-edge/fleet-examples.git   release-3.0.1   0/0
```
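As an illustration of a more granular cluster selection than the catch-all target, the fragment below defines two narrower targets. It is a sketch assuming Fleet's clusterSelector and clusterName target fields; the env: edge-prod label and the my-downstream-cluster name are hypothetical, and Fleet's Mapping to Downstream Clusters documentation describes all supported options.

```shell
# Write an illustrative spec.targets fragment with two narrower targets.
cat > example-targets.yaml <<'EOF'
spec:
  targets:
    # Every cluster labelled env=edge-prod (hypothetical label)
    - name: edge-prod
      clusterSelector:
        matchLabels:
          env: edge-prod
    # One specific cluster, selected by its Fleet cluster name (hypothetical)
    - name: single-cluster
      clusterName: my-downstream-cluster
EOF
```

The same spec.targets structure applies to Bundle resources.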

25.2.1.2 SUC deployment using a Bundle resource

This section covers how to create a Bundle resource that will ship the resources needed for a successful SUC deployment to your target downstream clusters.

The Edge team maintains a ready-to-use Bundle resource for SUC in each of our suse-edge/fleet-examples releases under bundles/day2/system-upgrade-controller/controller-bundle.yaml.

If using the suse-edge/fleet-examples repository, make sure you are using the resources from a dedicated release tag.

Bundle creation can be done in one of the following ways:

Through the Rancher UI (Section 25.2.1.2.1, “Bundle creation - Rancher UI”) (when Rancher is available)

By manually deploying (Section 25.2.1.2.2, “Bundle creation - manual”) the resources to your management cluster

Once created, Fleet will be responsible for picking up the resource and deploying the SUC resources to all your target clusters. For information on how to track the deployment process, see Section 25.2.2.1, “Monitor SUC deployment”.

25.2.1.2.1 Bundle creation - Rancher UI

In the upper left corner, ☰ → Continuous Delivery

Go to Advanced > Bundles

Select Create from YAML

From here you can create the Bundle in one of the following ways:

By manually copying the file content to the Create from YAML page. File content can be retrieved from this URL - https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/bundles/day2/system-upgrade-controller/controller-bundle.yaml, where ${REVISION} is the Edge release tag that you desire (e.g. release-3.0.1).

By cloning the suse-edge/fleet-examples repository to the desired release tag and selecting the Read from File option in the Create from YAML page. From there, navigate to the bundles/day2/system-upgrade-controller directory and select controller-bundle.yaml. This will auto-populate the Create from YAML page with the Bundle content.

Change the target clusters for the Bundle:

To match all downstream clusters, change the default Bundle .spec.targets to:

```yaml
spec:
  targets:
  - clusterSelector: {}
```

For more granular downstream cluster mappings, see Mapping to Downstream Clusters.

Select Create

25.2.1.2.2 Bundle creation - manual

Choose the desired Edge release tag that you wish to deploy the SUC Bundle from (referenced below as ${REVISION}).

Pull the Bundle resource:

```shell
curl -o controller-bundle.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/bundles/day2/system-upgrade-controller/controller-bundle.yaml
```

Edit the Bundle target configurations: under spec.targets, provide your desired target list. By default, the Bundle resources from suse-edge/fleet-examples are NOT mapped to any downstream clusters.

To match all clusters, change the default Bundle target to:

```yaml
spec:
  targets:
  - clusterSelector: {}
```

Alternatively, if you want a more granular cluster selection, see Mapping to Downstream Clusters.

Apply the Bundle resource to your management cluster:

```shell
kubectl apply -f controller-bundle.yaml
```

View the created Bundle resource under the fleet-default namespace:

```shell
kubectl get bundles system-upgrade-controller -n fleet-default

# Example output
NAME                        BUNDLEDEPLOYMENTS-READY   STATUS
system-upgrade-controller   0/0
```

25.2.1.3 Deploying system-upgrade-controller when using a third-party GitOps workflow

To deploy the system-upgrade-controller using a third-party GitOps tool, depending on the tool, you might need information for the system-upgrade-controller Helm chart, its Kubernetes resources, or both.

Choose the specific Edge release whose SUC you wish to use.

From there, the SUC Helm chart data can be found under the helm configuration section of the fleets/day2/system-upgrade-controller/fleet.yaml file.

The SUC Kubernetes resources can be found in the SUC Bundle configuration under .spec.resources.content. The location for the Bundle is bundles/day2/system-upgrade-controller/controller-bundle.yaml.

Use the above-mentioned resources to populate the data that your third-party GitOps workflow needs in order to deploy SUC.
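If your GitOps tool needs the raw manifests, a small helper can list and print the resources embedded in the SUC Bundle. This sketch assumes the mikefarah yq v4 CLI is installed; the list_bundle_resources and print_bundle_resource function names are hypothetical, and any resource that declares an encoding would need to be decoded before use.

```shell
# Hypothetical helpers for extracting the Kubernetes resources embedded in a
# Fleet Bundle file. Assumes mikefarah yq v4 is available on the PATH.

# Print the name of every resource under .spec.resources.
list_bundle_resources() {
  yq '.spec.resources[].name' "${1:-controller-bundle.yaml}"
}

# Print the content of one embedded resource.
# $1: Bundle file, $2: resource name as printed by list_bundle_resources
print_bundle_resource() {
  RESOURCE_NAME="$2" yq '.spec.resources[] | select(.name == strenv(RESOURCE_NAME)) | .content' "$1"
}
```

For example, list_bundle_resources controller-bundle.yaml prints the embedded file names, and each name can then be passed to print_bundle_resource controller-bundle.yaml <name>.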

25.2.2 Monitor SUC resources using Rancher

This section covers how to monitor the lifecycle of the SUC deployment and any deployed SUC Plans using the Rancher UI.

25.2.2.1 Monitor SUC deployment

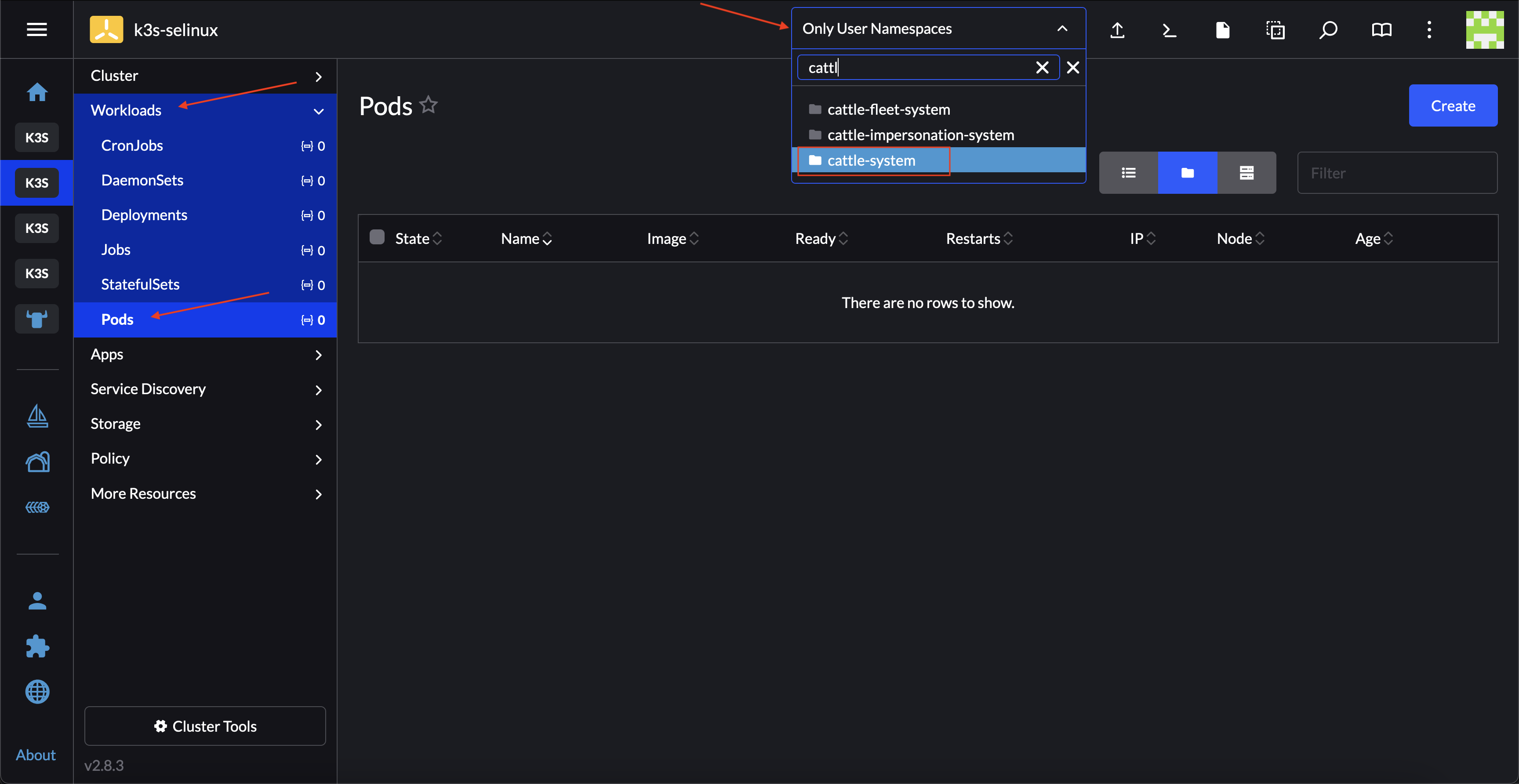

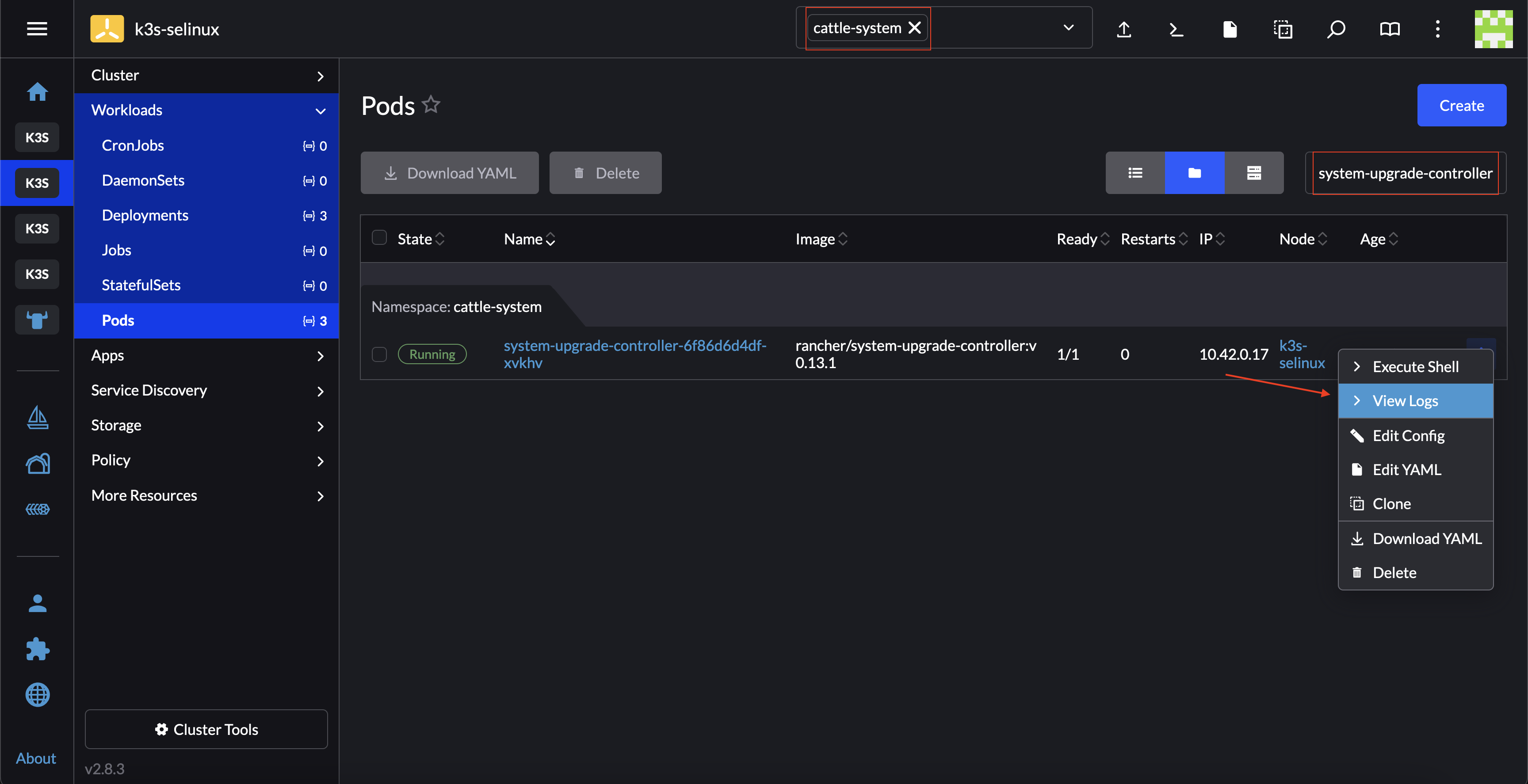

To check the SUC pod logs for a specific cluster:

In the upper left corner, ☰ → <your-cluster-name>

Select Workloads → Pods

Under the namespace drop down menu select the

cattle-systemnamespaceIn the Pod filter bar, write the SUC name -

system-upgrade-controllerOn the right of the pod select ⋮ → View Logs

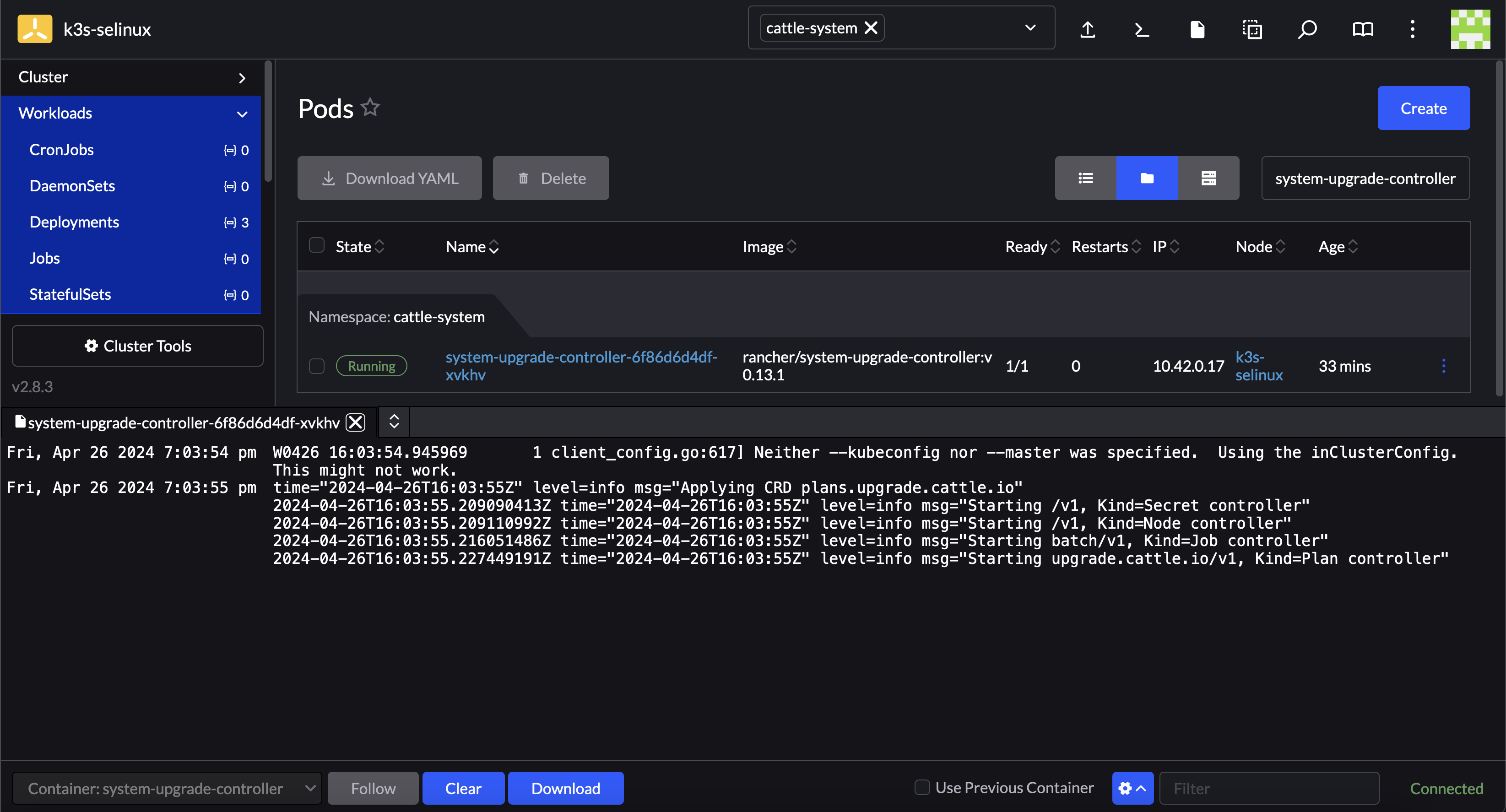

The SUC logs should looks something similar to:
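When command-line access to the downstream cluster is available, the same logs can also be read with kubectl. The sketch below assumes that your kubeconfig points at the downstream cluster and that SUC runs as a Deployment named system-upgrade-controller in the cattle-system namespace; the suc_logs function name is hypothetical.

```shell
# Print the system-upgrade-controller logs from the current downstream cluster.
# Assumes SUC runs as the Deployment cattle-system/system-upgrade-controller.
suc_logs() {
  # Extra arguments are passed through to kubectl logs
  kubectl logs --namespace cattle-system deployment/system-upgrade-controller "$@"
}
```

For example, suc_logs --tail=50 --follow streams the most recent log lines.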

25.2.2.2 Monitor SUC Plans

The SUC Plan Pods are kept alive for 15 minutes. After that, they are removed by the corresponding Job that created them. To have access to the SUC Plan Pod logs, you should enable logging for your cluster. For information on how to do this in Rancher, see Rancher Integration with Logging Services.

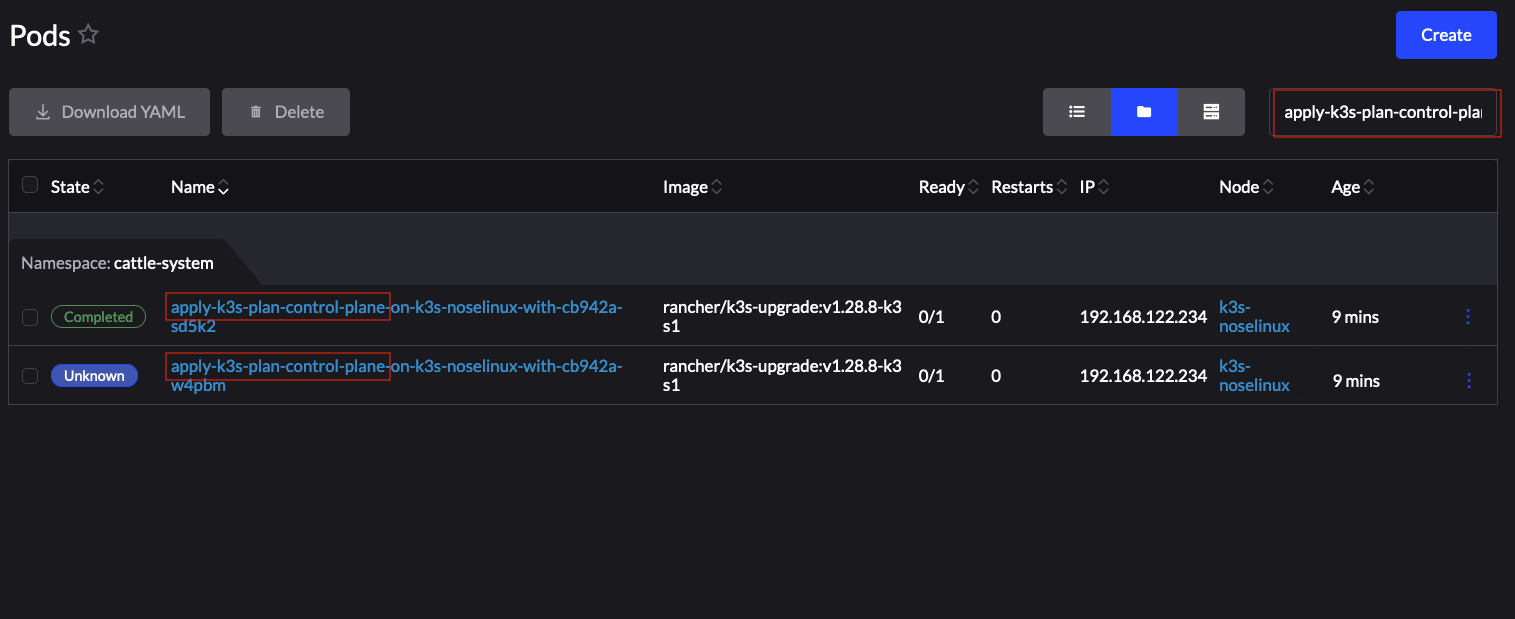

To check Pod logs for the specific SUC plan:

In the upper left corner, ☰ → <your-cluster-name>

Select Workloads → Pods

Under the namespace drop down menu select the

cattle-systemnamespaceIn the Pod filter bar, write the name for your SUC Plan Pod. The name will be in the following template format:

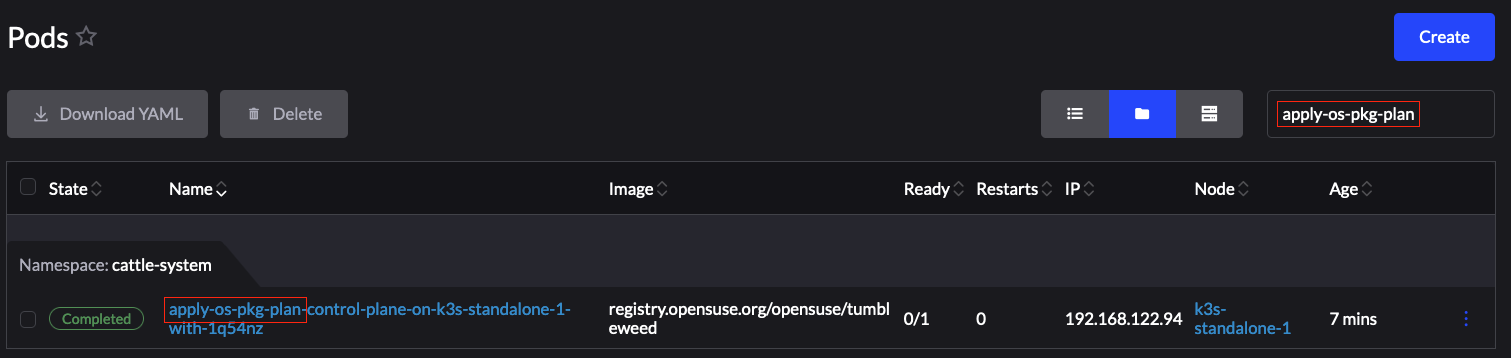

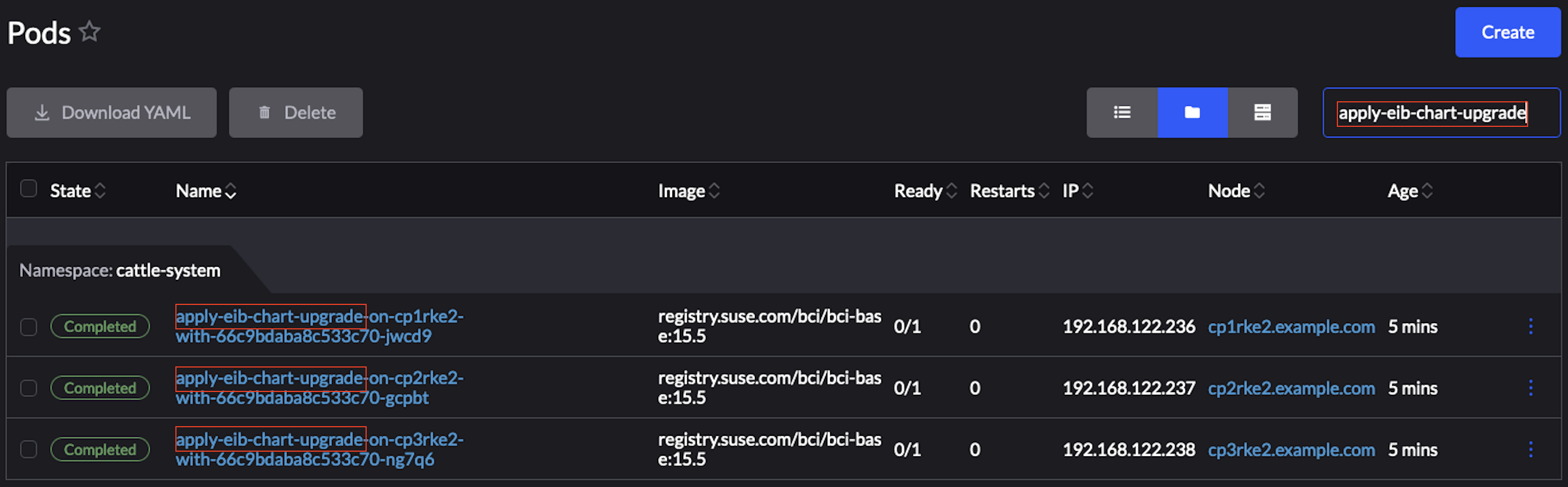

apply-<plan_name>-on-<node_name>Figure 25.1: Example Kubernetes upgrade plan pods #Note how in Figure 1, we have one Pod in Completed and one in Unknown state. This is expected and has happened due to the Kubernetes version upgrade on the node.

Figure 25.2: Example OS pacakge update plan pods #Figure 25.3: Example of upgrade plan pods for EIB deployed Helm charts on an HA cluster #Select the pod that you want to review the logs of and navigate to ⋮ → View Logs
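From the command line, the Pods created for a given Plan can be found by their name prefix. This sketch relies only on the apply-<plan_name>-on-<node_name> naming described above and assumes your kubeconfig points at the downstream cluster; the plan_pods function name is hypothetical.

```shell
# List the SUC Plan Pods created for one Plan in the cattle-system namespace.
# $1: Plan name; Pod names are expected to start with apply-<plan_name>-on-
plan_pods() {
  kubectl get pods --namespace cattle-system --no-headers \
    | grep "^apply-$1-on-"
}
```

For example, plan_pods os-pkg-plan-control-plane lists the Pods created for the control-plane OS package update Plan.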

25.3 OS package update

25.3.1 Components

This section covers the custom components that the OS package update process uses over the default Day 2 components (Section 25.1.1, “Components”).

25.3.1.1 edge-update.service

Systemd service responsible for performing the OS package update. Uses the transactional-update command to perform a distribution upgrade (dup).

If you wish to use a normal upgrade method, create an edge-update.conf file under /etc/edge/ on each node. Inside this file, add the UPDATE_METHOD=up variable.
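For example, the configuration file could be created on a node with a helper like the one below. It is a sketch assuming root access on the node; the write_edge_update_conf function name and its optional directory argument (useful for testing outside /etc/edge) are hypothetical conveniences, not part of the Edge tooling.

```shell
# Create edge-update.conf so that edge-update.service uses a normal upgrade
# ("up") instead of the default distribution upgrade ("dup").
# $1 (optional, hypothetical): target directory, defaults to /etc/edge
write_edge_update_conf() {
  conf_dir="${1:-/etc/edge}"
  mkdir -p "$conf_dir"
  printf 'UPDATE_METHOD=up\n' > "$conf_dir/edge-update.conf"
}
```

Running write_edge_update_conf as root on a node produces /etc/edge/edge-update.conf containing UPDATE_METHOD=up.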

Shipped through a SUC Plan, which should be located on each downstream cluster that is in need of an OS package update.

25.3.2 Requirements

General:

SCC registered machine - All downstream cluster nodes should be registered to https://scc.suse.com/. This is needed so that the edge-update.service can successfully connect to the needed OS RPM repositories.

Make sure that SUC Plan tolerations match node taints - If your Kubernetes cluster nodes have custom taints, make sure to add tolerations for those taints in the SUC Plans. By default, SUC Plans have tolerations only for control-plane nodes. Default tolerations include:

CriticalAddonsOnly=true:NoExecute

node-role.kubernetes.io/control-plane:NoSchedule

node-role.kubernetes.io/etcd:NoExecute

Note: Any additional tolerations must be added under the .spec.tolerations section of each Plan. SUC Plans related to the OS package update can be found in the suse-edge/fleet-examples repository under fleets/day2/system-upgrade-controller-plans/os-pkg-update. Make sure you use the Plans from a valid repository release tag.

An example of defining custom tolerations for the control-plane SUC Plan would look like this:

```yaml
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: os-pkg-plan-control-plane
spec:
  ...
  tolerations:
  # default tolerations
  - key: "CriticalAddonsOnly"
    operator: "Equal"
    value: "true"
    effect: "NoExecute"
  - key: "node-role.kubernetes.io/control-plane"
    operator: "Equal"
    effect: "NoSchedule"
  - key: "node-role.kubernetes.io/etcd"
    operator: "Equal"
    effect: "NoExecute"
  # custom toleration
  - key: "foo"
    operator: "Equal"
    value: "bar"
    effect: "NoSchedule"
  ...
```

Air-gapped:

Mirror SUSE RPM repositories - OS RPM repositories should be locally mirrored so that edge-update.service can have access to them. This can be achieved using RMT.
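Before adding custom tolerations to the SUC Plans, it can help to see which taints your nodes actually carry. The helper below is a sketch that assumes your kubeconfig points at the downstream cluster; list_node_taints is a hypothetical name, and its output lists the taint keys and effects that the Plan tolerations need to match.

```shell
# Print every node with the keys and effects of its taints, so that
# matching tolerations can be added under .spec.tolerations of each Plan.
list_node_taints() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,TAINT-KEYS:.spec.taints[*].key,EFFECTS:.spec.taints[*].effect'
}
```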

25.3.3 Update procedure

This section assumes you will be deploying the OS package update SUC Plan using Fleet (Chapter 6, Fleet). If you intend to deploy the SUC Plan using a different approach, refer to Section 25.3.4.3, “SUC Plan deployment - third-party GitOps workflow”.

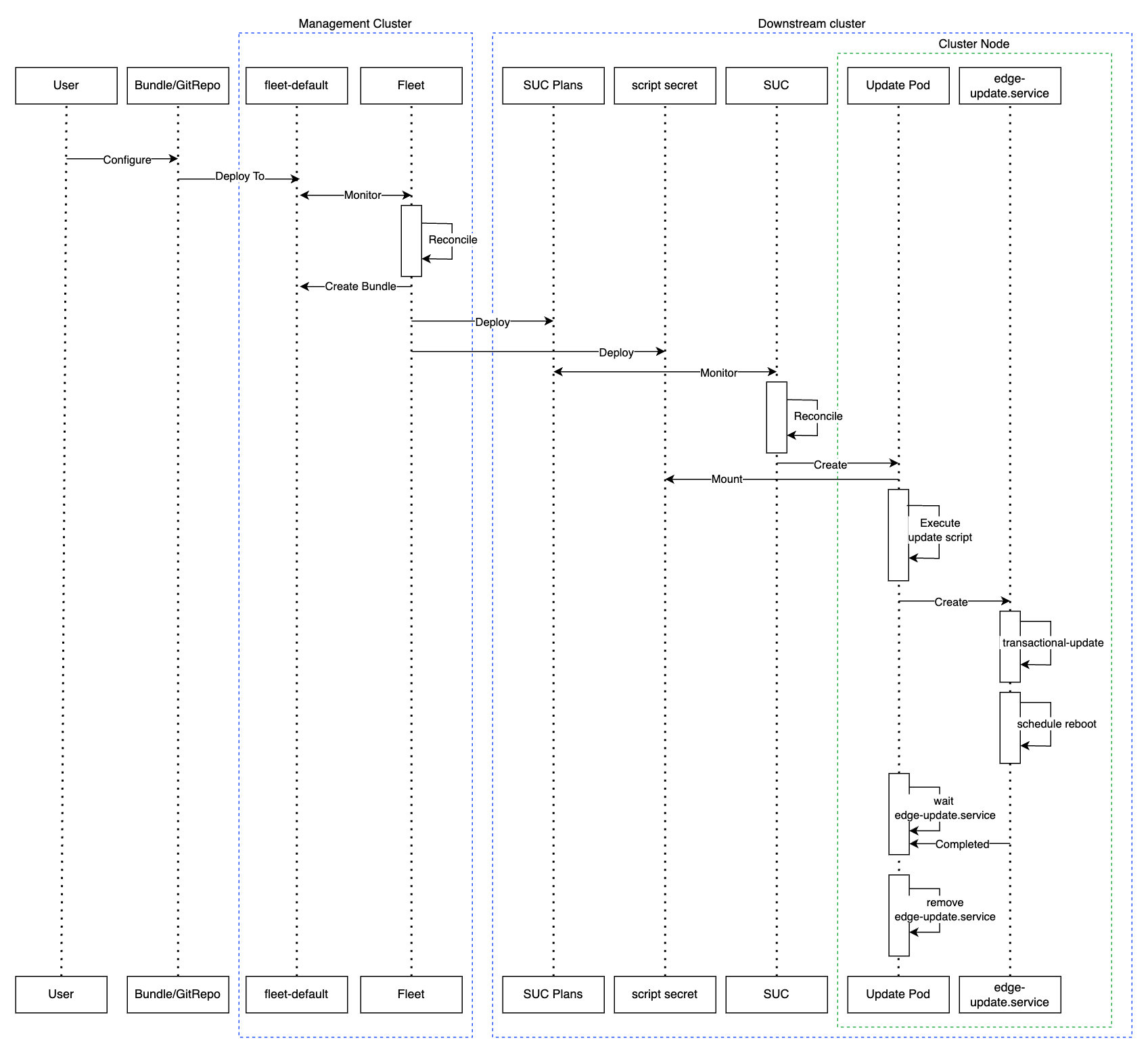

The OS package update procedure revolves around deploying SUC Plans to downstream clusters. These plans then hold information about how and on which nodes to deploy the edge-update.service systemd service. For information regarding the structure of a SUC Plan, refer to the upstream documentation.

OS package update SUC Plans are shipped in the following ways:

Through a GitRepo resource - Section 25.3.4.1, “SUC Plan deployment - GitRepo resource”

Through a Bundle resource - Section 25.3.4.2, “SUC Plan deployment - Bundle resource”

To determine which resource you should use, refer to Section 25.1.2, “Determine your use-case”.

For a full overview of what happens during the update procedure, refer to Section 25.3.3.1, “Overview”.

25.3.3.1 Overview

This section aims to describe the full workflow that the OS package update process goes through from start to finish.

OS package update steps:

Based on their use-case, the user determines whether to use a GitRepo or a Bundle resource for the deployment of the OS package update SUC Plans to the desired downstream clusters. For information on how to map a GitRepo/Bundle to a specific set of downstream clusters, see Mapping to Downstream Clusters.

If you are unsure whether you should use a GitRepo or a Bundle resource for the SUC Plan deployment, refer to Section 25.1.2, “Determine your use-case”.

For GitRepo/Bundle configuration options, refer to Section 25.3.4.1, “SUC Plan deployment - GitRepo resource” or Section 25.3.4.2, “SUC Plan deployment - Bundle resource”.

The user deploys the configured GitRepo/Bundle resource to the fleet-default namespace in their management cluster. This is done either manually or through the Rancher UI if such is available.

Fleet (Chapter 6, Fleet) constantly monitors the fleet-default namespace and immediately detects the newly deployed GitRepo/Bundle resource. For more information regarding which namespaces Fleet monitors, refer to Fleet’s Namespaces documentation.

If the user has deployed a GitRepo resource, Fleet will reconcile the GitRepo and, based on its paths and fleet.yaml configurations, deploy a Bundle resource in the fleet-default namespace. For more information, refer to Fleet’s GitRepo Contents documentation.

Fleet then proceeds to deploy the Kubernetes resources from this Bundle to all the targeted downstream clusters. In the context of OS package updates, Fleet deploys the following resources from the Bundle:

os-pkg-plan-agent SUC Plan - instructs SUC on how to do a package update on cluster agent nodes. Will not be interpreted if the cluster consists only of control-plane nodes.

os-pkg-plan-control-plane SUC Plan - instructs SUC on how to do a package update on cluster control-plane nodes.

os-pkg-update Secret - referenced in each SUC Plan; ships an update.sh script responsible for creating the edge-update.service systemd service, which will do the actual package update.

Note: The above resources will be deployed in the cattle-system namespace of each downstream cluster.

On the downstream cluster, SUC picks up the newly deployed SUC Plans and deploys an Update Pod on each node that matches the node selector defined in the SUC Plan. For information on how to monitor the SUC Plan Pod, refer to Section 25.2.2.2, “Monitor SUC Plans”.

The Update Pod (deployed on each node) mounts the os-pkg-update Secret and executes the update.sh script that the Secret ships.

The update.sh script proceeds to do the following:

Create the edge-update.service - the service created will be of type oneshot and will adopt the following workflow:

Update all package versions on the node OS by executing:

```shell
transactional-update cleanup dup
```

After a successful transactional-update, schedule a system reboot so that the package version updates can take effect.

Note: The system reboot will be scheduled for 1 minute after a successful transactional-update execution.

Start the edge-update.service and wait for it to complete.

Clean up the edge-update.service - after the systemd service has done its job, it is removed from the system in order to ensure that no accidental executions/reboots happen in the future.

The OS package update procedure finishes with the system reboot. After the reboot, all OS packages should be updated to the latest versions available in the OS RPM repositories.
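To make the edge-update.service workflow more concrete, the helper below prints a oneshot systemd unit that follows the same core steps: run transactional-update with the chosen method and, only if that succeeds, schedule a reboot one minute later. This is an illustrative sketch, not the unit that update.sh actually creates; the print_edge_update_unit name and the /usr/sbin binary paths are assumptions, and starting the service and removing it afterwards are not shown.

```shell
# Illustrative only: print a oneshot unit resembling the edge-update.service
# workflow described above. The real unit created by update.sh may differ.
# $1: "dup" (default) or "up", mirroring UPDATE_METHOD in edge-update.conf
print_edge_update_unit() {
  method="${1:-dup}"
  cat <<EOF
[Unit]
Description=Edge OS package update (illustrative sketch)

[Service]
Type=oneshot
# Update the OS packages in a new snapshot
ExecStart=/usr/sbin/transactional-update cleanup ${method}
# Runs only if ExecStart succeeded: reboot one minute later
ExecStartPost=/usr/sbin/shutdown -r +1
EOF
}
```

For example, print_edge_update_unit up prints the variant that performs a normal upgrade instead of a distribution upgrade.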

25.3.4 OS package update - SUC Plan deployment

This section describes how to orchestrate the deployment of SUC Plans related to OS package updates using Fleet’s GitRepo and Bundle resources.

25.3.4.1 SUC Plan deployment - GitRepo resource

A GitRepo resource that ships the needed OS package update SUC Plans can be deployed in one of the following ways:

Through the Rancher UI - Section 25.3.4.1.1, “GitRepo creation - Rancher UI” (when Rancher is available).

By manually deploying (Section 25.3.4.1.2, “GitRepo creation - manual”) the resource to your management cluster.

Once deployed, to monitor the OS package update process of the nodes of your targeted cluster, refer to Section 25.2.2.2, “Monitor SUC Plans”.

25.3.4.1.1 GitRepo creation - Rancher UI

In the upper left corner, ☰ → Continuous Delivery

Go to Git Repos → Add Repository

If you use the suse-edge/fleet-examples repository:

Repository URL - https://github.com/suse-edge/fleet-examples.git

Watch → Revision - choose a release tag for the suse-edge/fleet-examples repository that you wish to use

Under Paths, add the path to the OS package update Fleets that you wish to use - fleets/day2/system-upgrade-controller-plans/os-pkg-update

Select Next to move to the target configuration section. Only select clusters whose nodes' packages you wish to upgrade

Select Create

Alternatively, if you decide to use your own repository to host these files, you would need to provide your repo data above.

25.3.4.1.2 GitRepo creation - manual

Choose the desired Edge release tag that you wish to apply the OS package update SUC Plans from (referenced below as ${REVISION}).

Pull the GitRepo resource:

```shell
curl -o os-pkg-update-gitrepo.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/gitrepos/day2/os-pkg-update-gitrepo.yaml
```

Edit the GitRepo configuration: under spec.targets, specify your desired target list. By default, the GitRepo resources from suse-edge/fleet-examples are NOT mapped to any downstream clusters.

To match all clusters, change the default GitRepo target to:

```yaml
spec:
  targets:
  - clusterSelector: {}
```

Alternatively, if you want a more granular cluster selection, see Mapping to Downstream Clusters.

Apply the GitRepo resource to your management cluster:

```shell
kubectl apply -f os-pkg-update-gitrepo.yaml
```

View the created GitRepo resource under the fleet-default namespace:

```shell
kubectl get gitrepo os-pkg-update -n fleet-default

# Example output
NAME            REPO                                              COMMIT          BUNDLEDEPLOYMENTS-READY   STATUS
os-pkg-update   https://github.com/suse-edge/fleet-examples.git   release-3.0.1   0/0
```

25.3.4.2 SUC Plan deployment - Bundle resource

A Bundle resource that ships the needed OS package update SUC Plans can be deployed in one of the following ways:

Through the Rancher UI - Section 25.3.4.2.1, “Bundle creation - Rancher UI” (when Rancher is available).

By manually deploying (Section 25.3.4.2.2, “Bundle creation - manual”) the resource to your management cluster.

Once deployed, to monitor the OS package update process of the nodes of your targeted cluster, refer to Section 25.2.2.2, “Monitor SUC Plans”.

25.3.4.2.1 Bundle creation - Rancher UI

In the upper left corner, click ☰ → Continuous Delivery

Go to Advanced > Bundles

Select Create from YAML

From here you can create the Bundle in one of the following ways:

By manually copying the Bundle content to the Create from YAML page. Content can be retrieved from https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/bundles/day2/system-upgrade-controller-plans/os-pkg-update/pkg-update-bundle.yaml, where ${REVISION} is the Edge release that you are using

By cloning the suse-edge/fleet-examples repository to the desired release tag and selecting the Read from File option in the Create from YAML page. From there, navigate to the bundles/day2/system-upgrade-controller-plans/os-pkg-update directory and select pkg-update-bundle.yaml. This will auto-populate the Create from YAML page with the Bundle content.

Change the target clusters for the Bundle:

To match all downstream clusters, change the default Bundle .spec.targets to:

```yaml
spec:
  targets:
  - clusterSelector: {}
```

For more granular downstream cluster mappings, see Mapping to Downstream Clusters.

Select Create

25.3.4.2.2 Bundle creation - manual

Choose the desired Edge release tag that you wish to apply the OS package update SUC Plans from (referenced below as ${REVISION}).

Pull the Bundle resource:

```shell
curl -o pkg-update-bundle.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/bundles/day2/system-upgrade-controller-plans/os-pkg-update/pkg-update-bundle.yaml
```

Edit the Bundle target configurations: under spec.targets, provide your desired target list. By default, the Bundle resources from suse-edge/fleet-examples are NOT mapped to any downstream clusters.

To match all clusters, change the default Bundle target to:

```yaml
spec:
  targets:
  - clusterSelector: {}
```

Alternatively, if you want a more granular cluster selection, see Mapping to Downstream Clusters.

Apply the Bundle resource to your management cluster:

```shell
kubectl apply -f pkg-update-bundle.yaml
```

View the created Bundle resource under the fleet-default namespace:

```shell
kubectl get bundles os-pkg-update -n fleet-default

# Example output
NAME            BUNDLEDEPLOYMENTS-READY   STATUS
os-pkg-update   0/0
```

25.3.4.3 SUC Plan deployment - third-party GitOps workflow

There might be use-cases where users would like to incorporate the OS package update SUC Plans into their own third-party GitOps workflow (e.g. Flux).

To get the OS package update resources that you need, first determine the Edge release tag of the suse-edge/fleet-examples repository that you would like to use.

After that, resources can be found at fleets/day2/system-upgrade-controller-plans/os-pkg-update, where:

plan-control-plane.yaml - system-upgrade-controller Plan resource for control-plane nodes

plan-agent.yaml - system-upgrade-controller Plan resource for agent nodes

secret.yaml - Secret that ships a script that creates the edge-update.service systemd service

These Plan resources are interpreted by the system-upgrade-controller and should be deployed on each downstream cluster that you wish to upgrade. For information on how to deploy the system-upgrade-controller, see Section 25.2.1.3, “Deploying system-upgrade-controller when using a third-party GitOps workflow”.
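As one possible way to consume these files, a Kustomize-aware GitOps tool such as Flux could apply them through a kustomization.yaml placed next to the downloaded files. This is a hypothetical sketch; the namespace override assumes the resources belong in cattle-system, as with the Fleet-based deployment, and your tool may require additional configuration of its own to target each downstream cluster.

```shell
# Write a hypothetical kustomization.yaml next to the downloaded
# os-pkg-update files so a Kustomize-aware GitOps tool can apply them.
cat > kustomization.yaml <<'EOF'
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
# The Plans and Secret are expected in cattle-system on each downstream cluster
namespace: cattle-system
resources:
  - plan-control-plane.yaml
  - plan-agent.yaml
  - secret.yaml
EOF
```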

To better understand how your GitOps workflow can be used to deploy the SUC Plans for OS package update, it can be beneficial to take a look at the overview (Section 25.3.3.1, “Overview”) of the update procedure using Fleet.

25.4 Kubernetes version upgrade

This section covers Kubernetes upgrades for downstream clusters that have NOT been created through a Rancher (Chapter 4, Rancher) instance. For information on how to upgrade the Kubernetes version of Rancher-created clusters, see Upgrading and Rolling Back Kubernetes.

25.4.1 Components

This section covers the custom components that the Kubernetes upgrade process uses over the default Day 2 components (Section 25.1.1, “Components”).

25.4.1.1 rke2-upgrade

Image responsible for upgrading the RKE2 version of a specific node.

Shipped through a Pod created by SUC based on a SUC Plan. The Plan should be located on each downstream cluster that is in need of an RKE2 upgrade.

For more information regarding how the rke2-upgrade image performs the upgrade, see the upstream documentation.

25.4.1.2 k3s-upgrade

Image responsible for upgrading the K3s version of a specific node.

Shipped through a Pod created by SUC based on a SUC Plan. The Plan should be located on each downstream cluster that is in need of a K3s upgrade.

For more information regarding how the k3s-upgrade image performs the upgrade, see the upstream documentation.

25.4.2 Requirements

Back up your Kubernetes distribution:

For imported RKE2 clusters, see the RKE2 Backup and Restore documentation.

For imported K3s clusters, see the K3s Backup and Restore documentation.

Make sure that SUC Plan tolerations match node taints - If your Kubernetes cluster nodes have custom taints, make sure to add tolerations for those taints in the SUC Plans. By default, SUC Plans have tolerations only for control-plane nodes. Default tolerations include:

CriticalAddonsOnly=true:NoExecute

node-role.kubernetes.io/control-plane:NoSchedule

node-role.kubernetes.io/etcd:NoExecute

Note: Any additional tolerations must be added under the .spec.tolerations section of each Plan. SUC Plans related to the Kubernetes version upgrade can be found in the suse-edge/fleet-examples repository under:

For RKE2 - fleets/day2/system-upgrade-controller-plans/rke2-upgrade

For K3s - fleets/day2/system-upgrade-controller-plans/k3s-upgrade

Make sure you use the Plans from a valid repository release tag.

An example of defining custom tolerations for the RKE2 control-plane SUC Plan would look like this:

```yaml
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: rke2-plan-control-plane
spec:
  ...
  tolerations:
  # default tolerations
  - key: "CriticalAddonsOnly"
    operator: "Equal"
    value: "true"
    effect: "NoExecute"
  - key: "node-role.kubernetes.io/control-plane"
    operator: "Equal"
    effect: "NoSchedule"
  - key: "node-role.kubernetes.io/etcd"
    operator: "Equal"
    effect: "NoExecute"
  # custom toleration
  - key: "foo"
    operator: "Equal"
    value: "bar"
    effect: "NoSchedule"
  ...
```
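Where the cluster uses embedded etcd, an on-demand snapshot right before the upgrade is one way to cover the backup requirement above. The helper below is a sketch assuming it runs as root on a control-plane node with the rke2 or k3s binary on the PATH; snapshot_before_upgrade is a hypothetical name, and the RKE2 and K3s Backup and Restore documentation remains the reference for complete backup and restore procedures.

```shell
# Take an on-demand etcd snapshot before a Kubernetes upgrade.
# Assumes embedded etcd and root access on a control-plane node.
# $1: "rke2" or "k3s"
snapshot_before_upgrade() {
  distro="$1"
  name="pre-upgrade-$(date +%Y%m%d%H%M%S)"
  case "$distro" in
    rke2|k3s) "$distro" etcd-snapshot save --name "$name" ;;
    *) echo "usage: snapshot_before_upgrade rke2|k3s" >&2; return 1 ;;
  esac
}
```

For example, snapshot_before_upgrade rke2 stores a snapshot named pre-upgrade-<timestamp> in the distribution's default snapshot directory.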

25.4.3 Upgrade procedure

This section assumes you will be deploying SUC Plans using Fleet (Chapter 6, Fleet). If you intend to deploy the SUC Plan using a different approach, refer to Section 25.4.4.3, “SUC Plan deployment - third-party GitOps workflow”.

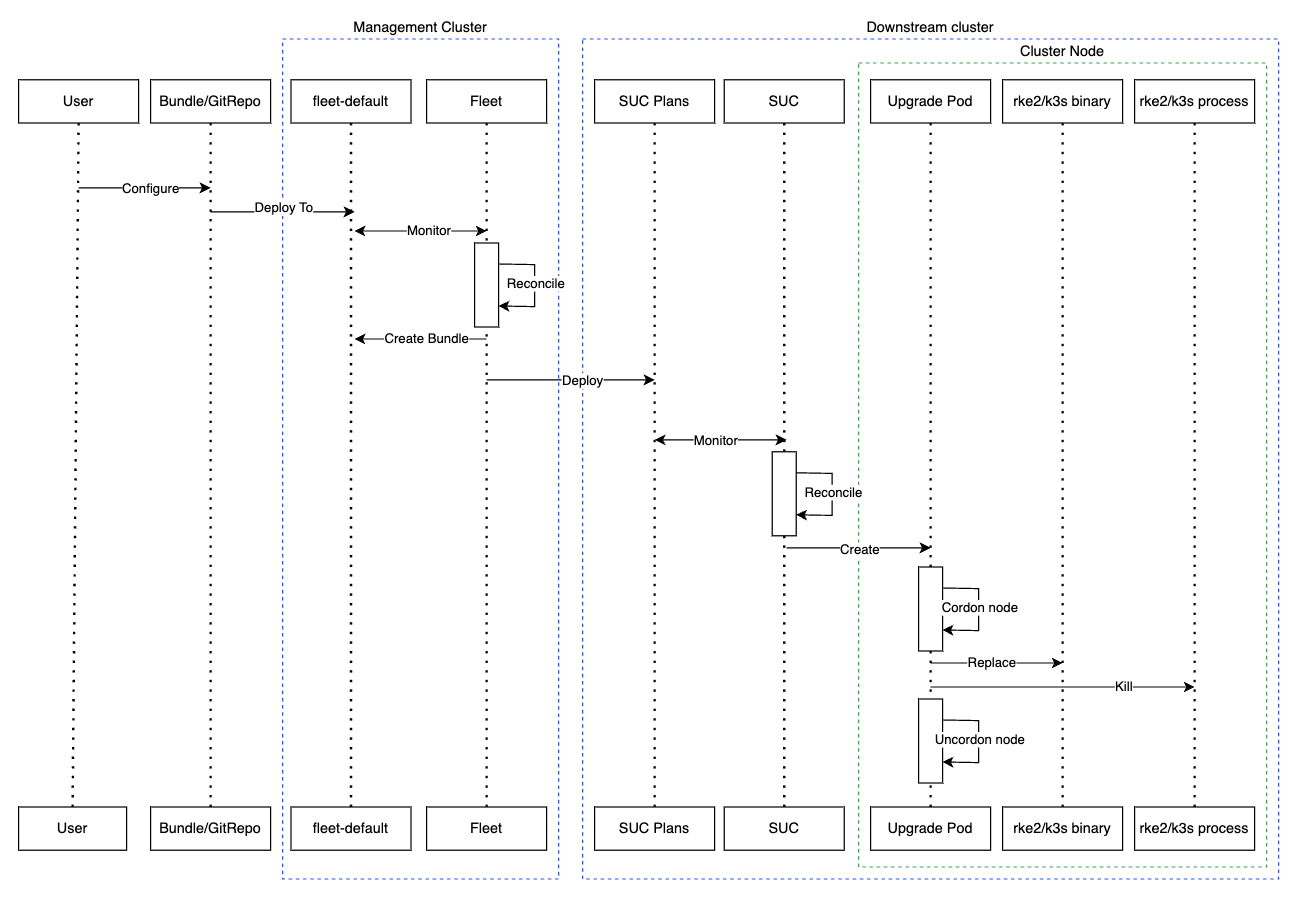

The Kubernetes version upgrade procedure revolves around deploying SUC Plans to downstream clusters. These plans hold information that instructs the SUC on which nodes to create Pods which run the rke2/k3s-upgrade images. For information regarding the structure of a SUC Plan, refer to the upstream documentation.
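For orientation, a control-plane Plan that runs the rke2-upgrade image could look roughly like the one written below. This is a sketch modelled on the upstream RKE2 automated upgrade example, not the Plan shipped in suse-edge/fleet-examples; the Plan name, serviceAccountName and version value are placeholders to adapt, and for K3s the image would be rancher/k3s-upgrade instead.

```shell
# Write an illustrative RKE2 control-plane upgrade Plan (placeholder values).
cat > example-rke2-plan-control-plane.yaml <<'EOF'
apiVersion: upgrade.cattle.io/v1
kind: Plan
metadata:
  name: example-rke2-plan-control-plane
  namespace: cattle-system
spec:
  # Upgrade one control-plane node at a time
  concurrency: 1
  nodeSelector:
    matchExpressions:
      - {key: node-role.kubernetes.io/control-plane, operator: In, values: ["true"]}
  tolerations:
    - key: "CriticalAddonsOnly"
      operator: "Equal"
      value: "true"
      effect: "NoExecute"
    - key: "node-role.kubernetes.io/control-plane"
      operator: "Equal"
      effect: "NoSchedule"
    - key: "node-role.kubernetes.io/etcd"
      operator: "Equal"
      effect: "NoExecute"
  # Assumption: must match the service account used by your SUC deployment
  serviceAccountName: system-upgrade-controller
  # Cordon the node while the upgrade Pod runs
  cordon: true
  upgrade:
    image: rancher/rke2-upgrade
  # Placeholder: set to the RKE2 release you are upgrading to
  version: "<target-rke2-version>"
EOF
```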

Kubernetes upgrade Plans are shipped in the following ways:

Through a

GitReporesources - Section 25.4.4.1, “SUC Plan deployment - GitRepo resource”Through a

Bundleresource - Section 25.4.4.2, “SUC Plan deployment - Bundle resource”

To determine which resource you should use, refer to Section 25.1.2, “Determine your use-case”.

For a full overview of what happens during the upgrade procedure, refer to Section 25.4.3.1, “Overview”.

25.4.3.1 Overview #

This section describes the full workflow that the Kubernetes version upgrade process goes through from start to finish.

Kubernetes version upgrade steps:

1. Based on their use-case, the user determines whether to use a GitRepo or a Bundle resource for the deployment of the Kubernetes upgrade SUC Plans to the desired downstream clusters. For information on how to map a GitRepo/Bundle to a specific set of downstream clusters, see Mapping to Downstream Clusters.

   - If you are unsure whether you should use a GitRepo or a Bundle resource for the SUC Plan deployment, refer to Section 25.1.2, “Determine your use-case”.
   - For GitRepo/Bundle configuration options, refer to Section 25.4.4.1, “SUC Plan deployment - GitRepo resource” or Section 25.4.4.2, “SUC Plan deployment - Bundle resource”.

2. The user deploys the configured GitRepo/Bundle resource to the fleet-default namespace in their management cluster. This is done either manually or through the Rancher UI, if it is available.

3. Fleet (Chapter 6, Fleet) constantly monitors the fleet-default namespace and immediately detects the newly deployed GitRepo/Bundle resource. For more information regarding which namespaces Fleet monitors, refer to Fleet’s Namespaces documentation.

4. If the user has deployed a GitRepo resource, Fleet will reconcile the GitRepo and, based on its paths and fleet.yaml configurations, deploy a Bundle resource in the fleet-default namespace. For more information, refer to Fleet’s GitRepo Contents documentation.

5. Fleet then proceeds to deploy the Kubernetes resources from this Bundle to all the targeted downstream clusters. In the context of the Kubernetes version upgrade, Fleet deploys the following resources from the Bundle (depending on the Kubernetes distribution):

   - rke2-plan-agent/k3s-plan-agent - instructs SUC on how to do a Kubernetes upgrade on cluster agent nodes. This Plan is not interpreted if the cluster consists only of control-plane nodes.
   - rke2-plan-control-plane/k3s-plan-control-plane - instructs SUC on how to do a Kubernetes upgrade on cluster control-plane nodes.

   Note: The above SUC Plans will be deployed in the cattle-system namespace of each downstream cluster.

6. On the downstream cluster, SUC picks up the newly deployed SUC Plans and deploys an Update Pod on each node that matches the node selector defined in the SUC Plan. For information on how to monitor the SUC Plan Pod, refer to Section 25.2.2.2, “Monitor SUC Plans”.

7. Depending on which SUC Plans you have deployed, the Update Pod will run either an rke2-upgrade or a k3s-upgrade image and will execute the following workflow on each cluster node:

   - Cordon cluster node - to ensure that no pods are scheduled accidentally on this node while it is being upgraded, we mark it as unschedulable.
   - Replace the rke2/k3s binary that is installed on the node OS with the binary shipped by the rke2-upgrade/k3s-upgrade image that the Pod is currently running.
   - Kill the rke2/k3s process that is running on the node OS - this instructs the supervisor to automatically restart the rke2/k3s process using the new version.
   - Uncordon cluster node - after the successful Kubernetes distribution upgrade, the node is again marked as schedulable.

   Note: For further information regarding how the rke2-upgrade and k3s-upgrade images work, see the rke2-upgrade and k3s-upgrade upstream projects.

With the above steps executed, the Kubernetes version of each cluster node should have been upgraded to the desired Edge compatible release.
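To follow this workflow from the downstream cluster side, one option is to list the SUC Plans, the Jobs that SUC creates for them, and the nodes. Cordoned nodes show `SchedulingDisabled` in their status, and the `VERSION` column changes once a node is upgraded. The helper below is a hypothetical sketch. It assumes kubectl points at a downstream cluster and that SUC runs in the cattle-system namespace, where the Plans are deployed. Section 25.2.2.2, “Monitor SUC Plans” remains the reference procedure.

```shell
# Hypothetical helper: print the Kubernetes upgrade Plans, the Jobs SUC created
# for them, and the node list. Assumes the current kubectl context targets a
# downstream cluster and that SUC runs in the cattle-system namespace.
show_k8s_upgrade_progress() {
  local ns="${1:-cattle-system}"
  echo "== SUC Plans in ${ns}"
  kubectl get plans.upgrade.cattle.io -n "$ns"
  echo "== Jobs in ${ns}"
  kubectl get jobs -n "$ns"
  # Cordoned nodes report "SchedulingDisabled"; VERSION shows the kubelet version.
  echo "== Nodes"
  kubectl get nodes
}

# Usage example:
#   show_k8s_upgrade_progress
```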

25.4.4 Kubernetes version upgrade - SUC Plan deployment #

25.4.4.1 SUC Plan deployment - GitRepo resource #

A GitRepo resource that ships the needed Kubernetes upgrade SUC Plans can be deployed in one of the following ways:

Through the Rancher UI - Section 25.4.4.1.1, “GitRepo creation - Rancher UI” (when Rancher is available).

By manually deploying (Section 25.4.4.1.2, “GitRepo creation - manual”) the resource to your management cluster.

Once the resource is deployed, refer to Section 25.2.2.2, “Monitor SUC Plans” to monitor the Kubernetes upgrade process on the nodes of your targeted clusters.

25.4.4.1.1 GitRepo creation - Rancher UI #

In the upper left corner, click ☰ → Continuous Delivery

Go to Git Repos → Add Repository

If you use the suse-edge/fleet-examples repository:

Repository URL - https://github.com/suse-edge/fleet-examples.git

Watch → Revision - choose the suse-edge/fleet-examples release tag that you wish to use

Under Paths, add the path to the Kubernetes distribution upgrade Fleets as seen in the release tag:

For RKE2 - fleets/day2/system-upgrade-controller-plans/rke2-upgrade

For K3s - fleets/day2/system-upgrade-controller-plans/k3s-upgrade

Select Next to move to the target configuration section. Only select the clusters on which you wish to upgrade the desired Kubernetes distribution

Create

Alternatively, if you decide to use your own repository to host these files, provide your repository data in the steps above.

25.4.4.1.2 GitRepo creation - manual #

Choose the desired Edge release tag that you wish to apply the Kubernetes SUC upgrade Plans from (referenced below as ${REVISION}).

Pull the GitRepo resource:

For RKE2 clusters:

curl -o rke2-upgrade-gitrepo.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/gitrepos/day2/rke2-upgrade-gitrepo.yaml

For K3s clusters:

curl -o k3s-upgrade-gitrepo.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/gitrepos/day2/k3s-upgrade-gitrepo.yaml

Edit the GitRepo configuration: under spec.targets, specify your desired target list. By default, the GitRepo resources from suse-edge/fleet-examples are NOT mapped to any downstream clusters.

To match all clusters, change the default GitRepo target to:

spec:
  targets:
  - clusterSelector: {}

Alternatively, if you want a more granular cluster selection, see Mapping to Downstream Clusters.

Apply the GitRepo resources to your management cluster:

# RKE2
kubectl apply -f rke2-upgrade-gitrepo.yaml

# K3s
kubectl apply -f k3s-upgrade-gitrepo.yaml

View the created GitRepo resource under the fleet-default namespace:

# RKE2
kubectl get gitrepo rke2-upgrade -n fleet-default

# K3s
kubectl get gitrepo k3s-upgrade -n fleet-default

# Example output
NAME           REPO                                              COMMIT          BUNDLEDEPLOYMENTS-READY   STATUS
k3s-upgrade    https://github.com/suse-edge/fleet-examples.git   release-3.0.1   0/0
rke2-upgrade   https://github.com/suse-edge/fleet-examples.git   release-3.0.1   0/0
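The download step above can be wrapped so that a mistyped distribution or missing release tag fails early. This is a minimal sketch with a hypothetical function name. It builds the same raw.githubusercontent.com URL as the curl commands above and adds `-f` so that an HTTP error, for example from a non-existent tag, is not saved as the YAML file.

```shell
# Hypothetical helper: download the Kubernetes upgrade GitRepo manifest for a
# distribution ("rke2" or "k3s") from a suse-edge/fleet-examples release tag.
fetch_upgrade_gitrepo() {
  local distro="$1" revision="$2"
  case "$distro" in
    rke2|k3s) ;;
    *) echo "unsupported distribution: ${distro}" >&2; return 1 ;;
  esac
  if [[ -z "$revision" ]]; then
    echo "a release tag is required, e.g. release-3.0.1" >&2
    return 1
  fi
  local url="https://raw.githubusercontent.com/suse-edge/fleet-examples/${revision}/gitrepos/day2/${distro}-upgrade-gitrepo.yaml"
  # -f fails on HTTP errors instead of saving an error page as the manifest.
  curl -fsSL -o "${distro}-upgrade-gitrepo.yaml" "$url"
}

# Usage example (edit spec.targets before applying):
#   fetch_upgrade_gitrepo rke2 release-3.0.1
#   kubectl apply -f rke2-upgrade-gitrepo.yaml
```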

25.4.4.2 SUC Plan deployment - Bundle resource #

A Bundle resource that ships the needed Kubernetes upgrade SUC Plans can be deployed in one of the following ways:

Through the Rancher UI - Section 25.4.4.2.1, “Bundle creation - Rancher UI” (when Rancher is available).

By manually deploying (Section 25.4.4.2.2, “Bundle creation - manual”) the resource to your management cluster.

Once the resource is deployed, refer to Section 25.2.2.2, “Monitor SUC Plans” to monitor the Kubernetes upgrade process on the nodes of your targeted clusters.

25.4.4.2.1 Bundle creation - Rancher UI #

In the upper left corner, click ☰ → Continuous Delivery

Go to Advanced > Bundles

Select Create from YAML

From here you can create the Bundle in one of the following ways:

By manually copying the Bundle content to the Create from YAML page. The content can be retrieved from the suse-edge/fleet-examples repository (see the bundle paths in the next option).

By cloning the suse-edge/fleet-examples repository to the desired release tag and selecting the Read from File option in the Create from YAML page. From there, navigate to the bundle that you need (/bundles/day2/system-upgrade-controller-plans/rke2-upgrade/plan-bundle.yaml for RKE2 and /bundles/day2/system-upgrade-controller-plans/k3s-upgrade/plan-bundle.yaml for K3s). This will auto-populate the Create from YAML page with the Bundle content.

Change the target clusters for the Bundle:

To match all downstream clusters, change the default Bundle .spec.targets to:

spec:
  targets:
  - clusterSelector: {}

For more granular downstream cluster mappings, see Mapping to Downstream Clusters.

Create

25.4.4.2.2 Bundle creation - manual #

Choose the desired Edge release tag that you wish to apply the Kubernetes SUC upgrade Plans from (referenced below as ${REVISION}).

Pull the Bundle resources:

For RKE2 clusters:

curl -o rke2-plan-bundle.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/bundles/day2/system-upgrade-controller-plans/rke2-upgrade/plan-bundle.yaml

For K3s clusters:

curl -o k3s-plan-bundle.yaml https://raw.githubusercontent.com/suse-edge/fleet-examples/${REVISION}/bundles/day2/system-upgrade-controller-plans/k3s-upgrade/plan-bundle.yaml

Edit the Bundle target configurations: under spec.targets, provide your desired target list. By default, the Bundle resources from suse-edge/fleet-examples are NOT mapped to any downstream clusters.

To match all clusters, change the default Bundle target to:

spec:
  targets:
  - clusterSelector: {}

Alternatively, if you want a more granular cluster selection, see Mapping to Downstream Clusters.

Apply the Bundle resources to your management cluster:

# For RKE2
kubectl apply -f rke2-plan-bundle.yaml

# For K3s
kubectl apply -f k3s-plan-bundle.yaml

View the created Bundle resource under the fleet-default namespace:

# For RKE2
kubectl get bundles rke2-upgrade -n fleet-default

# For K3s
kubectl get bundles k3s-upgrade -n fleet-default

# Example output
NAME           BUNDLEDEPLOYMENTS-READY   STATUS
k3s-upgrade    0/0
rke2-upgrade   0/0
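The `0/0` in the example output means that the Bundle is not yet targeting any cluster. A minimal sketch for waiting until a Bundle is deployed everywhere it is targeted is shown below. The function names are hypothetical, and the helper assumes that the Bundle exposes `status.summary.ready` and `status.summary.desiredReady` counters. A ready Bundle only means the Plans were delivered. It does not mean that the nodes have finished upgrading, which you still track through Section 25.2.2.2, “Monitor SUC Plans”.

```shell
# Hypothetical helper: succeed once every cluster targeted by a Fleet Bundle
# reports it as ready. Assumes kubectl points at the management cluster and
# that the Bundle status carries summary.ready / summary.desiredReady counters.
bundle_is_ready() {
  local name="$1" ns="${2:-fleet-default}" counts
  counts="$(kubectl get bundles "$name" -n "$ns" \
    -o jsonpath='{.status.summary.ready}/{.status.summary.desiredReady}')" || return 1
  local ready="${counts%/*}" desired="${counts#*/}"
  # An empty or 0 desired count means the Bundle is not mapped to any cluster,
  # which is the default for the fleet-examples Bundles.
  [[ -n "$desired" && "$desired" != "0" && "$ready" == "$desired" ]]
}

# Poll every 10 seconds until the Bundle is ready or the timeout (seconds) expires.
wait_for_bundle() {
  local name="$1" timeout="${2:-600}" waited=0
  until bundle_is_ready "$name"; do
    if (( waited >= timeout )); then
      echo "timed out waiting for bundle ${name}" >&2
      return 1
    fi
    sleep 10
    waited=$(( waited + 10 ))
  done
}

# Usage example:
#   wait_for_bundle rke2-upgrade 900
```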

25.4.4.3 SUC Plan deployment - third-party GitOps workflow #

There might be use-cases where users would like to incorporate the Kubernetes upgrade resources to their own third-party GitOps workflow (e.g. Flux).

To get the upgrade resources that you need, first determine the Edge release tag of the suse-edge/fleet-examples repository that you would like to use.

After that, the resources can be found at:

For an RKE2 cluster upgrade:

For control-plane nodes - fleets/day2/system-upgrade-controller-plans/rke2-upgrade/plan-control-plane.yaml

For agent nodes - fleets/day2/system-upgrade-controller-plans/rke2-upgrade/plan-agent.yaml

For a K3s cluster upgrade:

For control-plane nodes - fleets/day2/system-upgrade-controller-plans/k3s-upgrade/plan-control-plane.yaml

For agent nodes - fleets/day2/system-upgrade-controller-plans/k3s-upgrade/plan-agent.yaml

These Plan resources are interpreted by the system-upgrade-controller and should be deployed on each downstream cluster that you wish to upgrade. For information on how to deploy the system-upgrade-controller, see Section 25.2.1.3, “Deploying system-upgrade-controller when using a third-party GitOps workflow”.

To better understand how your GitOps workflow can be used to deploy the SUC Plans for Kubernetes version upgrade, it can be beneficial to take a look at the overview (Section 25.4.3.1, “Overview”) of the update procedure using Fleet.
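When a third-party GitOps repository is populated, the two Plan files listed above can be fetched for one distribution and release tag in one go. The helper below is a hypothetical sketch. It only prints the raw URLs for the paths listed above, so the download tool and target directory stay your choice.

```shell
# Hypothetical helper: print the raw URLs of the Kubernetes upgrade SUC Plans
# for one distribution ("rke2" or "k3s") at a given fleet-examples release tag.
suc_plan_urls() {
  local distro="$1" revision="$2" role
  case "$distro" in
    rke2|k3s) ;;
    *) echo "unsupported distribution: ${distro}" >&2; return 1 ;;
  esac
  for role in control-plane agent; do
    printf 'https://raw.githubusercontent.com/suse-edge/fleet-examples/%s/fleets/day2/system-upgrade-controller-plans/%s-upgrade/plan-%s.yaml\n' \
      "$revision" "$distro" "$role"
  done
}

# Usage example: download both Plans into the directory your GitOps tool watches.
#   mkdir -p rke2-upgrade && cd rke2-upgrade
#   suc_plan_urls rke2 release-3.0.1 | xargs -n 1 curl -fsSLO
```

The file names (plan-control-plane.yaml, plan-agent.yaml) are the same for both distributions, so keep RKE2 and K3s Plans in separate directories.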

25.5 Helm chart upgrade #

The below sections focus on using Fleet functionalities to achieve a Helm chart update.

Users adopting a third-party GitOps workflow should take the configurations for their desired Helm chart from its fleet.yaml located at fleets/day2/chart-templates/<chart-name>. Make sure you are retrieving the chart data from a valid "Day 2" Edge release.

25.5.1 Components #

Apart from the default Day 2 components (Section 25.1.1, “Components”), no other custom components are needed for this operation.

25.5.2 Preparation for air-gapped environments #

25.5.2.1 Ensure that you have access to your Helm chart’s upgrade fleet.yaml file #

Host the needed resources on a local git server that is accessible by your management cluster.

25.5.2.2 Find the required assets for your Edge release version #

Go to the Day 2 release page and find the Edge 3.X.Y release that you want to upgrade your chart to and click Assets.

From the release’s Assets section, download the following files, which are required for an air-gapped upgrade of a SUSE supported helm chart:

| Release File | Description |
|---|---|
| edge-save-images.sh | This script pulls the images in the edge-release-images.txt file and saves them to a '.tar.gz' archive that can then be used in your air-gapped environment. |
| edge-save-oci-artefacts.sh | This script pulls the SUSE OCI chart artefacts in the edge-release-helm-oci-artefacts.txt file and creates a '.tar.gz' archive of a directory containing all other chart OCI archives. |
| edge-load-images.sh | This script loads the images in the '.tar.gz' archive generated by edge-save-images.sh, retags them and pushes them to your private registry. |
| edge-load-oci-artefacts.sh | This script takes a directory containing '.tgz' SUSE OCI charts and loads all OCI charts to your private registry. The directory is retrieved from the '.tar.gz' archive that the edge-save-oci-artefacts.sh script has generated. |
| edge-release-helm-oci-artefacts.txt | This file contains a list of OCI artefacts for the SUSE Edge release Helm charts. |
| edge-release-images.txt | This file contains a list of images needed by the Edge release Helm charts. |

25.5.2.3 Create the SUSE Edge release images archive #

On a machine with internet access:

Make edge-save-images.sh executable:

chmod +x edge-save-images.sh

Use the edge-save-images.sh script to create a Docker importable '.tar.gz' archive:

./edge-save-images.sh --source-registry registry.suse.com

This will create a ready-to-load edge-images.tar.gz archive (unless you have specified the -i|--images option) with the needed images.

Copy this archive to your air-gapped machine:

scp edge-images.tar.gz <user>@<machine_ip>:/path

25.5.2.4 Create a SUSE Edge Helm chart OCI images archive #

On a machine with internet access:

Make edge-save-oci-artefacts.sh executable:

chmod +x edge-save-oci-artefacts.sh

Use the edge-save-oci-artefacts.sh script to create a '.tar.gz' archive of all SUSE Edge Helm chart OCI images:

./edge-save-oci-artefacts.sh --source-registry registry.suse.com

This will create an oci-artefacts.tar.gz archive containing all SUSE Edge Helm chart OCI images.

Copy this archive to your air-gapped machine:

scp oci-artefacts.tar.gz <user>@<machine_ip>:/path

25.5.2.5 Load SUSE Edge release images to your air-gapped machine #

On your air-gapped machine:

Log into your private registry (if required):

podman login <REGISTRY.YOURDOMAIN.COM:PORT>

Make edge-load-images.sh executable:

chmod +x edge-load-images.sh

Use edge-load-images.sh to load the images from the copied edge-images.tar.gz archive, retag them and push them to your private registry:

./edge-load-images.sh --source-registry registry.suse.com --registry <REGISTRY.YOURDOMAIN.COM:PORT> --images edge-images.tar.gz

25.5.2.6 Load SUSE Edge Helm chart OCI images to your air-gapped machine #

On your air-gapped machine:

Log into your private registry (if required):

podman login <REGISTRY.YOURDOMAIN.COM:PORT>

Make edge-load-oci-artefacts.sh executable:

chmod +x edge-load-oci-artefacts.sh

Untar the copied oci-artefacts.tar.gz archive:

tar -xvf oci-artefacts.tar.gz

This will produce a directory with the naming template edge-release-oci-tgz-<date>.

Pass this directory to the edge-load-oci-artefacts.sh script to load the SUSE Edge Helm chart OCI images to your private registry:

Note: This script assumes the helm CLI has been pre-installed on your environment. For Helm installation instructions, see Installing Helm.

./edge-load-oci-artefacts.sh --archive-directory edge-release-oci-tgz-<date> --registry <REGISTRY.YOURDOMAIN.COM:PORT> --source-registry registry.suse.com
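Because the extracted directory name contains a date, you have to look it up before calling the load script. The helper below is a hypothetical sketch that resolves the edge-release-oci-tgz-<date> directory in the current working directory. It refuses to guess when there is no match or more than one, so an older extraction is never loaded by accident.

```shell
# Hypothetical helper: print the single edge-release-oci-tgz-<date> directory
# produced by extracting oci-artefacts.tar.gz in the current directory.
find_oci_dir() {
  # The trailing '/' makes the glob match directories only.
  local matches=( edge-release-oci-tgz-*/ )
  # Without a match the glob stays literal, so also check that it exists.
  if [[ ${#matches[@]} -ne 1 || ! -d "${matches[0]}" ]]; then
    echo "expected exactly one edge-release-oci-tgz-* directory" >&2
    return 1
  fi
  printf '%s\n' "${matches[0]%/}"
}

# Usage example:
#   dir="$(find_oci_dir)" && ./edge-load-oci-artefacts.sh --archive-directory "$dir" \
#     --registry <REGISTRY.YOURDOMAIN.COM:PORT> --source-registry registry.suse.com
```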

25.5.2.7 Create registry mirrors pointing to your private registry for your Kubernetes distribution #

For RKE2, see Containerd Registry Configuration

For K3s, see Embedded Registry Mirror

25.5.3 Upgrade procedure #

The upgrade procedure below uses Rancher’s Fleet (Chapter 6, Fleet) functionality. Users with a third-party GitOps workflow should retrieve the chart versions supported by each Edge release from Section A.1, “Abstract” and populate these versions in their third-party GitOps workflow.

This section focuses on the following Helm upgrade procedure use-cases:

I have a new cluster and would like to deploy and manage a SUSE Helm chart (Section 25.5.3.1, “I have a new cluster and would like to deploy and manage a SUSE Helm chart”)

I would like to upgrade a Fleet managed Helm chart (Section 25.5.3.2, “I would like to upgrade a Fleet managed Helm chart”)

I would like to upgrade an EIB created Helm chart (Section 25.5.3.3, “I would like to upgrade an EIB created Helm chart”)

Manually deployed Helm charts cannot be reliably upgraded. We suggest redeploying the Helm chart using the method described in Section 25.5.3.1, “I have a new cluster and would like to deploy and manage a SUSE Helm chart”.

25.5.3.1 I have a new cluster and would like to deploy and manage a SUSE Helm chart #

For users that want to manage their Helm chart lifecycle through Fleet.

25.5.3.1.1 Prepare your Fleet resources #

Acquire the chart’s Fleet resources from the Edge release tag that you wish to use:

From the selected Edge release tag revision, navigate to the Helm chart fleet - fleets/day2/chart-templates/<chart>

Copy the chart Fleet directory to the Git repository that you will be using for your GitOps workflow

Optionally, if the Helm chart requires configurations to its values, edit the .helm.values configuration inside the fleet.yaml file of the copied directory

Optionally, there may be use-cases where you need to add additional resources to your chart’s fleet so that it can better fit your environment. For information on how to enhance your Fleet directory, see Git Repository Contents

An example for the longhorn Helm chart would look like:

User Git repository structure:

<user_repository_root>
└── longhorn
    └── fleet.yaml

fleet.yaml content populated with user longhorn data:

defaultNamespace: longhorn-system

helm:
  releaseName: "longhorn"
  chart: "longhorn"
  repo: "https://charts.longhorn.io"
  version: "1.6.1"
  takeOwnership: true
  # custom chart value overrides
  values:
    # Example for user provided custom values content
    defaultSettings:
      deletingConfirmationFlag: true

# https://fleet.rancher.io/bundle-diffs
diff:
  comparePatches:
  - apiVersion: apiextensions.k8s.io/v1
    kind: CustomResourceDefinition
    name: engineimages.longhorn.io
    operations:
    - {"op":"remove", "path":"/status/conditions"}
    - {"op":"remove", "path":"/status/storedVersions"}
    - {"op":"remove", "path":"/status/acceptedNames"}
  - apiVersion: apiextensions.k8s.io/v1
    kind: CustomResourceDefinition
    name: nodes.longhorn.io
    operations:
    - {"op":"remove", "path":"/status/conditions"}
    - {"op":"remove", "path":"/status/storedVersions"}
    - {"op":"remove", "path":"/status/acceptedNames"}
  - apiVersion: apiextensions.k8s.io/v1
    kind: CustomResourceDefinition
    name: volumes.longhorn.io
    operations:
    - {"op":"remove", "path":"/status/conditions"}
    - {"op":"remove", "path":"/status/storedVersions"}
    - {"op":"remove", "path":"/status/acceptedNames"}

Note: These are just example values that are used to illustrate custom configurations over the longhorn chart. They should NOT be treated as deployment guidelines for the longhorn chart.
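The first two preparation steps, fetching a release tag and copying one chart template, can be scripted. The helper below is a hypothetical sketch. It shallow-clones suse-edge/fleet-examples at the given tag into a temporary directory and copies fleets/day2/chart-templates/<chart> into your own repository checkout. The `longhorn` chart name and the destination path in the usage lines are only examples.

```shell
# Hypothetical helper: copy the Fleet directory of one SUSE Edge chart from a
# fleet-examples release tag into your own GitOps repository checkout.
copy_chart_fleet() {
  local revision="$1" chart="$2" dest="$3" tmp
  tmp="$(mktemp -d)" || return 1
  # A shallow clone of the tag is enough; only the chart template is needed.
  if ! git clone --quiet --depth 1 --branch "$revision" \
      https://github.com/suse-edge/fleet-examples.git "$tmp/fleet-examples"; then
    rm -rf "$tmp"
    return 1
  fi
  local src="$tmp/fleet-examples/fleets/day2/chart-templates/${chart}"
  if [[ ! -d "$src" ]]; then
    echo "chart template not found: ${chart}" >&2
    rm -rf "$tmp"
    return 1
  fi
  cp -r "$src" "$dest/"
  rm -rf "$tmp"
}

# Usage example:
#   copy_chart_fleet release-3.0.1 longhorn ~/my-gitops-repo
#   # then optionally edit ~/my-gitops-repo/longhorn/fleet.yaml (.helm.values)
```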

25.5.3.1.2 Create the GitRepo #

After populating your repository with the chart’s Fleet resources, you must create a GitRepo resource. This resource will hold information on how to access your chart’s Fleet resources and to which clusters it needs to apply those resources.

The GitRepo resource can be created through the Rancher UI, or by manually deploying the resource to the management cluster.

For information on how to create and deploy the GitRepo resource manually, see Creating a Deployment.

To create a GitRepo resource through the Rancher UI, see Accessing Fleet in the Rancher UI.

Example longhorn GitRepo resource for manual deployment:

apiVersion: fleet.cattle.io/v1alpha1
kind: GitRepo
metadata:
  name: longhorn-git-repo
  namespace: fleet-default
spec:
  # If using a tag
  # revision: <user_repository_tag>
  #
  # If using a branch
  # branch: <user_repository_branch>
  paths:
  # As seen in the 'Prepare your Fleet resources' example
  - longhorn
  repo: <user_repository_url>
  targets:
  # Match all clusters
  - clusterSelector: {}

25.5.3.1.3 Managing the deployed Helm chart #

Once deployed with Fleet, for Helm chart upgrades, see Section 25.5.3.2, “I would like to upgrade a Fleet managed Helm chart”.

25.5.3.2 I would like to upgrade a Fleet managed Helm chart #

Determine the version to which you need to upgrade your chart so that it is compatible with an Edge 3.X.Y release. The Helm chart version for each Edge release can be viewed in Section A.1, “Abstract”.

In your Fleet-monitored Git repository, edit the Helm chart’s fleet.yaml file with the correct chart version and repository from Section A.1, “Abstract”.

After you commit and push the changes to your repository, Fleet will trigger an upgrade of the desired Helm chart.
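The version edit can be scripted for the common case where helm.version sits on its own line, indented by two spaces under helm:, as in the longhorn fleet.yaml example earlier in this section. The helper below is a hypothetical sketch that assumes GNU sed. It does not change the chart repository, so update helm.repo by hand if Section A.1, “Abstract” lists a different one.

```shell
# Hypothetical helper: set helm.version in a chart's fleet.yaml. Assumes GNU sed
# and that helm.version is on its own line indented by two spaces.
bump_chart_version() {
  local fleet_yaml="$1" new_version="$2"
  grep -qE '^  version:' "$fleet_yaml" || {
    echo "no helm.version line found in ${fleet_yaml}" >&2
    return 1
  }
  # Keep the key and indentation, replace only the value; deeper-indented
  # 'version:' keys (for example inside helm.values) are left untouched.
  sed -i -E "s/^(  version:[[:space:]]*).*/\1\"${new_version}\"/" "$fleet_yaml"
}

# Usage example:
#   bump_chart_version longhorn/fleet.yaml 1.6.1
#   git add longhorn/fleet.yaml
#   git commit -m "Upgrade longhorn chart to 1.6.1"
#   git push
```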

25.5.3.3 I would like to upgrade an EIB created Helm chart #

This section assumes that you have deployed the system-upgrade-controller (SUC) beforehand. If you have not done so, or are unsure why you need it, see the default Day 2 components (Section 25.1.1, “Components”) list.

EIB deploys Helm charts by using the auto-deploy manifests functionality of rke2/k3s. It creates a HelmChart resource definition manifest under the /var/lib/rancher/<rke2/k3s>/server/manifests location of the initialiser node and lets rke2/k3s pick it up and auto-deploy it in the cluster.

From a Day 2 point of view, this means that any upgrade of the Helm chart needs to happen by editing the HelmChart manifest file of the specific chart. To automate this process for multiple clusters, this section uses SUC Plans.

Below you can find information on:

The general overview (Section 25.5.3.3.1, “Overview”) of the helm chart upgrade workflow.

The necessary upgrade steps (Section 25.5.3.3.2, “Upgrade Steps”) needed for a successful helm chart upgrade.

An example (Section 25.5.3.3.3, “Example”) showcasing a Longhorn chart upgrade using the explained method.

How to use the upgrade process with a different GitOps tool (Section 25.5.3.3.4, “Helm chart upgrade using a third-party GitOps tool”).

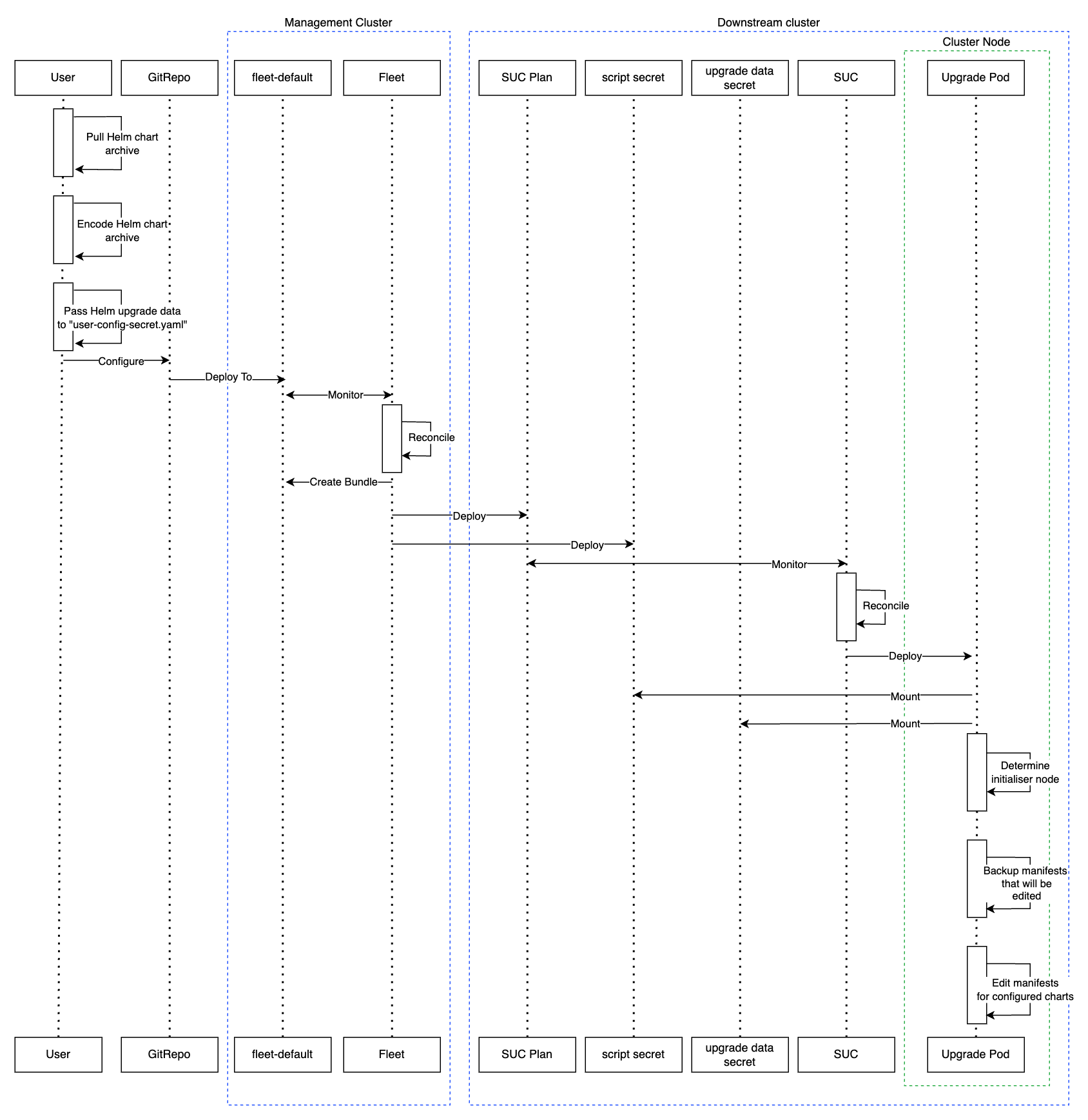

25.5.3.3.1 Overview #

This section gives a high-level overview of the workflow that the user goes through in order to upgrade one or multiple Helm charts. For a detailed explanation of the steps needed for a Helm chart upgrade, see Section 25.5.3.3.2, “Upgrade Steps”.

1. The workflow begins with the user pulling the new Helm chart archive(s) that they wish to upgrade their chart(s) to.

2. The archive(s) should then be encoded and passed as configuration to the eib-chart-upgrade-user-data.yaml file that is located under the fleet directory for the related SUC Plan. This is further explained in the upgrade steps (Section 25.5.3.3.2, “Upgrade Steps”) section.

3. The user then proceeds to configure and deploy a GitRepo resource that will ship all the needed resources (SUC Plan, secrets, etc.) to the downstream clusters.

   The resource is deployed on the management cluster under the fleet-default namespace.

4. Fleet (Chapter 6, Fleet) detects the deployed resource and deploys all the configured resources to the specified downstream clusters. Deployed resources include:

   - The eib-chart-upgrade SUC Plan that will be used by SUC to create an Upgrade Pod on each node.
   - The eib-chart-upgrade-script Secret that ships the upgrade script that the Upgrade Pod will use to upgrade the HelmChart manifests on the initialiser node.
   - The eib-chart-upgrade-user-data Secret that ships the chart data that the upgrade script will use in order to understand which chart manifests it needs to upgrade.

5. Once the eib-chart-upgrade SUC Plan has been deployed, the SUC picks it up and creates a Job which deploys the Upgrade Pod.

6. Once deployed, the Upgrade Pod mounts the eib-chart-upgrade-script and eib-chart-upgrade-user-data Secrets and executes the upgrade script that is shipped by the eib-chart-upgrade-script Secret.

7. The upgrade script does the following:

   - Determines whether the Pod that the script is running on has been deployed on the initialiser node. The initialiser node is the node that is hosting the HelmChart manifests. For a single-node cluster, it is the single control-plane node. For HA clusters, it is the node that you have marked as initializer when creating the cluster in EIB. If you have not specified the initializer property, then the first node from the nodes list is marked as initializer. For more information, see the upstream documentation for EIB.

     Note: If the upgrade script is running on a non-initialiser node, it immediately finishes its execution and does not go through the steps below.

   - Backs up the manifests that will be edited in order to ensure disaster recovery.

     Note: By default, backups of the manifests are stored under the /tmp/eib-helm-chart-upgrade-<date> directory. If you wish to use a custom location, you can pass the MANIFEST_BACKUP_DIR environment variable to the Helm chart upgrade SUC Plan (example in the Plan).

   - Edits the HelmChart manifests. As of this version, the following properties are changed in order to trigger a chart upgrade:
     - The content of the chartContent property is replaced with the encoded archive provided in the eib-chart-upgrade-user-data Secret.
     - The value of the version property is replaced with the version provided in the eib-chart-upgrade-user-data Secret.

8. After the successful execution of the upgrade script, the Helm integration for RKE2/K3s will pick up the change and automatically trigger an upgrade on the Helm chart.

25.5.3.3.2 Upgrade Steps #

1. Determine an Edge release tag from which you wish to copy the Helm chart upgrade logic.

2. Copy the fleets/day2/system-upgrade-controller-plans/eib-chart-upgrade fleet to the repository that your Fleet will be using to do GitOps from.

3. Pull the Helm chart archive that you wish to upgrade to:

helm pull [chart URL | repo/chartname]

# Alternatively if you want to pull a specific version:
# helm pull [chart URL | repo/chartname] --version 0.0.0

4. Encode the chart archive that you pulled:

# Encode the archive and disable line wrapping
base64 -w 0 <chart-archive>.tgz

5. Configure the eib-chart-upgrade-user-data.yaml secret located under the eib-chart-upgrade fleet that you copied in step (2):

   The secret ships a file called chart_upgrade_data.txt. This file holds the chart upgrade data that the upgrade script will use to know which charts need to be upgraded. The file expects one line per chart, in the format "<name>|<version>|<base64_encoded_archive>":

   - name - the name of the Helm chart as seen in the kubernetes.helm.charts.name[] property of the EIB definition file.
   - version - the new version of the Helm chart. During the upgrade, this value will be used to replace the old version value of the HelmChart manifest.
   - base64_encoded_archive - the output of base64 -w 0 <chart-archive>.tgz. During the upgrade, this value will be used to replace the old chartContent value of the HelmChart manifest.

   Note: The <name>|<version>|<base64_encoded_archive> line should be removed from the file before you start adding your data. It serves as an example of where and how you should configure your chart data.

6. Configure a GitRepo resource that will ship your chart upgrade fleet. For more information on what a GitRepo is, see GitRepo Resource.

   Configure the GitRepo through the Rancher UI:

   - In the upper left corner, click ☰ → Continuous Delivery
   - Go to Git Repos → Add Repository
   - Here, pass your repository data and the path to your chart upgrade fleet
   - Select Next and specify the target clusters on which you want to upgrade the configured charts
   - Create

   If Rancher is not available on your setup, you can configure a GitRepo manually on your management cluster:

   - Populate the following template with your data:

apiVersion: fleet.cattle.io/v1alpha1
kind: GitRepo
metadata:
  name: CHANGE_ME
  namespace: fleet-default
spec:
  # if running from a tag
  # revision: CHANGE_ME
  # if running from a branch
  # branch: CHANGE_ME
  paths:
  # path to your chart upgrade fleet relative to your repository
  - CHANGE_ME
  # your repository URL
  repo: CHANGE_ME
  targets:
  # Select target clusters
  - clusterSelector: CHANGE_ME
  # To match all clusters:
  # - clusterSelector: {}

     For more information on how to set up and deploy a GitRepo resource, see GitRepo Resource and Create a GitRepo Resource. For information on how to match target clusters on a more granular level, see Mapping to Downstream Clusters.

   - Deploy the configured GitRepo resource to the fleet-default namespace of the management cluster.
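Steps 4 and 5 above, encoding an archive and placing it in the user-data Secret, can be combined. The two helpers below are a hypothetical sketch and are not part of the eib-chart-upgrade fleet. `make_chart_entry` prints one `<name>|<version>|<base64_encoded_archive>` line using GNU `base64 -w 0`. `render_user_data_secret` wraps the entries it reads on stdin into a Secret with the same layout as the eib-chart-upgrade-user-data example in Section 25.5.3.3.3, “Example”. Compare its output with the file in your copied fleet before you replace that file.

```shell
# Hypothetical helpers; not part of the eib-chart-upgrade fleet.

# Print one "<name>|<version>|<base64_encoded_archive>" entry.
make_chart_entry() {
  local name="$1" version="$2" archive="$3"
  [[ -f "$archive" ]] || { echo "archive not found: ${archive}" >&2; return 1; }
  # -w 0 (GNU coreutils) disables line wrapping so the entry stays on one line.
  printf '%s|%s|%s\n' "$name" "$version" "$(base64 -w 0 "$archive")"
}

# Read entries from stdin and print the eib-chart-upgrade-user-data Secret,
# indenting each entry under the chart_upgrade_data.txt block scalar.
render_user_data_secret() {
  cat <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: eib-chart-upgrade-user-data
type: Opaque
stringData:
  # <name>|<version>|<base64_encoded_archive>
  chart_upgrade_data.txt: |
EOF
  sed 's/^/    /'
}

# Usage example:
#   make_chart_entry longhorn 1.6.1 longhorn-1.6.1.tgz \
#     | render_user_data_secret > eib-chart-upgrade-user-data.yaml
```

To upgrade several charts at once, run `make_chart_entry` once per chart and pipe all of the lines into a single `render_user_data_secret` call.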

Executing these steps should result in a successfully created GitRepo resource. Fleet will then pick it up and create a Bundle. This Bundle will hold the raw Kubernetes resources that the GitRepo has configured under its fleet directory.

Fleet will then deploy all the Kubernetes resources from the Bundle to the specified downstream clusters. One of these resources will be a SUC Plan that will trigger the chart upgrade. For a full list of the resources that will be deployed and the workflow of the upgrade process, refer to the overview (Section 25.5.3.3.1, “Overview”) section.

To track the upgrade process itself, refer to the Monitor SUC Plans (Section 25.2.2.2, “Monitor SUC Plans”) section.

25.5.3.3.3 Example #

This section provides a real-life example of the steps described in Section 25.5.3.3.2, “Upgrade Steps”.

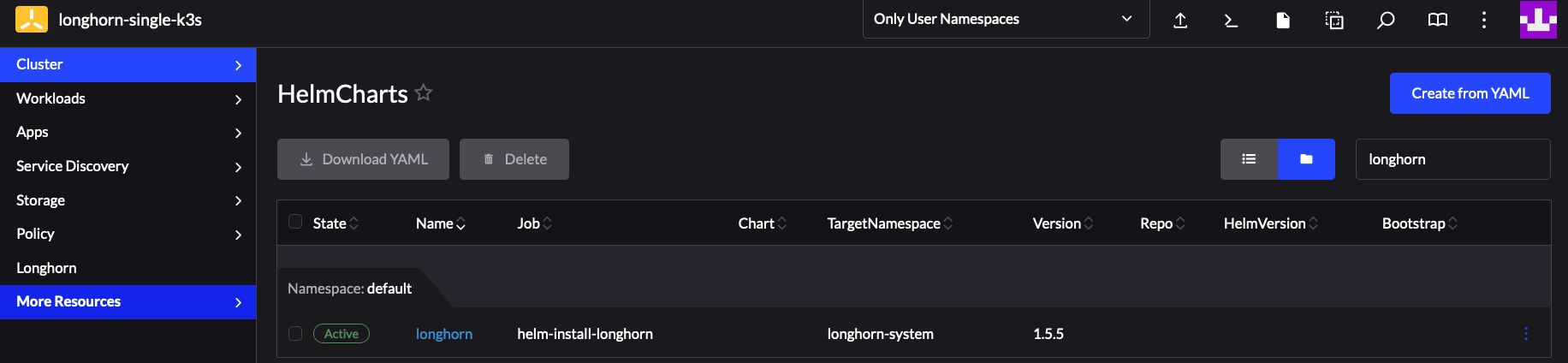

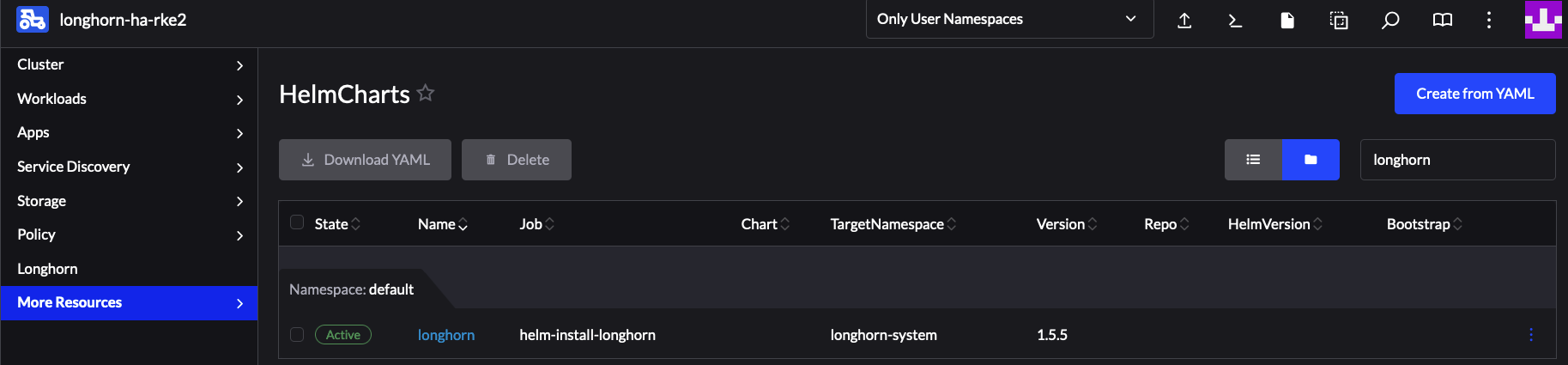

I have the following two EIB deployed clusters:

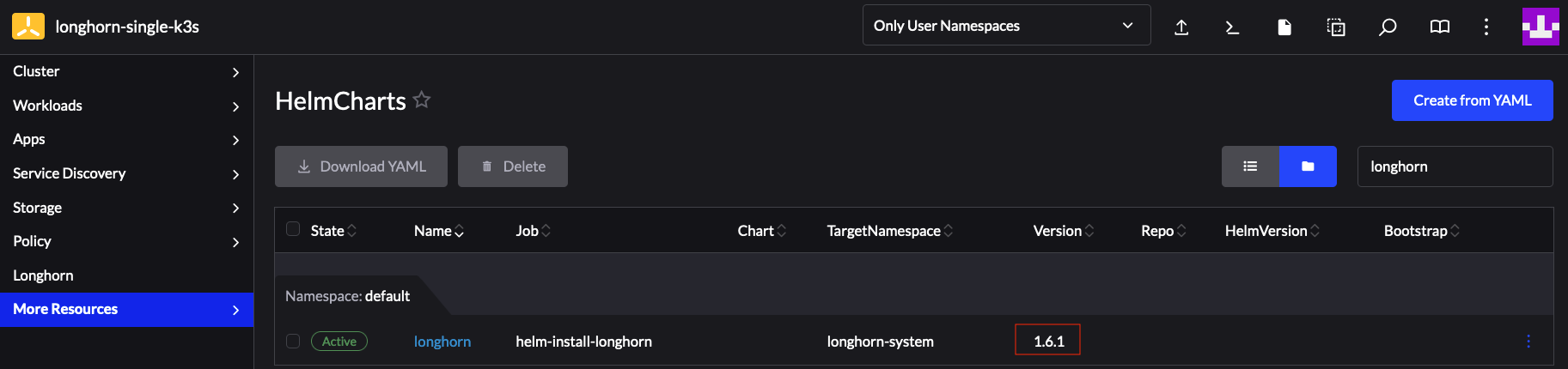

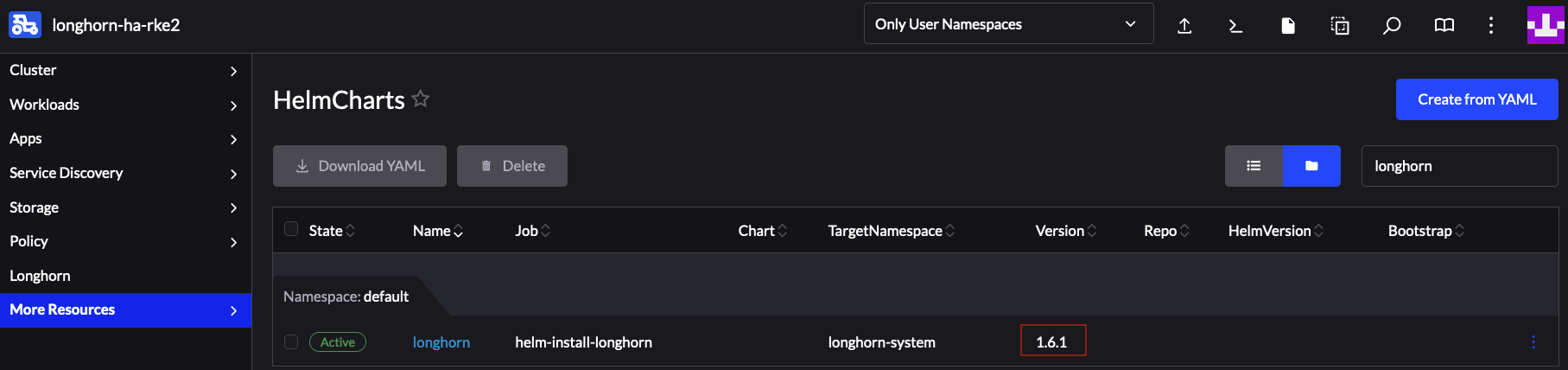

longhorn-single-k3s - single node K3s cluster

longhorn-ha-rke2 - HA RKE2 cluster

Both clusters are running Longhorn and have been deployed through EIB, using the following image definition snippet:

kubernetes:
  # HA RKE2 cluster specific snippet
  # nodes:
  # - hostname: cp1rke2.example.com
  #   initializer: true
  #   type: server
  # - hostname: cp2rke2.example.com
  #   type: server
  # - hostname: cp3rke2.example.com
  #   type: server
  # - hostname: agent1rke2.example.com
  #   type: agent
  # - hostname: agent2rke2.example.com
  #   type: agent
  # version depending on the distribution
  version: v1.28.9+k3s1/v1.28.9+rke2r1
  helm:
    charts:
    - name: longhorn
      repositoryName: longhorn
      targetNamespace: longhorn-system
      createNamespace: true
      version: 1.5.5
    repositories:
    - name: longhorn
      url: https://charts.longhorn.io
...

The problem is that longhorn-single-k3s and longhorn-ha-rke2 are currently running a Longhorn version that is not compatible with any Edge release.

We need to upgrade the chart on both clusters to an Edge-supported Longhorn version.

To do this we follow these steps:

Determine the Edge relase tag from which we want to take the upgrade logic. For example, this example will use the

release-3.0.1release tag for which the supported Longhorn version is1.6.1.Clone the

release-3.0.1release tag and copy thefleets/day2/system-upgrade-controller-plans/eib-chart-upgradedirectory to our own repository.For simplicity this section works from a branch of the

suse-edge/fleet-examplesrepository, so the directory structure is the same, but you can place theeib-chart-upgradefleet anywhere in your repository.Directory structure example.

. ... |-- fleets | `-- day2 | `-- system-upgrade-controller-plans | `-- eib-chart-upgrade | |-- eib-chart-upgrade-script.yaml | |-- eib-chart-upgrade-user-data.yaml | |-- fleet.yaml | `-- plan.yaml ...

Add the Longhorn chart repository:

helm repo add longhorn https://charts.longhorn.io

Pull the Longhorn chart version

1.6.1:helm pull longhorn/longhorn --version 1.6.1

This will pull the longhorn as an archvie named

longhorn-1.6.1.tgz.Encode the Longhorn archive:

base64 -w 0 longhorn-1.6.1.tgz

This will output a long one-line base64 encoded string of the archive.

Now we have all the needed data to configure the

eib-chart-upgrade-user-data.yamlfile. The file configuration should look like this:apiVersion: v1 kind: Secret metadata: name: eib-chart-upgrade-user-data type: Opaque stringData: # <name>|<version>|<base64_encoded_archive> chart_upgrade_data.txt: | longhorn|1.6.1|H4sIFAAAAAAA/ykAK2FIUjBjSE02THk5NWIzV...longhornis the name of the chart as seen in my EIB definition file1.6.1is the version to which I want to upgrade theversionproperty of the LonghornHelmChartmanifestH4sIFAAAAAAA/ykAK2FIUjBjSE02THk5NWIzV…is a snippet of the encoded Longhorn1.6.1archive. A snippet has been added here for better readibility. You should always provide the full base64 encoded archive string here.NoteThis example shows configuration for a single chart upgrade, but if your use-case requires to upgrade multiple charts on multiple clusters, you can append the additional chart data as seen below:

apiVersion: v1
kind: Secret
metadata:
  name: eib-chart-upgrade-user-data
type: Opaque
stringData:
  # <name>|<version>|<base64_encoded_archive>
  chart_upgrade_data.txt: |
    chartA|0.0.0|<chartA_base64_archive>
    chartB|0.0.0|<chartB_base64_archive>
    chartC|0.0.0|<chartC_base64_archive>
    ...
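Writing this file by hand becomes error-prone once the base64 strings get long. The sketch below generates it from <name>|<version>|<archive path> arguments, encoding each archive with base64 -w 0 as in the encoding step above. It assumes bash and GNU base64; gen_chart_upgrade_user_data is a hypothetical helper, and each <name> must still match the chart name in your EIB definition file.

```shell
#!/usr/bin/env bash
# Sketch: generate eib-chart-upgrade-user-data.yaml from one or more
# "<name>|<version>|<path-to-chart-archive>" arguments.
# Assumes GNU base64 (-w 0). gen_chart_upgrade_user_data is hypothetical.
set -euo pipefail

gen_chart_upgrade_user_data() {
  local entry name version archive

  if [[ $# -eq 0 ]]; then
    echo "usage: gen_chart_upgrade_user_data '<name>|<version>|<archive>' ..." >&2
    return 1
  fi

  # Validate every entry before printing anything.
  for entry in "$@"; do
    IFS='|' read -r name version archive <<<"$entry"
    if [[ -z "$name" || -z "$version" || ! -f "$archive" ]]; then
      echo "invalid entry '${entry}': expected <name>|<version>|<existing archive>" >&2
      return 1
    fi
  done

  cat <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: eib-chart-upgrade-user-data
type: Opaque
stringData:
  # <name>|<version>|<base64_encoded_archive>
  chart_upgrade_data.txt: |
EOF

  # One line per chart inside the block scalar (4-space indent).
  for entry in "$@"; do
    IFS='|' read -r name version archive <<<"$entry"
    printf '    %s|%s|%s\n' "$name" "$version" "$(base64 -w 0 "$archive")"
  done
}
```

For example, gen_chart_upgrade_user_data "longhorn|1.6.1|longhorn-1.6.1.tgz" > eib-chart-upgrade-user-data.yaml writes the single-chart configuration shown earlier; passing several arguments produces the multi-chart form.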

We also decided that we do not want to keep manifest backups at

/tmp, so the following configuration was added to theplan.yamlfile:apiVersion: upgrade.cattle.io/v1 kind: Plan metadata: name: eib-chart-upgrade spec: ... upgrade: ... # For when you want to backup your chart # manifest data under a specific directory # envs: - name: MANIFEST_BACKUP_DIR value: "/root"This will ensure that manifest backups will be saved under the

/rootdirectory instead of/tmp.Now that we have made all the needed configurations, what is left is to create the

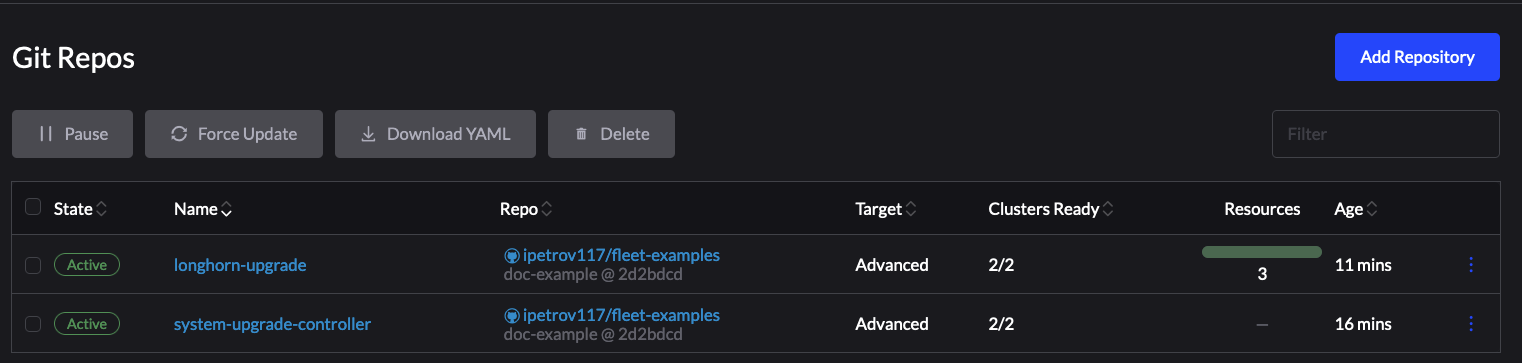

GitReporesource. This example creates theGitReporesource through theRancher UI.Following the steps described in the Upgrade Steps (Section 25.5.3.3.2, “Upgrade Steps”), we:

Named the GitRepo "longhorn-upgrade".

Passed the URL of the repository that will be used: https://github.com/suse-edge/fleet-examples.git

Passed the branch of the repository: "doc-example"

Passed the path to the eib-chart-upgrade fleet as seen in the repository: fleets/day2/system-upgrade-controller-plans/eib-chart-upgrade

Selected the target clusters and created the resource.

Figure 25.9: Successfully deployed SUC and longhorn GitRepos #
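As an alternative to the Rancher UI steps above, the same GitRepo can be declared as a Fleet manifest and applied to the management cluster. The following is a minimal sketch that only prints such a manifest; it assumes the GitRepo belongs in the fleet-default namespace (the Fleet workspace that holds Rancher-managed downstream clusters) and that the target clusters carry a label of your choosing. The gen_gitrepo function and the upgrade-group label in the usage example are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print a Fleet GitRepo manifest matching the Rancher UI steps.
# Assumptions: fleet-default namespace; target clusters selected by a label
# you have applied to them. gen_gitrepo is a hypothetical helper name.
set -euo pipefail

gen_gitrepo() {
  if [[ $# -ne 6 ]]; then
    echo "usage: gen_gitrepo <name> <repo-url> <branch> <path> <label-key> <label-value>" >&2
    return 1
  fi
  local name="$1" repo="$2" branch="$3" path="$4" label_key="$5" label_value="$6"

  # clusterSelector is a standard Kubernetes label selector over clusters.
  cat <<EOF
apiVersion: fleet.cattle.io/v1alpha1
kind: GitRepo
metadata:
  name: ${name}
  namespace: fleet-default
spec:
  repo: ${repo}
  branch: ${branch}
  paths:
  - ${path}
  targets:
  - clusterSelector:
      matchLabels:
        ${label_key}: "${label_value}"
EOF
}
```

For example, gen_gitrepo longhorn-upgrade https://github.com/suse-edge/fleet-examples.git doc-example fleets/day2/system-upgrade-controller-plans/eib-chart-upgrade upgrade-group longhorn | kubectl apply -f - would create the GitRepo, with kubectl pointed at the management cluster. Keeping the manifest in Git instead of clicking through the UI makes the target selection reviewable.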

Now we need to monitor the upgrade procedures on the clusters:

Check the status of the Upgrade Pods, following the directions from the SUC plan monitor (Section 25.2.2.2, “Monitor SUC Plans”) section.

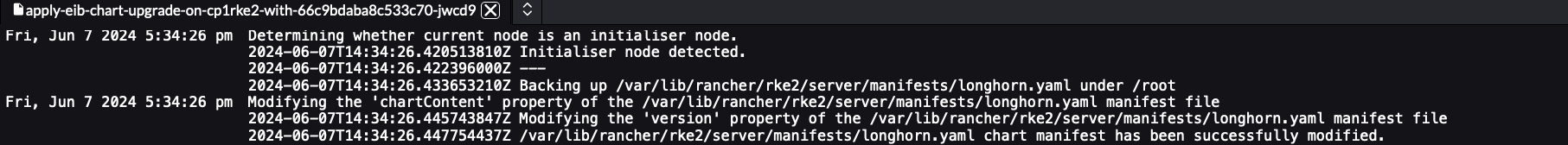

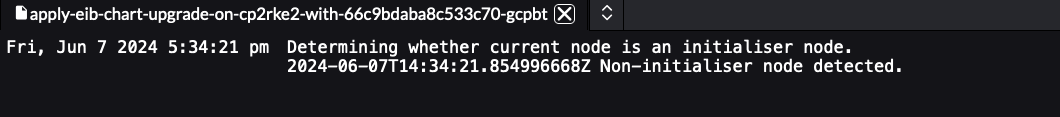

A successfully completed Upgrade Pod that has been working on an initialiser node should hold logs similar to:

Figure 25.10: Upgrade Pod running on an initialiser node #

A successfully completed Upgrade Pod that has been working on a non-initialiser node should hold logs similar to:

Figure 25.11: Upgrade Pod running on a non-initialiser node #

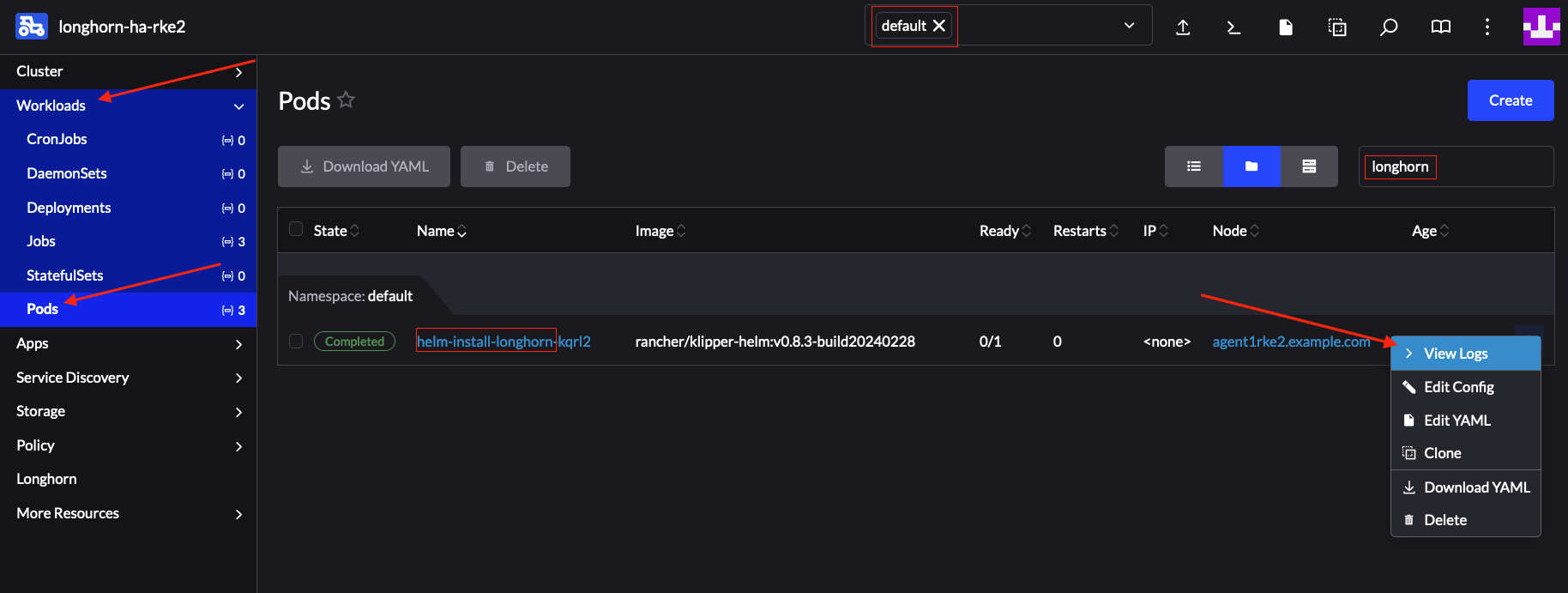

After a successful Upgrade Pod completion, we would also need to wait and monitor for the pods that wil lbe created by the helm controller. These pods will do the actual upgrade based on the file chagnes that the Upgrade Pod has done to the

HelmChartmanifest file.In your cluster, go to Workloads → Pods and search for a pod that contains the

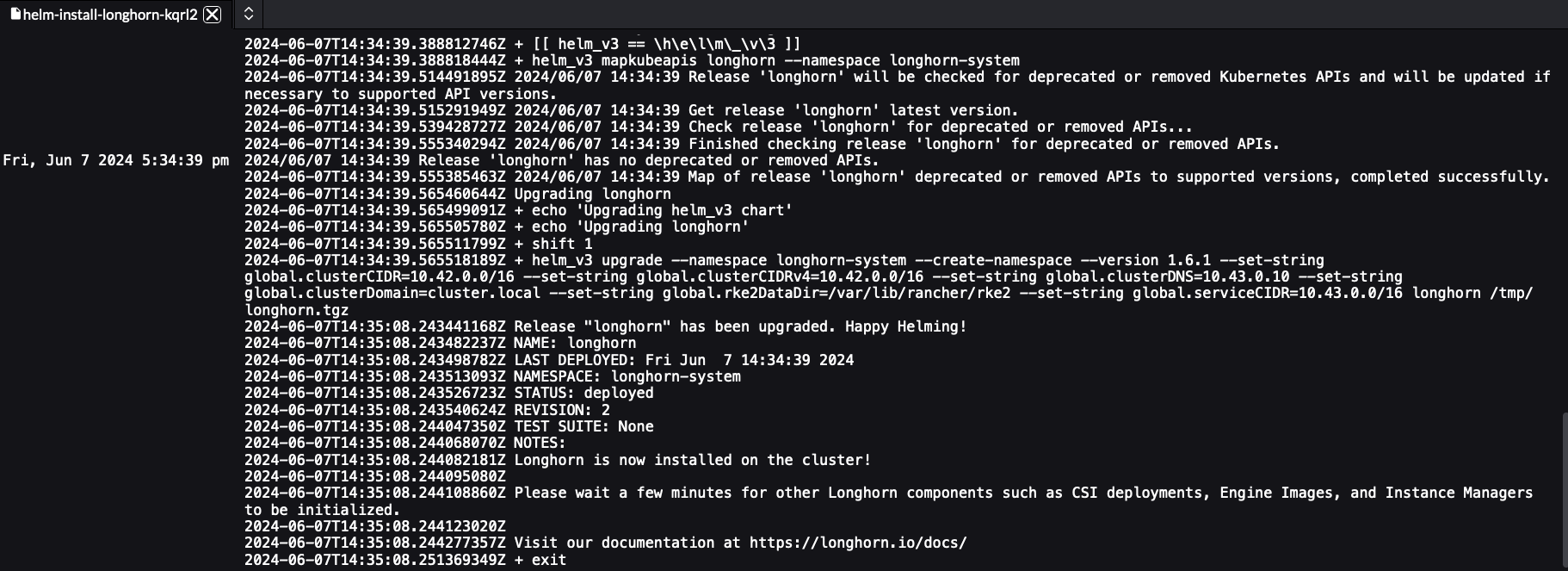

longhornstring in thedefaultnamespace. This should produce a pod with the naming templatehelm-install-longhorn-*, view the logs of this pod.Figure 25.12: Successfully completed helm-install pod #The logs should be similar to:

Figure 25.13: Successfully completed helm-install pod logs #
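Both monitoring steps can also be followed from the command line. This is a minimal sketch, assuming kubectl is configured for the downstream cluster, that the SUC Plan and its Upgrade Pods run in the cattle-system namespace, and that SUC labels those pods with upgrade.cattle.io/plan=<plan name>; check these assumptions against your setup and against the SUC plan monitor section (Section 25.2.2.2, “Monitor SUC Plans”). The helm-install lookup follows the default namespace and helm-install-longhorn-* naming described above. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: command-line view of the Upgrade Pods and the helm-install pod.
# Assumptions: kubectl targets the downstream cluster; Upgrade Pods run in
# cattle-system and carry the label upgrade.cattle.io/plan=<plan name>.
# Function names are hypothetical.
set -euo pipefail

# Print the logs of each pod reference (pod/<name>) in namespace $1.
print_pod_logs() {
  local ns="$1"; shift
  local pod
  for pod in "$@"; do
    echo "==> ${ns}/${pod#pod/}"
    kubectl logs -n "$ns" "$pod"
  done
}

# Logs of the Upgrade Pods created for the SUC Plan.
show_upgrade_pod_logs() {
  local ns="${1:-cattle-system}" plan="${2:-eib-chart-upgrade}"
  local pods
  pods="$(kubectl get pods -n "$ns" -l "upgrade.cattle.io/plan=${plan}" -o name)"
  # Intentional word splitting: one pod reference per line.
  # shellcheck disable=SC2086
  print_pod_logs "$ns" $pods
}

# Logs of the helm-install-<chart>-* pod(s) created by the helm controller.
show_helm_install_logs() {
  local ns="${1:-default}" chart="${2:-longhorn}"
  local pods
  pods="$(kubectl get pods -n "$ns" -o name | grep "^pod/helm-install-${chart}-" || true)"
  if [[ -z "$pods" ]]; then
    echo "no helm-install-${chart}-* pod found in namespace ${ns}" >&2
    return 1
  fi
  # shellcheck disable=SC2086
  print_pod_logs "$ns" $pods
}
```

Run show_upgrade_pod_logs first and, once the Upgrade Pods have completed, show_helm_install_logs; both accept an optional namespace and plan or chart name.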

Now that we have ensured that everything has completed successfully, we need to verify the version change.
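One way to check the version change from the command line is to read the version property of the HelmChart resource, which is the property the upgrade changes in the HelmChart manifest. This is a minimal sketch, assuming kubectl points at the downstream cluster and that the HelmChart is named longhorn and lives in the default namespace (where the helm-install-longhorn-* pod was found); verify_chart_version is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: confirm that the HelmChart resource requests the target version.
# Assumptions: HelmChart "longhorn" in namespace "default" (overridable);
# verify_chart_version is a hypothetical helper name.
set -euo pipefail

verify_chart_version() {
  local expected="$1" name="${2:-longhorn}" ns="${3:-default}"
  local actual
  actual="$(kubectl get helmcharts.helm.cattle.io "$name" -n "$ns" -o jsonpath='{.spec.version}')"

  if [[ "$actual" == "$expected" ]]; then
    echo "OK: HelmChart ${ns}/${name} is at version ${actual}"
  else
    echo "MISMATCH: HelmChart ${ns}/${name} is at version '${actual}', expected '${expected}'" >&2
    return 1
  fi
}
```

For example, verify_chart_version 1.6.1 should report OK after this upgrade. The field reflects the requested chart version; the helm-install pod logs from the previous step show whether the release itself was upgraded.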

This ensures that the Longhorn helm chart has been successfully upgraded and concludes this example.

If we need to revert to the previous Longhorn chart version, the previous Longhorn manifest is located at /root/longhorn.yaml on the initialiser node. This is because we set MANIFEST_BACKUP_DIR to /root in the SUC Plan.

25.5.3.3.4 Helm chart upgrade using a third-party GitOps tool #

There might be use-cases where users would like to use this upgrade procedure with a GitOps workflow other than Fleet (e.g. Flux).

To get the resources related to upgrading EIB-deployed Helm charts, first determine the Edge release tag of the suse-edge/fleet-examples repository that you would like to use.

After that, the resources can be found at fleets/day2/system-upgrade-controller-plans/eib-chart-upgrade, where:

plan.yaml - system-upgrade-controller Plan related to the upgrade procedure.

eib-chart-upgrade-script.yaml - Secret holding the upgrade script that is responsible for editing and upgrading the HelmChart manifest files.

eib-chart-upgrade-user-data.yaml - Secret holding a file that is utilised by the upgrade script; populated by the user with relevant chart upgrade data beforehand.

These Plan resources are interpreted by the system-upgrade-controller and should be deployed on each downstream cluster that holds charts in need of an upgrade. For information on how to deploy the system-upgrade-controller, see Section 25.2.1.3, “Deploying system-upgrade-controller when using a third-party GitOps workflow”.

To better understand how your GitOps workflow can be used to deploy the SUC Plans for the upgrade process, it can be beneficial to take a look at the overview (Section 25.5.3.3.1, “Overview”) of the process using Fleet.