SUSE Edge 3.1.0 Documentation

Welcome to the SUSE Edge documentation. Here you will find a high-level architectural overview, quick start guides, validated designs, guidance on using components, third-party integrations, and best practices for managing your edge computing infrastructure and workloads.

1 What is SUSE Edge?

SUSE Edge is a purpose-built, tightly integrated, and comprehensively validated end-to-end solution for addressing the unique challenges of deploying infrastructure and cloud-native applications at the edge. Its driving focus is to provide an opinionated, yet highly flexible, highly scalable, and secure platform that spans initial deployment image building, node provisioning and onboarding, application deployment, observability, and complete lifecycle operations. The platform is built on best-of-breed open source software from the ground up, consistent with both our 30+ year history of delivering secure, stable, and certified SUSE Linux platforms and our experience in providing highly scalable and feature-rich Kubernetes management with our Rancher portfolio. SUSE Edge builds on top of these capabilities to deliver functionality that can address a wide range of market segments, including retail, medical, transportation, logistics, telecommunications, smart manufacturing, and Industrial IoT.

2 Design Philosophy

The solution is designed with the notion that there is no "one-size-fits-all" edge platform due to customers’ widely varying requirements and expectations. Edge deployments push us to solve, and continually evolve, some of the most challenging problems, including massive scalability, restricted network availability, physical space constraints, new security threats and attack vectors, variations in hardware architecture and system resources, the requirement to deploy and interface with legacy infrastructure and applications, and customer solutions that have extended lifespans. Since many of these challenges differ from traditional ways of thinking, e.g. deployment of infrastructure and applications within data centers or in the public cloud, we have to look into the design in much more granular detail and rethink many common assumptions.

For example, we find value in minimalism, modularity, and ease of operations. Minimalism is important for edge environments since the more complex a system is, the more likely it is to break. Across hundreds of locations, or even hundreds of thousands, complex systems will break in complex ways. Modularity in our solution allows for more user choice while removing unneeded complexity in the deployed platform. We also need to balance these with ease of operations. Humans may make mistakes when repeating a process thousands of times, so the platform should make sure any potential mistakes are recoverable, eliminating the need for on-site technician visits, while also striving for consistency and standardization.

3 High Level Architecture

The high-level system architecture of SUSE Edge is broken into two core categories, namely "management" and "downstream" clusters. The management cluster is responsible for remote management of one or more downstream clusters, although it’s recognized that in certain circumstances downstream clusters need to operate without remote management, e.g. in situations where an edge site has no external connectivity and needs to operate independently. In SUSE Edge, the technical components used to operate both the management and downstream clusters are largely common, although they are likely to differ in both their system specifications and the applications that reside on top, i.e. the management cluster runs applications that enable systems management and lifecycle operations, whereas the downstream clusters fulfil the requirements for serving user applications.

3.1 Components used in SUSE Edge

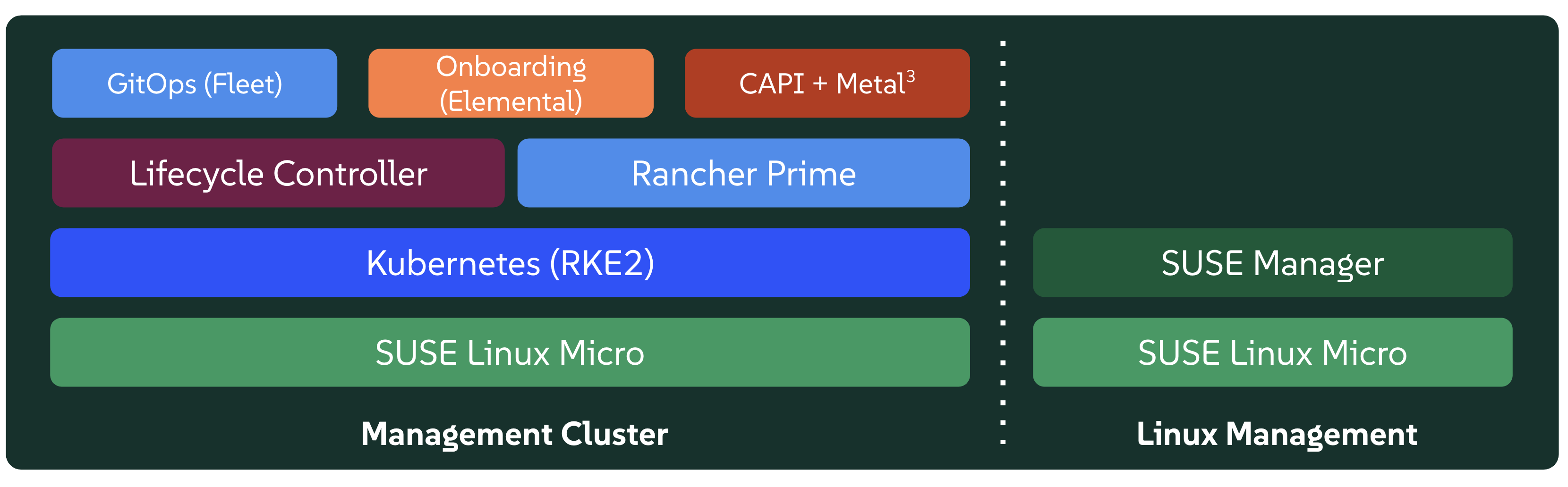

SUSE Edge comprises both existing SUSE and Rancher components along with additional features and components built by the Edge team to enable us to address the constraints and intricacies of edge computing. The components used within both the management and downstream clusters are explained below and shown in the simplified high-level architecture diagram above. Note that this isn’t an exhaustive list:

3.1.1 Management Cluster

Management: This is the centralized part of SUSE Edge that is used to manage the provisioning and lifecycle of connected downstream clusters. The management cluster typically includes the following components:

Multi-cluster management with Rancher Prime (Chapter 4, Rancher), enabling a common dashboard for downstream cluster onboarding and ongoing lifecycle management of infrastructure and applications, also providing comprehensive tenant isolation and IDP (Identity Provider) integrations, a large marketplace of third-party integrations and extensions, and a vendor-neutral API.

Linux systems management with SUSE Manager, enabling automated Linux patch and configuration management of the underlying Linux operating system (SLE Micro (Chapter 7, SLE Micro)) that runs on the downstream clusters. Note that while this component is containerized, it currently needs to run on a separate system to the rest of the management components, hence labelled as "Linux Management" in the diagram above.

A dedicated Lifecycle Management (Chapter 20, Upgrade Controller) controller that handles management cluster component upgrades to a given SUSE Edge release.

Remote system on-boarding into Rancher Prime with Elemental (Chapter 11, Elemental), enabling late binding of connected edge nodes to desired Kubernetes clusters and application deployment, e.g. via GitOps.

Optional full bare-metal lifecycle and management support with Metal3 (Chapter 8, Metal3), MetalLB (Chapter 17, MetalLB), and CAPI (Cluster API) infrastructure providers, enabling the full end-to-end provisioning of bare-metal systems that have remote management capabilities.

An optional GitOps engine called Fleet (Chapter 6, Fleet) for managing the provisioning and lifecycle of downstream clusters and applications that reside on them.

Underpinning the management cluster itself is SLE Micro (Chapter 7, SLE Micro) as the base operating system and RKE2 (Chapter 14, RKE2) as the Kubernetes distribution supporting the management cluster applications.

3.1.2 Downstream Clusters

Downstream: This is the distributed part of SUSE Edge that is used to run the user workloads at the Edge, i.e. the software that is running at the edge location itself, and is typically comprised of the following components:

A choice of Kubernetes distributions, with secure and lightweight distributions like K3s (Chapter 13, K3s) and RKE2 (Chapter 14, RKE2) (RKE2 is hardened, certified and optimized for usage in government and regulated industries).

NeuVector (Chapter 16, NeuVector) to enable security features like image vulnerability scanning, deep packet inspection, and real-time threat and vulnerability protection.

Software block storage with Longhorn (Chapter 15, Longhorn) to enable lightweight, persistent, resilient, and scalable block storage.

A lightweight, container-optimized, hardened Linux operating system with SLE Micro (Chapter 7, SLE Micro), providing an immutable and highly resilient OS for running containers and virtual machines at the edge. SLE Micro is available for both aarch64 and x86_64 architectures, and it also supports Real-Time Kernel for latency-sensitive applications (e.g. telco use-cases).

For connected clusters (i.e. those that do have connectivity to the management cluster) two agents are deployed, namely Rancher System Agent for managing the connectivity to Rancher Prime, and venv-salt-minion for taking instructions from SUSE Manager for applying Linux software updates. These agents are not required for management of disconnected clusters.

3.2 Connectivity

The above image provides a high-level architectural overview for connected downstream clusters and their attachment to the management cluster. The management cluster can be deployed on a wide variety of underlying infrastructure platforms, in both on-premises and cloud capacities, depending on networking availability between the downstream clusters and the target management cluster. The only requirement for this to function is that the API and callback URLs are accessible over the network that connects downstream cluster nodes to the management infrastructure.
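As an illustration of the reachability requirement above, the following minimal Python sketch checks whether a TCP connection can be opened to the host and port of a given URL. It is not part of SUSE Edge tooling; the function name `endpoint_reachable` and the URL in the usage comment are assumptions made for this example, and a successful TCP connection only shows that the network path is open, not that the API itself is healthy.

```python
import socket
from urllib.parse import urlparse


def endpoint_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to the URL's host and port succeeds."""
    parsed = urlparse(url)
    if not parsed.hostname:
        # Not a usable URL (no host component), so treat it as unreachable.
        return False
    # Fall back to the scheme's default port when none is given explicitly.
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    try:
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


# Hypothetical usage from a downstream node (URL is a placeholder):
#   endpoint_reachable("https://rancher.example.com")
```

Running a check like this from a downstream node against each management endpoint can help separate network issues from configuration issues before onboarding.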

It’s important to recognize that the way this connectivity is established depends on how the downstream cluster is deployed. The details are explained in much more depth in the next section, but to set a baseline understanding, there are three primary mechanisms for connected downstream clusters to be established as a "managed" cluster:

The downstream clusters are deployed in a "disconnected" capacity at first (e.g. via Edge Image Builder (Chapter 9, Edge Image Builder)), and are then imported into the management cluster if/when connectivity allows.

The downstream clusters are configured to use the built-in onboarding mechanism (e.g. via Elemental (Chapter 11, Elemental)), and they automatically register into the management cluster at first-boot, allowing for late-binding of the cluster configuration.

The downstream clusters have been provisioned with the bare-metal management capabilities (CAPI + Metal3), and they’re automatically imported into the management cluster once the cluster has been deployed and configured (via the Rancher Turtles operator).

It’s recommended that multiple management clusters are implemented to accommodate the scale of large deployments, optimize for bandwidth and latency concerns in geographically dispersed environments, and to minimize the disruption in the event of an outage or management cluster upgrade. You can find the current management cluster scalability limits and system requirements here.

4 Common Edge Deployment Patterns

Due to the varying set of operating environments and lifecycle requirements, we’ve implemented support for a number of distinct deployment patterns that loosely align to the market segments and use-cases that SUSE Edge operates in. We have documented a quickstart guide for each of these deployment patterns to help you get familiar with the SUSE Edge platform based on your needs. The three deployment patterns that we support today are described below, with a link to the respective quickstart page.

4.1 Directed network provisioning

Directed network provisioning is where you know the details of the hardware you wish to deploy to and have direct access to the out-of-band management interface to orchestrate and automate the entire provisioning process. In this scenario, our customers expect a solution that can provision edge sites in a fully automated manner from a centralized location, going much further than the creation of a boot image by minimizing the manual operations at the edge location: simply rack, power, and attach the required networks to the physical hardware, and the automation process powers up the machine via the out-of-band management (e.g. via the Redfish API) and handles the provisioning, onboarding, and deployment of infrastructure without user intervention. The key for this to work is that the systems are known to the administrators; they know which hardware is in which location, and that deployment is expected to be handled centrally.
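To give a sense of what "powering up the machine via the Redfish API" involves, the sketch below builds (but does not send) a power-on request using the standard Redfish `ComputerSystem.Reset` action. In SUSE Edge this step is handled by the automation itself, so this is only an illustration: the BMC address and the system ID `1` are placeholders, the helper name `build_power_on_request` is hypothetical, and authentication is deliberately omitted.

```python
import json
import urllib.request


def build_power_on_request(bmc_url: str, system_id: str) -> urllib.request.Request:
    """Build, without sending, a Redfish request that powers a system on."""
    # System IDs vary between hardware vendors; "1" below is only a placeholder.
    url = (
        f"{bmc_url.rstrip('/')}/redfish/v1/Systems/{system_id}"
        "/Actions/ComputerSystem.Reset"
    )
    body = json.dumps({"ResetType": "On"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder BMC address; a real request would also need credentials.
req = build_power_on_request("https://bmc.example.com", "1")
print(req.get_method(), req.full_url)
```

The point of the example is that a single authenticated HTTP call to the out-of-band controller replaces a manual power-on at the edge site, which is what makes centralized, hands-off provisioning possible.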

This solution is the most robust since you are directly interacting with the hardware’s management interface, are dealing with known hardware, and have fewer constraints on network availability. Functionally, this solution extensively uses Cluster API and Metal3 for automated provisioning from bare-metal, through operating system, Kubernetes, and layered applications, and provides the ability to link into the rest of the common lifecycle management capabilities of SUSE Edge post-deployment. The quickstart for this solution can be found in Chapter 1, BMC automated deployments with Metal3.

4.2 "Phone Home" network provisioning

Sometimes you are operating in an environment where the central management cluster cannot manage the hardware directly (for example, your remote network is behind a firewall or there is no out-of-band management interface; common in "PC" type hardware often found at the edge). In this scenario, we provide tooling to remotely provision clusters and their workloads with no need to know where hardware is being shipped when it is bootstrapped. This is what most people think of when they think about edge computing; it’s the thousands or tens of thousands of somewhat unknown systems booting up at edge locations and securely phoning home, validating who they are, and receiving their instructions on what they’re supposed to do. Our requirements here expect provisioning and lifecycle management with very little user-intervention other than either pre-imaging the machine at the factory, or simply attaching a boot image, e.g. via USB, and switching the system on. The primary challenges in this space are addressing scale, consistency, security, and lifecycle of these devices in the wild.

This solution provides a great deal of flexibility and consistency in the way that systems are provisioned and on-boarded, regardless of their location, system type or specification, or when they’re powered on for the first time. SUSE Edge enables full flexibility and customization of the system via Edge Image Builder, and leverages the registration capabilities of Rancher’s Elemental offering for node on-boarding and Kubernetes provisioning, along with SUSE Manager for operating system patching. The quickstart for this solution can be found in Chapter 2, Remote host onboarding with Elemental.

4.3 Image-based provisioning

For customers that need to operate in standalone, air-gapped, or network-limited environments, SUSE Edge provides a solution that enables customers to generate fully customized installation media that contains all of the required deployment artifacts to enable both single-node and multi-node highly-available Kubernetes clusters at the edge, including any workloads or additional layered components required, all without any network connectivity to the outside world, and without the intervention of a centralized management platform. The user experience closely follows the "phone home" solution in that installation media is provided to the target systems, but the solution will "bootstrap in-place". In this scenario, it’s possible to attach the resulting clusters into Rancher for ongoing management (i.e. going from a "disconnected" to "connected" mode of operation without major reconfiguration or redeployment), or they can continue to operate in isolation. Note that in both cases the same consistent mechanism for automating lifecycle operations can be applied.

Furthermore, this solution can be used to quickly create management clusters that may host the centralized infrastructure that supports both the "directed network provisioning" and "phone home network provisioning" models, as it can be the quickest and simplest way to provision all types of Edge infrastructure. This solution heavily utilizes the capabilities of SUSE Edge Image Builder to create fully customized and unattended installation media; the quickstart can be found in Chapter 3, Standalone clusters with Edge Image Builder.
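The three patterns described in this section can be summarized as a rough rule of thumb. The Python sketch below encodes one reading of that guidance; it is a simplification for illustration only, not an official selection procedure, and the `Site` fields and `suggest_pattern` function are names invented for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Pattern(Enum):
    DIRECTED = "Directed network provisioning"
    PHONE_HOME = '"Phone Home" network provisioning'
    IMAGE_BASED = "Image-based provisioning"


@dataclass
class Site:
    bmc_reachable: bool         # out-of-band management is reachable centrally
    hardware_known: bool        # administrators know which hardware is where
    can_reach_management: bool  # nodes can call back to the management cluster


def suggest_pattern(site: Site) -> Pattern:
    """Suggest a deployment pattern from a simplified view of an edge site."""
    # Known hardware with a reachable BMC allows fully centralized provisioning.
    if site.bmc_reachable and site.hardware_known:
        return Pattern.DIRECTED
    # Otherwise, nodes that can reach the management cluster can register themselves.
    if site.can_reach_management:
        return Pattern.PHONE_HOME
    # Standalone, air-gapped, or network-limited sites bootstrap in place.
    return Pattern.IMAGE_BASED


print(suggest_pattern(Site(False, False, True)).value)
```

Real deployments often mix patterns, for example using image-based provisioning to create the management cluster that later drives one of the other two models.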

5 SUSE Edge Stack Validation

All SUSE Edge releases consist of tightly integrated and thoroughly validated components that are versioned as one. As part of the continuous integration and stack validation efforts, which not only test the integration between components but also ensure that the system performs as expected under forced failure scenarios, the SUSE Edge team publishes all of the test runs and their results. The results, along with all input parameters, can be found at ci.edge.suse.com.

6 Full Component List

The full list of components, along with a link to a high-level description of each and how it’s used in SUSE Edge, can be found below:

Rancher (Chapter 4, Rancher)

Rancher Dashboard Extensions (Chapter 5, Rancher Dashboard Extensions)

SUSE Manager

Fleet (Chapter 6, Fleet)

SLE Micro (Chapter 7, SLE Micro)

Metal³ (Chapter 8, Metal3)

Edge Image Builder (Chapter 9, Edge Image Builder)

NetworkManager Configurator (Chapter 10, Edge Networking)

Elemental (Chapter 11, Elemental)

Akri (Chapter 12, Akri)

K3s (Chapter 13, K3s)

RKE2 (Chapter 14, RKE2)

Longhorn (Chapter 15, Longhorn)

NeuVector (Chapter 16, NeuVector)

MetalLB (Chapter 17, MetalLB)

KubeVirt (Chapter 18, Edge Virtualization)

System Upgrade Controller (Chapter 19, System Upgrade Controller)

Upgrade Controller (Chapter 20, Upgrade Controller)